Administrator tasks

This chapter is written for administrators who must manage and maintain Autonomous Identity.

ForgeRock® Autonomous Identity is an entitlements and roles analytics system that lets you fully manage your company’s access to your data.

An entitlement refers to the rights or privileges assigned to a user or thing for access to specific resources. A company can have millions of entitlements without a clear picture of what they are, what they do, and who they are assigned to. Autonomous Identity solves this problem by using advanced artificial intelligence (AI) and automation technology to determine the full entitlements landscape for your company. The system also detects potential risks arising from incorrect or over-provisioned entitlements that lead to policy violations. Autonomous Identity eliminates the manual re-certification of entitlements and provides a centralized, transparent, and contextual view of all access points within your company.

For installation instructions, refer to the Autonomous Identity Installation Guide.

For a description of the Autonomous Identity UI console, refer to the Autonomous Identity Users Guide.

Self service

Autonomous Identity provides a self service UI page for administrators to change their profile and password information.

The page also lets administrators create time-based API keys for users to access the Autonomous Identity system. For more information, refer to Generate an API key.

Reset your password

-

On the Autonomous Identity UI, click the admin drop-down on the top-left of the page.

-

Click Self Service.

-

On the Profile page, enter and re-enter a new password, and then click Save.

Click an example

Update your profile

-

On the Autonomous Identity UI, click the admin drop-down on the top-left of the page.

-

Click Self Service.

-

On the Profile page, click Edit personal info to update your profile details:

You cannot change your email address or group ID as these are used to identify each user. -

Update the display name.

-

Update your distinguished name (DN).

-

Update your uid.

-

-

Click Save to apply your changes.

Click an example

Manage identities

The Manage Identities page lets administrators add or edit, assign roles, and deactivate users to Autonomous Identity.

View the default roles

-

On the Autonomous Identity UI, click the administration icon on the navigation menu, and then click Manage.

-

On the Manage Identities page, click Roles.

-

Select a specific role, and then click Edit to view its details.

-

Click through the Details and Permissions to view its details. You cannot change the permissions in these roles.

-

Click Role Members to access the members associated with this role. If you want to add a user to this Role group, click New Role Member and enter the user’s name. You can enter multiple users. When finished, click Save.

Click an example

Create a new user

-

On the Autonomous Identity UI, click the administration icon on the navigation menu, and then click Manage.

-

On the Manage Identities page, click New User.

-

Enter the Display Name, Email Address, DN, Gid Number, Uid, and Password for the user.

-

Click Save.

-

Click Authorization Roles, and then click New Authorization Roles. This step is important to assign the proper role to the user.

-

Select a role to assign the user, and then click Save.

Click an example

Reset a user’s password

-

On the Autonomous Identity UI, click the administration icon on the navigation menu, and then click Manage.

-

On the Manage Identities page, search for a user.

-

For a specific user, click Edit.

-

Click Reset Password, enter a temporary password, and then click Save.

Click an example

Add a role to an existing user

Often administrators need to assign roles to existing members. There are two ways to do this: from the user’s detail page and through the role’s Role Members page (refer to View the default roles).

-

On the Autonomous Identity UI, click the administration icon on the navigation menu, and then click Manage.

-

On the Manage Identities page, search for a user.

-

For a specific user, click Edit.

-

Click Authorization Roles, and then click New Authorization Roles.

-

Select one or more roles to add, and then click Save.

Click an example

Prepare data

Autonomous Identity administrators and deployers must set up additional tasks prior to your installment.

The following are some deployments tasks that may occur:

Data preparation

Once you have deployed Autonomous Identity, you can prepare your dataset into a format that meets the schema.

The initial step is to obtain the data as agreed upon between ForgeRock and your company. The files contain a subset of user attributes from the HR database and entitlement metadata required for the analysis. Only the attributes necessary for analysis are used.

There are a number of steps that must be carried out before your production entitlement data is input into Autonomous Identity. The summary of these steps are outlined below:

Data collection

Typically, the raw client data is not in a form that meets the Autonomous Identity schema. For example, a unique user identifier can have multiple names, such as user_id, account_id, user_key, or key. Similarly, entitlement columns can have several names, such as access_point, privilege_name, or entitlement.

To get the correct format, here are some general rules:

-

Submit the raw client data in

.csvfile format. The data can be in a single file or multiple files. Data includes application attributes, entitlement assignments, entitlements decriptions, and identities data. -

Duplicate values should be removed.

-

Add optional columns for additional training attributes, for example,

MANAGERS_MANAGERandMANAGER_FLAG. You can add these additional attributes to the schema using the Autonomous Identity UI. For more information, refer to Set Entity Definitions. -

Make a note of those attributes that differ from the Autonomous Identity schema, which is presented below. This is crucial for setting up your attribute mappings. For more information, refer to Set Attribute Mappings.

CSV files and schema

The required attributes for the schema are as follows:

| Files | Schema |

|---|---|

applications.csv |

This file depends on the attributes that the client wants to include. Here are some required columns:

|

assignments.csv |

|

entitlements.csv |

|

identities.csv |

|

Deployment tasks

Autonomous Identity administrators and deployers must set up additional tasks during installment.

The following are some deployments tasks that may occur:

Customize the domain and namespace

By default, the Autonomous Identity URL and domain for the UI console is set to autoid-ui.forgerock.com,

and the URL and domain for the self-service feature is autoid-selfservice.forgerock.com.

| These instructions are for new deployments. To change the domain and certificates in existing deployments, refer to Customize domain and namespace (existing deployments). |

Customize domain and namespace (new deployments)

-

Customize the domain name and target environment by editing the

/autoid-config/vars.xmlfile. By default, the domain name is set toforgerock.comand the target environment is set toautoid. The default Autonomous Identity URL will be:https://autoid-ui.forgerock.com. For example, set the domain name to the following:domain_name: example.com target_environment: autoid

-

If you set up your domain name and target environment in the previous step, you need to change the certificates to reflect the changes. Autonomous Identity generates self-signed certificates for its default configuration. You must generate new certificates as follows:

-

Generate the private key (that is,

server.key).openssl genrsa -out server.key 2048

-

Generate the certificate signing request using your key. When prompted enter attributes sent with your certificate request:

openssl req -new -key server.key -out server.csr Country Name (2 letter code) [XX]:US State or Province Name (full name) {}:Texas Locality Name (eg, city) [Default City]:Austin Organization Name (eg, company) [Default Company Ltd]:Ping Organizational Unit Name (eg, section) []:Eng Common Name (eg, your name or your server’s hostname) []:autoid-ui.example.com Email Address []: A challenge password []: An optional company name []:

-

-

Generate the self-signed certificate.

openssl x509 -req -days 365 -in server.csr -signkey server.key -out server.crt

-

Copy the certificate to the

/autoid-config/certsdirectory. Make sure to use the following filename:nginx-jas-wildcard.pem.openssl x509 -in server.crt -out nginx-jas-wildcard.pem cp nginx-jas-wildcard.pem ~/autoid-config/certs

-

Copy the key to the

/autoid-config/certsdirectory. Make sure to use the following filename:nginx-jas.key', depending on where your `/autoid-config/certs/resides.cp -i ~/.ssh/server.key /autoid-config/certs/nginx-jas.key or scp -i ~/.ssh/server.key autoid@remotehost:/autoid-config/certs/nginx-jas.key

-

Run the Autonomous Identity deployer. Make sure that there are no errors after running the

./deployer.sh runcommand../deployer.sh run

-

Make the domain changes on your DNS server or update your

/etc/hosts(Linux/Unix) file orC:\Windows\System32\drivers\etc\hosts(Windows) locally on your machine.

-

Customize domain and namespace (existing deployments)

-

Modify the server name values with your updated domain name in the following files under

/opt/autoid/mounts/nginx/conf.d:-

api.conf

-

ui.conf

-

kibana.conf

-

jas.conf

-

-

Copy the SSL certificate file and corresponding SSL certificate key to the

/opt/autoid/mounts/nginx/certdirectory. The/opt/autoid/mounts/nginx/certdirectory is mounted under/etc/nginx/certin the container. -

Modify

ssl_certificateandssl_certificate_keyin/opt/autoid/mounts/nginx/nginx.confwith the correct filenames. Only the name of the files need to be updated, the path stays the same.When using self-signed certificates, you need to import the new certificates in the JAS keystore and truststore at:

/opt/autoid/certs/jas/jas-client-keystore.jksand/opt/autoid/certs/jas/jas-server-truststore.jks:keytool -importcert -keystore jas-client-keystore.jks -alias myalias -file /opt/autoid/mounts/nginx/cert/cert.crt -noprompt -keypass mypass -storepass mypass keytool -importcert -keystore jas-server-truststore.jks -alias myalias -file /opt/autoid/mounts/nginx/cert/cert.crt -noprompt -keypass mypass -storepass mypass

-

Restart the nginx container:

docker stack rm nginx docker stack deploy -c /opt/autoid/res/nginx/docker-compose.yml nginx

-

Update the new domain name in your hosts file where an entry exists for

JAS. For example, the default JAS url isautoid-jas.forgerock.com.Change the JAS URL with your domain name. -

Update the

JAS_URLenvironment variable on all nodes by updating and sourcing your.bashrcfile. -

Restart Spark and Livy.

Configuring your filters

The filters on the Applications pages let you focus your searches based on entitlement and user attributes. In most cases, the default filters should suffice for most environments. However, if you need to customize the filters, you can do so by accessing Searchable attribute under entity definitions. For information, refer to Set Entity Definitions.

The default filters for an entitlement are the following:

-

Risk Level

-

Criticality

The default filters for an user attributes are the following:

-

User Department Name

-

Line of Business Subgroup

-

City

-

Jobcode Name

-

User Employee Type

-

Chief Yes No

-

Manager Name

-

Line of Business

-

Cost Center

Change the Vault Passwords

Autonomous Identity uses the ansible vault to store passwords in encrypted files, rather than in plaintext. Autonomous Identity stores the vault file at /autoid-config/vault.yml

saves the encrypted passwords to /config/.autoid_vault_password

. The /config/

mount is internal to the deployer container. The default encryption algorithm used is AES256.

By default, the /autoid-config/vault.yml

file uses the following parameters:

configuration_service_vault: basic_auth_password: Welcome123 openldap_vault: openldap_password: Welcome123 cassandra_vault: cassandra_password: Welcome123 cassandra_admin_password: Welcome123 mongo_vault: mongo_admin_password: Welcome123 mongo_root_password: Welcome123 elastic_vault: elastic_admin_password: Welcome123 elasticsearch_password: Welcome123

Assume that the vault file is encrypted during the installation. To edit the file:

Set Up single sign-on (SSO)

Autonomous Identity supports single sign-on (SSO) using OpenID Connect (OIDC) JWT tokens. SSO lets you log in once and access multiple applications without the need to re-authenticate yourself. You can use any third-party identity provider (IdP) to connect to Autonomous Identity.

There are two scenarios for SSO configuration:

-

Set up SSO for initial deployments. In this example, we use ForgeRock Access Management (AM) as an OpenID Connect (OIDC) IdP for Autonomous Identity during the original installation of Autonomous Identity. Refer to Set up SSO in initial deployments.

-

Set up SSO for existing deployments. For procedures to set up SSO in an existing Autonomous Identity deployment, see Set up SSO in existing deployments.

|

If you set up SSO-only, be aware that the following services are not deployed with this setting:

If you want to use these services and SSO, set up the authentication as |

Set up SSO in initial deployments

The following procedure requires a running instance of ForgeRock AM. For more information, refer to ForgeRock Access Management Authentication and Single Sign-On Guide.

-

First, set up your hostnames locally in

/etc/hosts(Linux/Unix) file orC:\Windows\System32\drivers\etc\hosts(Windows):35.189.75.99 autoid-ui.forgerock.com autoid-selfservice.forgerock.com 35.246.65.234 openam.example.com

-

Open a browser and point to

http://openam.example.com:8080/openam. Log in with username:amadmin, password:cangetinam. -

In AM, select Realm > Identities > Groups tab, and add the following groups:

-

AutoIdAdmin

-

AutoIdEntitlementOwner

-

AutoIdExecutive

-

AutoIdSupervisor

-

AutoIdUser

-

AutoIdAppOwner

-

AutoIdRoleOwner

-

AutoIdRoleEngineer

The group names above are arbitrary and are defined in the

/autoid-config/vars.ymlfile. Ensure that the groups you create in AM match the values in thevars.ymlfile.

-

-

Add the

demouser to each group. -

Go back to the main AM Admin UI page. Click Configure OAuth Provider.

-

Click Configure OpenID Connect, and then Create.

-

Select desired Realm > Go to Applications > OAuth 2.0, and then click Add Client. Enter the following properties, specific to your deployment:

Client ID: <autoid> Client secret: <password> Redirection URIs: https://<autoid-ui>.<domain>/api/sso/finish Scope(s): openid profile

For example:

Client ID: autoid Client secret: Welcome123 Redirection URIs: https://autoid-ui.forgerock.com/api/sso/finish Scope(s): openid profile

-

On the New Client page, go to to the Advanced tab, and enable

Implied Consent. Next, change theToken Endpoint Authentication Methodtoclient_secret_post. -

Edit the OIDC claims script to return

roles (groups), so that AM can match the Autonomous Identity groups. Additionally, add the groups as a claim in the script:"groups": { claim, identity -> [ "groups" : identity.getMemberships(IdType.GROUP).collect { group -> group.name }]}In the

utils.setScopeClaimsMapblock, add:groups: ['groups']

For more information about the OIDC claims script, refer to the ForgeRock Knowledge Base. The

id_tokenreturns the content that includes the group names.{ "at_hash": "QJRGiQgr1c1sOE4Q8BNyyg", "sub": "demo", "auditTrackingId": "59b6524d-8971-46da-9102-704694cae9bc-48738", "iss": "http://openam.example.com:8080/openam/oauth2", "tokenName": "id_token", "groups": [ "AutoIdAdmin", "AutoIdSupervisor", "AutoIdUser", "AutoIdExecutive", "AutoIdEntitlementOwner", "AutoIdAppOwner", "AutoIdRoleOwner", "AutoIdRoleEngineer" ], "given_name": "demo", "aud": "autoid", "c_hash": "SoLsfc3zjGq9xF5mJG_C9w", "acr": "0", "org.forgerock.openidconnect.ops": "B15A_wXm581fO8INtYHHcwSQtJI", "s_hash": "bOhtX8F73IMjSPeVAqxyTQ", "azp": "autoid", "auth_time": 1592390726, "name": "demo", "realm": "/", "exp": 1592394729, "tokenType": "JWTToken", "family_name": "demo", "iat": 1592391129, "email": "demo@example.com" }For more information on how to retrieve the id_tokenfor observation, refer to OpenID Connect 1.0 Endpoints.You have successfully configured AM as an OIDC provider.

-

Next, we set up Autonomous Identity. Change to the Autonomous Identity install directory on the deployer machine.

cd ~/autoid-config/

-

Open a text editor, and set the SSO parameters in the

/autoid-config/vars.ymlfile. Make sure to changeLDAPtoSSO.authentication_option: "SSO" oidc_issuer: "http://openam.example.com:8080/openam/oauth2" oidc_auth_url: "http://openam.example.com:8080/openam/oauth2/authorize" oidc_token_url: "http://openam.example.com:8080/openam/oauth2/access_token" oidc_user_info_url: "http://openam.example.com:8080/openam/oauth2/userinfo" oidc_jwks_url: "http://openam.example.com:8080/openam/oauth2/connect/jwk_uri" oidc_callback_url: "https://autoid-ui.forgerock.com/api/sso/finish" oidc_client_scope: 'openid profile' oidc_groups_attribute: groups oidc_uid_attribute: sub oidc_client_id: autoid oidc_client_secret: Welcome1 admin_object_id: AutoIdAdmin entitlement_owner_object_id: AutoIdEntitlementOwner executive_object_id: AutoIdExecutive supervisor_object_id: AutoIdSupervisor user_object_id: AutoIdUser application_owner_object_id: AutoIdAppOwner role_owner_object_id: AutoIdRoleOwner role_engineer_object_id: AutoIdRoleEngineer oidc_end_session_endpoint: "http://openam.example.com:8080/openam/oauth2/logout" oidc_logout_redirect_url: "http://openam.example.com:8088/openam/logout"

-

On the target machine, edit the

/etc/hostsfile or your DNS server, and add an entry foropenam.example.com.35.134.60.234 openam.example.com

-

On the deployer machine, run

deployer.shto push the new configuration.$ deployer.sh run

-

Test the connection now. Access

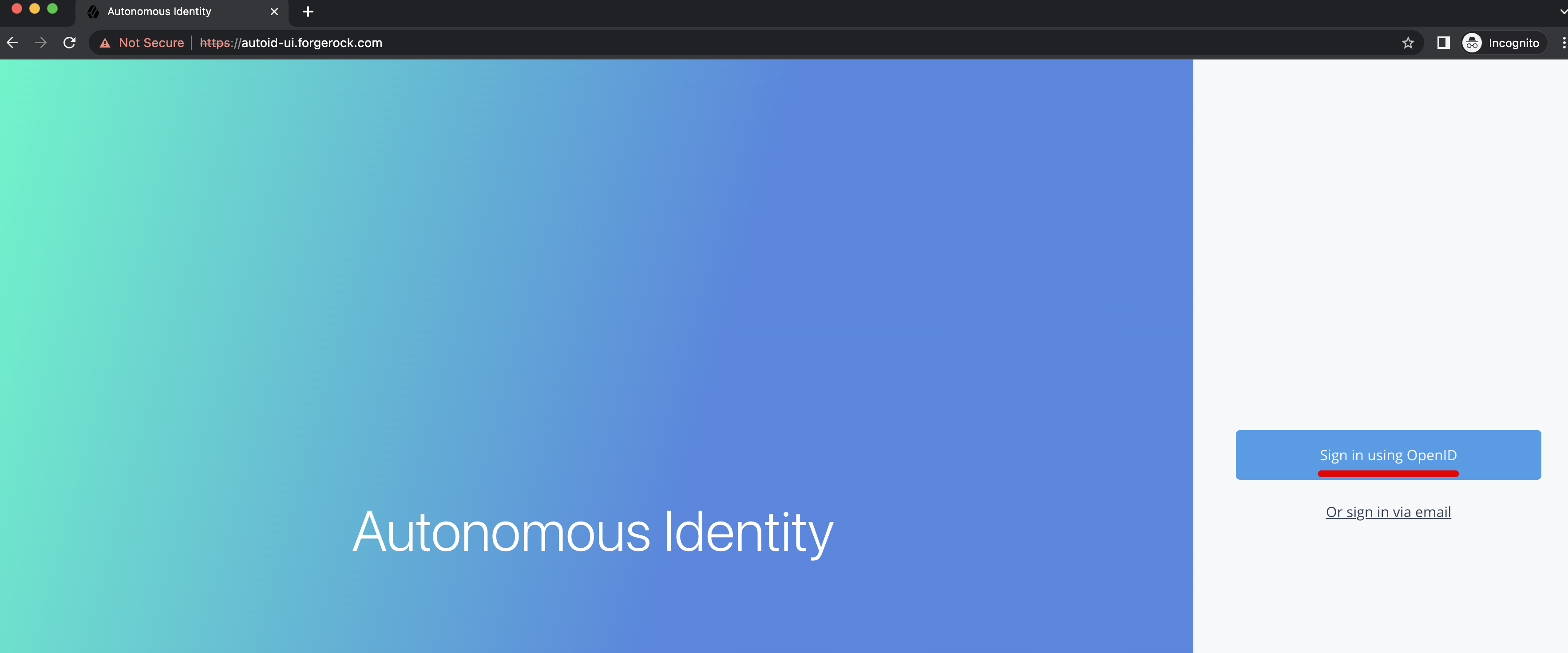

https://autoid-ui/forgerock.com. The redirect should occur with the following:

Set up SSO in existing deployments

-

First, update the permissions configuration object as follows:

-

Obtain an Autonomous Identity admin level JWT bearer token. You can obtain it using curl and the Autonomous Identity login endpoint with administrator credentials. Use your admin username and password:

curl -X POST \ https://autoid-ui.forgerock.com/api/authentication/login \ -k \ -H 'Content-Type: application/json' \ -d '{ "username": "bob.rodgers@forgerock.com", "password": "Welcome123" }'

The response is:

{ "user": { "dn": "cn=bob.rodgers@zoran.com,ou=People,dc=zoran,dc=com", "controls": [], "displayName": "Bob Rodgers", "gidNumber": "999", "uid": "bob.rodgers", "_groups": [ "Zoran Admin" ] }, "token": "eyJhbGciOiJIUzI1NiIsInR5 …” } -

Use curl and the bearer token from the previous step to obtain the Autonomous Identity JAS tenant ID:

curl -k -L -X GET 'https://autoid-ui.forgerock.com/jas/tenants' \ -H 'Authorization: Bearer <token_value>'

The response is:

[ { "id": "31092f95-3eed-418e-8ffb-f1b707bc9372", "name": "autonomous-iam", "description": "System Tenancy", "created": "2023-03-02T20:15:30.166Z" } ] -

To open the current permissions object, run the following curl command with the bearer token and tenant ID from the previous steps:

curl -k -L -X POST 'https://autoid-ui.forgerock.com/jas/entity/search/common/config' \ -H 'X-TENANT-ID: <tenant_id>' \ -H 'Content-Type: application/json' \ -H 'Authorization: Bearer <token_value> \ -d '{ "query": { "query": { "bool": { "must": { "match": { "name": "PermissionsConf" } } } } } }'An example response is as follows:

You can find the permissions value under the hitsobject >hitsarray >_source>value.{ "took": 1, "timed_out": false, "_shards": { "total": 3, "successful": 3, "skipped": 0, "failed": 0 }, "hits": { "total": { "value": 1, "relation": "eq" }, "max_score": 0.9808291, "hits": [ { "_index": "autonomous-iam_common_config_latest", "_type": "_doc", "_id": "f72a58dd8bf5a38205c2d4c9eeafe85ebbaa1c3a2670b45c57f0219022b90ea6fc50ebf88e720c98410600e427528f0fe702b55f70975c8f49cb73c64ab1e101", "_score": 0.9808291, "_source": { "name": "PermissionsConf", "value": { "permissions": { "Zoran Admin": { "title": "Admin", "can": "*" }, … -

Edit the Permissions object in the template by replacing the "###Zoran_.._Token###" fields with the SSO group ID. For example, the Permissions object would appear as follows before the change:

"###Zoran_Admin_Token###": { "title": "Admin", "can": "*" },For SSO only setup, the following is used:

f5bd09ca-096c-4a6e-b06d-65decc22cb09is an example group ID for an organization’s administrators."f5bd09ca-096c-4a6e-b06d-65decc22cb09": { "title": "Admin", "can": "*" },For SSO and local setup, use the following:

"###Zoran_Admin_Token###": { "title": "Admin", "can": "*" }, "f5bd09ca-096c-4a6e-b06d-65decc22cb09": { "title": "Admin", "can": "*" }, -

Update the Permissions object in JAS with the edited JSON file:

curl -k -L -X PATCH 'https://autoid-ui.forgerock.com/jas/entity/upsert/common/config' \ -H 'X-TENANT-ID: <tenant_id>' \ -H 'Content-Type: application/json' \ -H 'Authorization: Bearer <token_value>' \ -d @<path/to/SSO.json>

A successful response is:

{ "indexName": "autonomous-iam_common_config_latest", "indices": { "latest": "autonomous-iam_common_config_latest", "log": "autonomous-iam_common_config" } } -

Depending on how you want to configure SSO, use one of the following templates:

The ContextID is an arbitrary UUID that can be any UUID. It is used just to track this transaction. localAndSSO template (

LocalAndSSO.json){ "branch": "actual", "contextId": "ecba1baa-66d1-4548-8c74-6012bea9b838", "indexingRequired": true, "tags": {}, "indexInSync": true, "entityData": [ { "name": "PermissionsConf", "value": { "permissions": { "Zoran Admin": { "title": "Admin", "can": "*" }, "###Zoran_Admin_Token###": { "title": "Admin", "can": "*" }, "Zoran Role Engineer": { "title": "Role Engineer", "can": [ "SHOW__ROLE_PAGE", "SEARCH__ALL_ROLES", "CREATE__ROLE", "UPDATE__ROLE", "DELETE__ROLE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "STATS_ALL__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "###Zoran_Role_Engineer_Token###": { "title": "Role Engineer", "can": [ "SHOW__ROLE_PAGE", "SEARCH__ALL_ROLES", "CREATE__ROLE", "UPDATE__ROLE", "DELETE__ROLE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "STATS_ALL__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "Zoran Role Owner": { "title": "Role Owner", "can": [ "SHOW__ROLE_PAGE", "SEARCH__ROLES", "CREATE__ROLE", "UPDATE__ROLE", "DELETE__ROLE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "STATS__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLES", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "###Zoran_Role_Owner_Token###": { "title": "Role Owner", "can": [ "SHOW__ROLE_PAGE", "SEARCH__ROLES", "CREATE__ROLE", "UPDATE__ROLE", "DELETE__ROLE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "STATS__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLES", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "Zoran Role Auditor": { "title": "Role Auditor", "can": [ "SEARCH__ALL_ROLES", "STATS_ALL__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS" ] }, "###Zoran_Role_Auditor_Token###": { "title": "Role Auditor", "can": [ "SEARCH__ALL_ROLES", "STATS_ALL__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS" ] }, "Zoran Application Owner": { "title": "Application Owner", "can": [ "SHOW__APPLICATION_PAGE", "SEARCH__USER", "SEARCH__ENTITLEMENTS_BY_APP_OWNER", "SHOW_OVERVIEW_PAGE", "SHOW__ENTITLEMENT", "SHOW__ENTITLEMENT_USERS", "SHOW__APP_OWNER_FILTER_OPTIONS", "SHOW__ENTT_OWNER_UNSCORED_ENTITLEMENTS", "SHOW__ENTT_OWNER_PAGE", "SHOW__ENTT_OWNER_USER_PAGE", "SHOW__ENTT_OWNER_ENT_PAGE", "SHOW__USER_ENTITLEMENTS", "SHOW__RULES_BY_APP_OWNER", "REVOKE__CERTIFY_ACCESS", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "###Zoran_Application_Owner_Token###": { "title": "Application Owner", "can": [ "SHOW__APPLICATION_PAGE", "SEARCH__USER", "SEARCH__ENTITLEMENTS_BY_APP_OWNER", "SHOW_OVERVIEW_PAGE", "SHOW__ENTITLEMENT", "SHOW__ENTITLEMENT_USERS", "SHOW__APP_OWNER_FILTER_OPTIONS", "SHOW__ENTT_OWNER_UNSCORED_ENTITLEMENTS", "SHOW__ENTT_OWNER_PAGE", "SHOW__ENTT_OWNER_USER_PAGE", "SHOW__ENTT_OWNER_ENT_PAGE", "SHOW__USER_ENTITLEMENTS", "SHOW__RULES_BY_APP_OWNER", "REVOKE__CERTIFY_ACCESS", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "Zoran Entitlement Owner": { "title": "Entitlement Owner", "can": [ "SEARCH__ENTITLEMENTS_BY_ENTT_OWNER", "SHOW_OVERVIEW_PAGE", "SHOW__ENTITLEMENT", "SHOW__ENTITLEMENT_USERS", "SHOW__ENTT_OWNER_FILTER_OPTIONS", "SHOW__ENTT_OWNER_UNSCORED_ENTITLEMENTS", "SHOW__ENTT_OWNER_PAGE", "SHOW__ENTT_OWNER_USER_PAGE", "SHOW__ENTT_OWNER_ENT_PAGE", "SHOW__USER_ENTITLEMENTS", "SHOW__RULES_BY_ENTT_OWNER", "REVOKE__CERTIFY_ACCESS", "SHOW__USER", "SHOW__CERTIFICATIONS", "LOOKUP_USER", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "###Zoran_Entitlement_Owner_Token###": { "title": "Entitlement Owner", "can": [ "SEARCH__ENTITLEMENTS_BY_ENTT_OWNER", "SHOW_OVERVIEW_PAGE", "SHOW__ENTITLEMENT", "SHOW__ENTITLEMENT_USERS", "SHOW__ENTT_OWNER_FILTER_OPTIONS", "SHOW__ENTT_OWNER_UNSCORED_ENTITLEMENTS", "SHOW__ENTT_OWNER_PAGE", "SHOW__ENTT_OWNER_USER_PAGE", "SHOW__ENTT_OWNER_ENT_PAGE", "SHOW__USER_ENTITLEMENTS", "SHOW__RULES_BY_ENTT_OWNER", "REVOKE__CERTIFY_ACCESS", "SHOW__USER", "SHOW__CERTIFICATIONS", "LOOKUP_USER", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "Zoran Executive": { "title": "Executive", "can": [ "SEARCH__USER", "SHOW__ASSIGNMENTS_STATS", "SHOW__COMPANY_PAGE", "SHOW__COMPANY_ENTITLEMENTS_DATA", "SHOW__CRITICAL_ENTITLEMENTS", "SHOW__ENTITLEMENT_AVG_GROUPS", "SHOW__USER_ENTITLEMENTS" ] }, "###Zoran_Executive_Token###": { "title": "Executive", "can": [ "SEARCH__USER", "SHOW__ASSIGNMENTS_STATS", "SHOW__COMPANY_PAGE", "SHOW__COMPANY_ENTITLEMENTS_DATA", "SHOW__CRITICAL_ENTITLEMENTS", "SHOW__ENTITLEMENT_AVG_GROUPS", "SHOW__USER_ENTITLEMENTS" ] }, "Zoran Supervisor": { "title": "Supervisor", "can": [ "SEARCH__USER", "SHOW_OVERVIEW_PAGE", "SHOW__SUPERVISOR_FILTER_OPTIONS", "SHOW__SUPERVISOR_PAGE", "SHOW__SUPERVISOR_ENTITLEMENT_USERS", "SHOW__SUPERVISOR_USER_ENTITLEMENTS", "SHOW__SUPERVISOR_UNSCORED_ENTITLEMENTS", "SEARCH__SUPERVISOR_USER_ENTITLEMENTS", "REVOKE__CERTIFY_ACCESS", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "###Zoran_Supervisor_Token###": { "title": "Supervisor", "can": [ "SEARCH__USER", "SHOW_OVERVIEW_PAGE", "SHOW__SUPERVISOR_FILTER_OPTIONS", "SHOW__SUPERVISOR_PAGE", "SHOW__SUPERVISOR_ENTITLEMENT_USERS", "SHOW__SUPERVISOR_USER_ENTITLEMENTS", "SHOW__SUPERVISOR_UNSCORED_ENTITLEMENTS", "SEARCH__SUPERVISOR_USER_ENTITLEMENTS", "REVOKE__CERTIFY_ACCESS", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "Zoran User": { "title": "User", "can": [ "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "###Zoran_User_Token###": { "title": "User", "can": [ "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "Zoran Service Connector": { "title": "Service Connector", "can": [ "SERVICE_CONNECTOR", "SHOW__API_KEY_MGMT_PAGE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "SHOW__RULES", "REVOKE__CERTIFY_ACCESS" ] }, "###Zoran_Service_Connector_Token###": { "title": "Service Connector", "can": [ "SERVICE_CONNECTOR", "SHOW__API_KEY_MGMT_PAGE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "SHOW__RULES", "REVOKE__CERTIFY_ACCESS" ] } } } } ] }SSO template (

SSO.json){ "branch": "actual", "contextId": "ecba1baa-66d1-4548-8c74-6012bea9b838", "indexingRequired": true, "tags": {}, "indexInSync": true, "entityData": [ { "name": "PermissionsConf", "value": { "permissions": { "###Zoran_Admin_Token###": { "title": "Admin", "can": "*" }, "###Zoran_Role_Engineer_Token###": { "title": "Role Engineer", "can": [ "SHOW__ROLE_PAGE", "SEARCH__ALL_ROLES", "CREATE__ROLE", "UPDATE__ROLE", "DELETE__ROLE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "STATS_ALL__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "###Zoran_Role_Owner_Token###": { "title": "Role Owner", "can": [ "SHOW__ROLE_PAGE", "SEARCH__ROLES", "CREATE__ROLE", "UPDATE__ROLE", "DELETE__ROLE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "STATS__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLES", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "###Zoran_Role_Auditor_Token###": { "title": "Role Auditor", "can": [ "SEARCH__ALL_ROLES", "STATS_ALL__USERS", "SEARCH_ALL__USERS", "SEARCH_ALL__ENTITLEMENTS", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS" ] }, "###Zoran_Application_Owner_Token###": { "title": "Application Owner", "can": [ "SHOW__APPLICATION_PAGE", "SEARCH__USER", "SEARCH__ENTITLEMENTS_BY_APP_OWNER", "SHOW_OVERVIEW_PAGE", "SHOW__ENTITLEMENT", "SHOW__ENTITLEMENT_USERS", "SHOW__APP_OWNER_FILTER_OPTIONS", "SHOW__ENTT_OWNER_UNSCORED_ENTITLEMENTS", "SHOW__ENTT_OWNER_PAGE", "SHOW__ENTT_OWNER_USER_PAGE", "SHOW__ENTT_OWNER_ENT_PAGE", "SHOW__USER_ENTITLEMENTS", "SHOW__RULES_BY_APP_OWNER", "REVOKE__CERTIFY_ACCESS", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "###Zoran_Entitlement_Owner_Token###": { "title": "Entitlement Owner", "can": [ "SEARCH__ENTITLEMENTS_BY_ENTT_OWNER", "SHOW_OVERVIEW_PAGE", "SHOW__ENTITLEMENT", "SHOW__ENTITLEMENT_USERS", "SHOW__ENTT_OWNER_FILTER_OPTIONS", "SHOW__ENTT_OWNER_UNSCORED_ENTITLEMENTS", "SHOW__ENTT_OWNER_PAGE", "SHOW__ENTT_OWNER_USER_PAGE", "SHOW__ENTT_OWNER_ENT_PAGE", "SHOW__USER_ENTITLEMENTS", "SHOW__RULES_BY_ENTT_OWNER", "REVOKE__CERTIFY_ACCESS", "SHOW__USER", "SHOW__CERTIFICATIONS", "LOOKUP_USER", "SEARCH__ROLE_USERS", "SEARCH__ROLE_ENTITLEMENTS", "SEARCH__ROLE_JUSTIFICATIONS", "SHOW_ROLE_METADATA", "SHOW_ROLE_ATTRIBUTES", "WORKFLOW__REQUESTS", "WORKFLOW__TASKS", "WORKFLOW__TASK_APPROVE" ] }, "###Zoran_Executive_Token###": { "title": "Executive", "can": [ "SEARCH__USER", "SHOW__ASSIGNMENTS_STATS", "SHOW__COMPANY_PAGE", "SHOW__COMPANY_ENTITLEMENTS_DATA", "SHOW__CRITICAL_ENTITLEMENTS", "SHOW__ENTITLEMENT_AVG_GROUPS", "SHOW__USER_ENTITLEMENTS" ] }, "###Zoran_Supervisor_Token###": { "title": "Supervisor", "can": [ "SEARCH__USER", "SHOW_OVERVIEW_PAGE", "SHOW__SUPERVISOR_FILTER_OPTIONS", "SHOW__SUPERVISOR_PAGE", "SHOW__SUPERVISOR_ENTITLEMENT_USERS", "SHOW__SUPERVISOR_USER_ENTITLEMENTS", "SHOW__SUPERVISOR_UNSCORED_ENTITLEMENTS", "SEARCH__SUPERVISOR_USER_ENTITLEMENTS", "REVOKE__CERTIFY_ACCESS", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "###Zoran_User_Token###": { "title": "User", "can": [ "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS" ] }, "###Zoran_Service_Connector_Token###": { "title": "Service Connector", "can": [ "SERVICE_CONNECTOR", "SHOW__API_KEY_MGMT_PAGE", "SHOW__ENTITLEMENT", "SHOW__USER", "SHOW__CERTIFICATIONS", "SHOW__RULES", "REVOKE__CERTIFY_ACCESS" ] } } } } ] } -

Verify your changes by opening the permissions object as shown in step 1c.

-

-

Next, update the JAS container environment variables:

-

On the instance where Docker is running, create a backup of the

/opt/autoid/res/jas/docker-compose.ymlfile, and edit the variables in the environment section. For example, change the following variables:From:

- OIDC_ENABLED=False - GROUPS_ATTRIBUTE=_groups - OIDC_JWKS_URL=na

To:

- OIDC_ENABLED=True - GROUPS_ATTRIBUTE=groups - OIDC_JWKS_URL= <Same value as in the zoran-api. Refer to step 3 below>

The GROUPS_ATTRIBUTEvariable must match theOIDC_GROUPS_ATTRIBUTEvariable used in thedocker-compose.ymlfile. -

Remove the running JAS container and re-deploy:

docker stack rm jas docker stack deploy --with-registry-auth --compose-file /opt/autoid/res/jas/docker-compose.yml jas

-

-

Next, update the

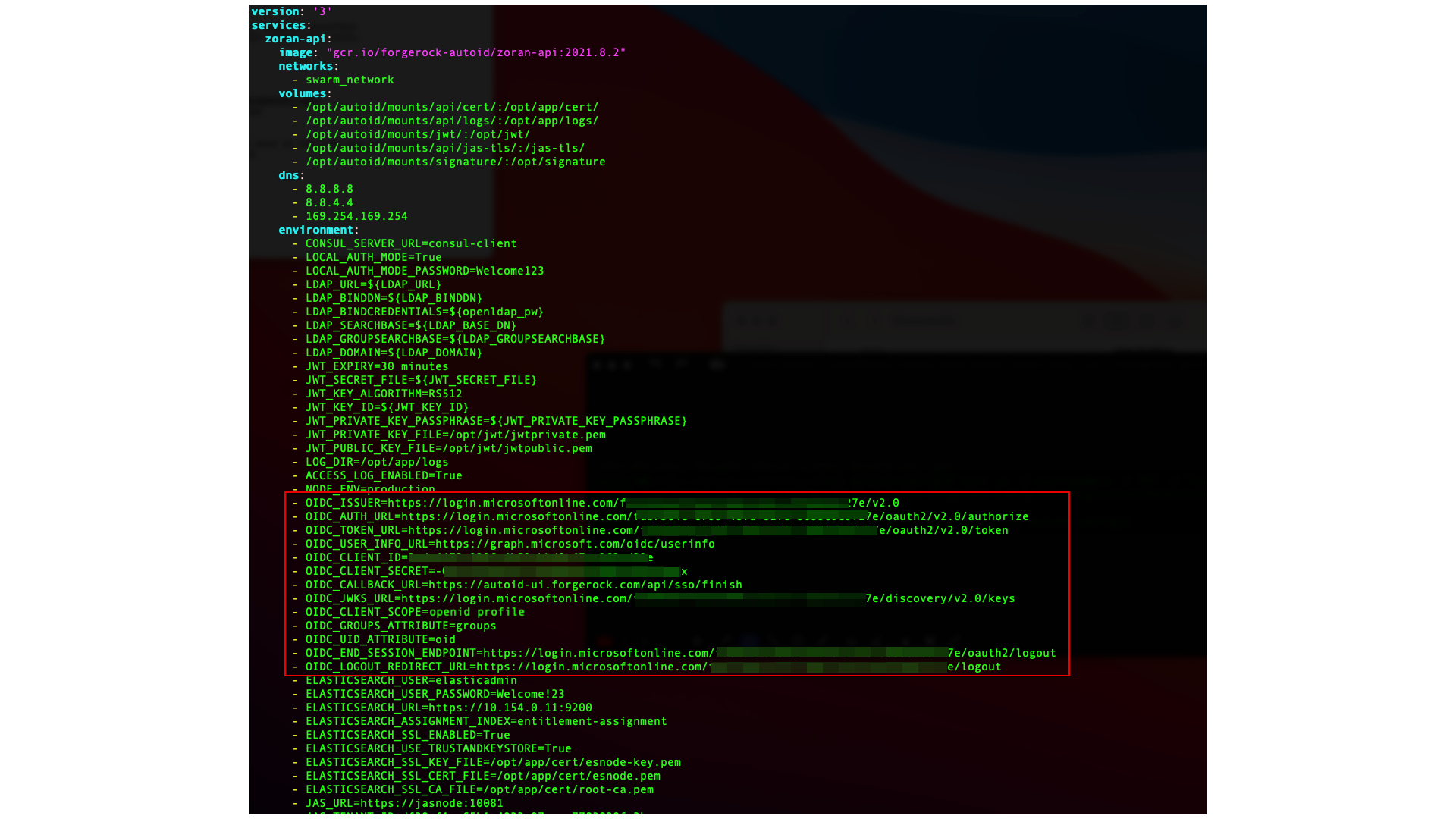

zoran-apicontainer environment variables:-

On the instance where Docker is running, create a backup of the

/opt/autoid/res/api/docker-compose.ymlfile, and edit the following variables in the file replacing the\$\{…\}placeholders:- OIDC_ISSUER=${OIDC_ISSUER} - OIDC_AUTH_URL=${OIDC_AUTH_URL} - OIDC_TOKEN_URL=${OIDC_TOKEN_URL} - OIDC_USER_INFO_URL=${OIDC_USER_INFO_URL} - OIDC_CLIENT_ID=${OIDC_CLIENT_ID} - OIDC_CLIENT_SECRET=${OIDC_CLIENT_SECRET} - OIDC_CALLBACK_URL=${OIDC_CALLBACK_URL} - OIDC_JWKS_URL=${OIDC_JWKS_URL} - OIDC_CLIENT_SCOPE=${OIDC_CLIENT_SCOPE} - OIDC_GROUPS_ATTRIBUTE=${OIDC_GROUPS_ATTRIBUTE} - OIDC_UID_ATTRIBUTE=${OIDC_UID_ATTRIBUTE} - OIDC_END_SESSION_ENDPOINT=${OIDC_END_SESSION_ENDPOINT} - OIDC_LOGOUT_REDIRECT_URL=${OIDC_LOGOUT_REDIRECT_URL}For example, Autonomous Identity displays something similar below (the example uses Asure links and object IDs):

If you are configuring SSO only for the login mode, set LOCAL_AUTH_MODEtofalse(for example, LOCAL_AUTH_MODE=false). If you keepLOCAL_AUTH_MODEtotrue, Autonomous Identity defaults toLocalAndSSO,which uses the OIDC and email options for login. -

Remove the running zoran-api Docker container and re-deploy:

docker stack rm api docker stack deploy --with-registry-auth --compose-file /opt/autoid/res/api/docker-compose.yml api

-

Restart the UI and Nginx Docker containers:

docker service update --force ui_zoran-ui docker service update --force nginx_nginx

-

-

Open the Autonomous Identity UI to verify the SSO login.

Setting the session duration

By default, the session duration is set to 30 minutes. You can change this value at installation by setting the JWT_EXPIRY property in the /autoid-config/vars.yml

file.

If you did not set the value at installation, you can make the change after installation by setting the JWT_EXPIRY property using the API service.

-

Log in to the Docker manager node.

-

Verify the

JWT_EXPIRYproperty.$ docker inspect api_zoran-api

-

Go to the API folder.

$ cd /opt/autoid/res/api

-

Edit the

docker-compose.ymlfile and update theJWT_EXPIRYproperty. TheJWT_EXPIRYproperty is set to minutes. -

Redeploy the Docker stack API.

$ docker stack deploy --with-registry-auth --compose-file docker-compose.yml api

If the command returns any errors, such as "image could not be accessed by the registry," then try the following command:

$ docker stack deploy --with-registry-auth --resolve-image changed \ --compose-file /opt/autoid/res/api/docker-compose.yml api -

Verify the new

JWT_EXPIRYproperty.$ docker inspect api_zoran-api

-

Log in to the Docker worker node.

-

Stop the worker node.

$ docker stop [container ID]

The Docker manager node re-initiates the worker node. Repeat this step on any other worker node.

Custom certificates

By default, Autonomous Identity uses self-signed certificates in its services. You can replace these self-signed certificates with a certificate issued by a Certificate Authority (CA). This section provides instructions on how to replace your self-signed certificates and also update your existing certificates when expired.

Pre-requisites

The following items were used to test the custom certificate procedures:

-

Private key file. The procedure uses a private key file privkey.pem.

-

Full trust chain. The procedure also uses a full trust certificate chain, fullchain.pem.

-

Keystore password. The procedure was tested using the keystore password is Acc#1234.

-

NGINX certificate. The NGINX certificate must support subject alternative name (SAN) for the following domains:

-

autoid-ui.<domain-name>

-

autoid-jas.<domain-name>

-

autoid-configuration-service.<domain-name>

-

autoid-kibana.<domain-name>

-

autoid-api.<domain-name>

-

-

Domain name. The domain name used in the procedure below is

certupdate.autoid.me.Change the value in various places to the domain name applicable to your deployment. -

Autonomous Identity version. The procedures were tested on Autonomous Identity versions 2021.8.5 and 2021.8.6.

Update certificates on Cassandra

-

Create the Java keystore and truststore files for the server using

keytool.The commands generate two JKS files:cassandra-keystore.jksandcassandra-truststore.jks.You need these files for configuring Cassandra and the Java API Service (JAS).openssl pkcs12 -export -in fullchain.pem -inkey privkey.pem -out server.p12 -name cassandranode keytool -importkeystore -deststorepass Acc#1234 -destkeypass Acc#1234 -destkeystore cassandra-keystore.jks -srckeystore server.p12 -srcstoretype PKCS12 -srcstorepass Acc#1234 -alias cassandranode keytool -importcert -keystore cassandra-truststore.jks -alias rootCa -file fullchain.pem -noprompt -keypass Acc#1234 -storepass Acc#1234

-

Create the client certificate. The client certificate is used by external clients, such as JAS and

cqlshto authenticate to Cassandra. In the following example, use the same client certificate for the Cassandra nodes to authenticate with each other.You can create a different certificate, if desired, using similar steps. # Create client.conf with following contents [ req ] distinguished_name = CA_DN prompt = no default_bits = 2048 [ CA_DN ] C = cc O = eng OU = cass CN = CA_CN # Create client key and CSR openssl req -newkey rsa:2048 -nodes -keyout client.key -out signing_request.csr -config client.conf # Create client certificate openssl x509 -req -CA fullchain.pem -CAkey privkey.pem -in signing_request.csr -out client.crt -days 3650 -CAcreateserial # Import client cert into a Java keystore for JAS openssl pkcs12 -export -in client.crt -inkey client.key -out client.p12 -name jas keytool -importkeystore -deststorepass Acc#1234 -destkeypass Acc#1234 -destkeystore client-keystore.jks -srckeystore client.p12 -srcstoretype PKCS12 -srcstorepass Acc#1234 -alias jas

-

View the files that are needed in later steps:

$ ls -1 . cassandra-keystore.jks cassandra-truststore.jks client.conf client.crt client.key client-keystore.jks client.p12 fullchain.pem fullchain.srl privkey.pem server.p12 signing_request.csr

-

Copy the following files to the

/opt/autoid/apache-cassandra-3.11.2/conf/certsdirectory on each Cassandra node:-

cassandra-keystore.jks

-

cassandra-truststore.jks

-

client-keystore.jks

-

-

Copy the following files to the

<autoid-user-home-dir>/.cassandradirectory on each Cassandra node:-

client.key

-

client.crt

-

fullchain.pem

-

-

Make the following edits in the

/opt/autoid/apache-cassandra-3.11.2/conf/cassandra.yamlfile on each Cassandra node:-

Change the keystore and truststore paths in the

server_encryption_optionsandclient_encryption_optionssections:keystore: /opt/autoid/apache-cassandra-3.11.2/conf/certs/client-keystore.jks truststore: /opt/autoid/apache-cassandra-3.11.2/conf/certs/cassandra-truststore.jks

-

-

Update the

<autoid-user-home-dir>/.cassandra/cqlshrcfile with the following edits:[authentication] username = zoranuser password = <password> [connection] hostname = <ip address> factory = cqlshlib.ssl.ssl_transport_factory [ssl] certfile = <autoid-user-home-dir>/.cassandra/fullchain.pem validate = false version = SSLv23 # Next 2 lines must be provided when require_client_auth = true in the cassandra.yaml file userkey = <autoid-user-home-dir>/.cassandra/client_key.key usercert = <autoid-user-home-dir>/.cassandra/client.crt

-

Restart Cassandra.

ps auxf | grep cassandra kill <pid> cd /opt/autoid/apache-cassandra-3.11.2/bin nohup ./cassandra >/opt/autoid/apache-cassandra-3.11.2/cassandra.out 2>&1 &

-

Make sure that Cassandra is running normally using

cqlsh.Use your server’s IP. :$ cqlsh --ssl Connected to Zoran Cluster at <server-ip>:9042. [cqlsh 5.0.1 | Cassandra 3.11.2 | CQL spec 3.4.4 | Native protocol v4] Use HELP for help. zoranuser@cqlsh> describe keyspaces; autonomous_iam system_auth system_distributed autoid_analytics autoid system system_traces autoid_base system_schema autoid_meta autoid_staging zoranuser@cqlsh>

Update certificates on MongoDB

-

Create the keystore and truststore using

keytool.openssl pkcs12 -export -in fullchain.pem -inkey privkey.pem -out server.p12 -name mongonode keytool -importkeystore -deststorepass Acc#1234 -destkeypass Acc#1234 -destkeystore mongo-client-keystore.jks -srckeystore server.p12 -srcstoretype PKCS12 -srcstorepass Acc#1234 -alias mongonode keytool -importcert -keystore mongo-server-truststore.jks -alias rootCa -file fullchain.pem -noprompt -keypass Acc#1234 -storepass Acc#1234

-

Create a new mongodb.pem file.

cat fullchain.pem privkey.pem > mongodb.pem

-

Download the

isg root x1root certificate from Lets Encrypt at https://letsencrypt.org/certs/isgrootx1.pem, and save it asrootCA.pem. -

Back up the MongoDB certificates.

cd /opt/autoid/mongo/certs/ mkdir backup mv mongodb.pem backup/ mv rootCA.pem backup/ mv mongo-*.jks backup

-

Copy the new certificates to the mongodb installation.

cp mongodb.pem /opt/autoid/mongo/certs/ cp rootCA.pem /opt/autoid/mongo/certs/

-

Restart Mongo DB.

sudo systemctl stop mongo-autoid sudo systemctl start mongo-autoid

-

Check for logs for errors in

/opt/autoid/mongo/mongo-autoid.log.The log may show connection errors until JAS has been updated and restarted. -

Add the hostname to the hosts file. For example, we are using:

autoid-mongo.certupdate.autoid.me. -

Check the MongoDB connection from the command line.

mongo --tls --host autoid-mongo.certupdate.autoid.me --tlsCAFile /opt/autoid/mongo/certs/rootCA.pem --tlsCertificateKeyFile /opt/autoid/mongo/certs/mongodb.pem --username mongoadmin

-

Back up and copy the new keystore and truststore to JAS.

cd /opt/autoid/mounts/jas mkdir backup mv mongo-*.jks backup cp mongo-server-truststore.jks /opt/autoid/mounts/jas cp mongo-client-keystore.jks /opt/autoid/mounts/jas

-

Update the JAS configuration. On each Docker manager and worker node, copy the following files to the

/opt/autoid/mount/jasdirectory.-

mongo-client-keystore.jks

-

mongo-server-truststore.jks

The certificates must exist on all Docker nodes (all managers and worker nodes).

-

-

On each Docker manager node, update

/opt/autoid/res/jas/docker-compose.ymlfile and set the Mongo keystore, truststore, and host, and add theextra_hostsline as follows:MONGO_KEYSTORE_PATH=/db-certs/mongo-client-keystore.jks MONGO_TRUSTSTORE_PATH=/db-certs/mongo-server-truststore.jks MONGO_HOST=autoid-mongo.certupdate.autoid.me extra_hosts: - "autoid-mongo.certupdate.autoid.me:<ip of mongodb host>"

-

Restart JAS.

docker stack rm jas nginx docker stack deploy -c /opt/autoid/res/jas/docker-compose.yml jas docker stack deploy -c /opt/autoid/res/nginx/docker-compose.yml nginx

-

Check JAS logs for errors.

docker service logs -f jas_jasnode

Update certificates on JAS

-

On each Docker manager and worker node, copy the following keystore and truststore files to

/opt/autoid/mounts/jasdirectory:-

client-keystore.jks

-

cassandra-truststore.jks

-

-

On each Docker manager node, update

/opt/autoid/res/jas/docker-compose.ymlwith the correct keystore and truststore paths:CASSANDRA_KEYSTORE_PATH=/db-certs/client-keystore.jks CASSANDRA_TRUSTSTORE_PATH=/db-certs/cassandra-truststore.jks

-

Redeploy the JAS server.

docker stack rm jas docker stack deploy jas -c /opt/autoid/res/jas/docker-compose.yml

-

Make sure JAS has no errors.

docker service logs -f jas_jasnode

Update the certificates on NGINX

-

Copy the following files to

/opt/autoid/mounts/nginx/cert/:-

privkey.pem

-

fullchain.pem

-

-

In the

/opt/autoid/mounts/nginx/nginx.conffile, update thessl_certificateandssl_certificate_keyproperties as follows:#SSL Settings ssl_certificate /etc/nginx/cert/fullchain.pem; ssl_certificate_key /etc/nginx/cert/privkey.pem;

-

Make sure that the domain names in the configuration files (

/opt/autoid/mounts/nginx/conf.d) matches the domain names used for certificate generation. -

Restart the Docker container.

docker stack rm nginx docker stack deploy -c /opt/autoid/res/nginx/docker-compose.yml nginx

Update certificates on Opensearch

-

Create a keystore and truststore using

keystore.openssl pkcs12 -export -in fullchain.pem -inkey privkey.pem -out server.p12 -name esnodekey keytool -importkeystore -deststorepass Acc#1234 -destkeypass Acc#1234 -destkeystore elastic-client-keystore.jks -srckeystore server.p12 -srcstoretype PKCS12 -srcstorepass Acc#1234 -alias esnodekey keytool -importcert -keystore elastic-server-truststore.jks -alias rootCa -file fullchain.pem -noprompt -keypass Acc#1234 -storepass Acc#1234

-

Create backups.

cd /opt/autoid/certs/elastic mkdir backup mv *.jks backup

-

Copy the new jks files,

fullchain.pem,privkey.pem, andchain.pemto/opt/autoid/certs/elastic. -

Also, copy the

fullchain.pem,privkey.pem, andchain.pemcertificates to/opt/autoid/elastic/Opensearch-1.3.13/config/. -

Update the following attributes in the

/opt/autoid/elastic/Opensearch-1.3.13/config/elasticsearch.ymlfile:Opensearch_security.ssl.transport.pemcert_filepath: fullchain.pem Opensearch_security.ssl.transport.pemkey_filepath: privkey.pem Opensearch_security.ssl.transport.pemtrustedcas_filepath: chain.pem Opensearch_security.ssl.http.pemcert_filepath: fullchain.pem Opensearch_security.ssl.http.pemkey_filepath: privkey.pem Opensearch_security.ssl.http.pemtrustedcas_filepath: chain.pem Opensearch_security.nodes_dn: - CN=elastic.certupdate.autoid.me

-

Restart Opensearch on all Opensearch nodes:

sudo systemctl restart elastic

-

Check

/opt/autoid/elastic/logs/elasticcluster.logfor errors. The file shows any certificate error until all nodes have been restarted. -

In the

/opt/autoid/res/jas/docker-compose.ymlfile, add the following:extra_hosts: - "elastic.certupdate.autoid.me:<ip of ES host>" update ES_HOST env var: ES_HOST=elastic.certupdate.autoid.me

-

Restart the JAS container:

docker stack rm jas docker stack rm nginx docker stack deploy -c /opt/autoid/res/jas/docker-compose.yml jas docker stack deploy -c /opt/autoid/res/nginx/docker-compose.yml nginx

-

Test the connection from the JAS container to Opensearch:

docker container exec -it <jas container id> sh apk add curl curl -v --cacert /elastic-certs/fullchain.pem -u elasticadmin https://elastic.certupdate.autoid.me:9200

-

Update the configuration in the JAS service using curl:

-

First log in using

curl.curl -X POST https://autoid-ui.certupdate.autoid.me:443/api/authentication/login -k -H 'Content-Type: application/json' -H 'X-TENANT_ID: <tenant_id >' -d '{ "username": "bob.rodgers@forgerock.com", "password": "Welcome123" }'

-

Pull in the current configuration using

curl.curl -k -H "Content-Type: application/json" -H 'X-TENANT-ID: <tenant_id>' -H 'Authorization: Bearer <bearer_token>' --request GET https://jasnode:10081/config/analytics_env_config

-

Modify value for

elasticsearch" to "host":elastic.certupdate.autoid.me``. -

Push the updated configuration:

curl -k -H "Content-Type: application/json" -H 'X-TENANT-ID: <tenant_id>' -H 'Authorization: Bearer <bearer_token>' --request PUT https://jasnode:10081/config/analytics_env_config -d '<updated json config>'

-

-

Update the environment variable in your

.bashrcon all Opensearch nodes and Spark nodes:ES_HOST=elastic.certupdate.autoid.me

Set entity definitions

Before you run analytics, you can add new attributes to the schema using the Autonomous Identity UI. Only administrators have access to this functionality.

-

Open a browser. If you set up your own url, use it for your login.

-

Log in as an admin user, specific to your system. For example:

test user: bob.rodgers@forgerock.com password: Welcome123

-

Click the Administration icon, indicated by the sprocket. Then, click Entity Definitions.

-

On the Entity Definitions page, click Applications to add any new attributes to the schema.

-

Click the Add attribute button.

-

In the Attribute Name field, enter the name of the new attribute. For example,

app_owner_id. -

In the Display Name field, enter a human-readable name of the attribute.

-

Select the attribute type. The options are:

Text,Boolean,Integer,Float,Date, andNumber. -

Click Searchable if you want the attribute to be indexed and available in your filters.

-

Click Save.

-

Click Save again to apply your attribute.

-

If you need to edit the attribute, click the Edit icon. If you need to remove the attribute, click the Remove icon. Note that attributes marked as

Requiredcannot be removed.

-

-

Click Assignments, and repeat the previous steps.

-

Click Entitlements, and repeat the previous steps.

-

Click Identities, and repeat the previous steps.

Click an example

-

Next, you must set the data sources. Refer to Set Data Sources.

Set data sources

After defining any new attributes, you must set your data sources, so that Autonomous Identity can import and ingest your data. Autonomous Identity supports three types of data source files:

-

Comma-separated values (CSV). A comma-separated values (CSV) file is a text file that uses a comma delimiter to separate each field value. Each line of text represents a record, consisting of one or more fields of data.

-

Java Database Connectivity (JDBC). Java Database Connectivity (JDBC) is a Java API that connects to and executes queries on databases, like Oracle, MySQL, PostgreSQL, and MSSQL.

-

Generic. Generic data sources are those data types from vendors that have neither CSV nor JDBC-based formats, such as JSON, or others.

Data source sync types

Autonomous Identity supports partial or incremental data ingestion for faster and efficient data uploads. The four types are full, incremental, enrichment, and delete, and are summarized below:

| Sync Type | Data Source | In AutoID | Result |

|---|---|---|---|

Full |

The records from the entity represents the full set of all records that you intend to ingest. For example:

|

An existing table may have the following:

|

After the ingest job runs, all existing records are fully replaced:

|

Incremental |

The records from the entity represents the records that you want to add to AutoID. For example:

|

An existing table may have the following:

|

After the ingest job runs, the records in the data source are added to the existing records:

|

Enrichment |

The records from the entity represents changes to existing data, such as adding a department attribute. No new objects are added, but here you want to edit or "patch" in new attributes to existing records:

|

An existing table may have the following:

|

After the ingest job runs, the attributes in the data source is added to the existing records. If attributes exist, they get updated. If attributes do not exist, they do not get updated, but you can add also attributes using mappings:

|

Delete |

The records from the entity represent records to be deleted, identified by the primary key:

|

An existing table may have the following:

|

After the ingest job completes, the records with the primary key are deleted:

|

CSV data sources

The following are general tips for setting up your comma-separated-values (CSV) files:

-

Make sure you have access to your CSV files:

applications.csv,assignments.csv,entitlements.csv, andidentities.csv. -

You can review the Data Preparation chapter for more tips on setting up your files.

-

Log in to the Autonomous Identity UI as an administrator.

-

On the Autonomous Identity UI, click the Administration > Data Sources > Add data source > CSV > Next.

-

In the CSV Details dialog box, enter a human-readable name for your CSV file.

-

Select the Sync Type. The options are as follows:

-

Full. Runs a full replacement of data if any.

-

Incremental. Adds new records to existing data.

-

Enrichment. Adds new attributes to existing data records.

-

Delete. Delete any existing data objects.

-

-

Click Add Object, and then select the data source file.

-

Click Applications, enter the path to the

application.csvfile. For example,/data/input/applications.csv. -

Click Assignments, enter the path to the

assignments.csvfile. For example,/data/input/assignments.csv. -

Click Entitlements, enter the path to the

entitlements.csvfile. For example,/data/input/entitlements.csv. -

Click Identities, enter the path to the

identities.csvfile. For example,/data/input/identities.csv.

-

-

Click Save.

Click an example

-

Repeat the previous steps to add more CSV data source files if needed.

-

Next, you must set the attribute mappings. This is a critical step to ensure a successful analytics run. Refer to Set Attribute Mappings.

JDBC Data Sources

The following are general tips for setting up your JDBC data sources (Oracle, MySQL, PostgreSQL, and MSSQL):

-

When configuring your JDBC database, make sure you have properly "whitelisted" the IP addresses that can access the server. For example, you should include your local

autoidinstance and other remote systems, such as a local laptop. -

Make sure you have configured your database tables on your system:

applications,assignments,entitlements, andidentities. -

Make sure to make note of the IP address of your database server.

The following procedure assumes that you have set up Autonomous Identity with connectivity to a database:

-

Log in to the Autonomous Identity UI as an administrator.

-

On the Autonomous Identity UI, click the Administration icon > Data Sources > Add data source > JDBC > Next.

-

In the JDBC Details dialog box, enter a human-readable name for your JDBC files.

-

Select the Sync Type. The options are as follows:

-

Full. Runs a full replacement of data if any.

-

Incremental. Adds new records to existing data.

-

Enrichment. Adds new attributes to existing data records.

-

Delete. Delete any existing data objects.

-

-

For Connection Settings, enter the following:

-

Database Username. Enter a user name for the database user that connects to the data source.

-

Database Password. Enter a password for the database user.

-

Database Driver. Select the database driver. Options are:

-

Oracle -

Mysql -

Postgresql -

Mssqlserver

-

-

Database Connect String. Enter the database connection URI to the data source. For example,

jdbc:<Database Type>://<Database IP Address>/<Database Acct Name>, where:-

jdbcis the SQL driver type -

<Database Type>is the database management system type. Options are:oracle,mysql,postgresql, orsqlserver. -

<Database IP Address>is the database IP address -

<Database Acct Name>is the database account name created in the database instance.

-

-

For example:

* Oracle: jdbc:oracle://35.180.130.161/autoid

* MySQL: jdbc:mysql://35.180.130.161/autoid

* PostgreSQL: jdbc:postgresql://35.180.130.161/autoid

* MSSQL: jdbc:sqlserver://35.180.130.161;database=autoid

| There are other properties that you can use for each JDBC connection URI. Refer to the respective documentation for more information. |

-

Click Add Object, and then select the data source file:

-

Click Applications, enter the path to the

APPLICATIONStable. For example, using PostgreSQL,SELECT * FROM public.applications, wherepublicis the PostgreSQL schema. Make sure to use your company’s database schema. -

Click Assignments, enter the path to the

ASSIGNMENTStable. For example,SELECT * FROM public.assignments. -

Click Entitlements, enter the path to the

ENTITLEMENTStable. For example,SELECT * FROM public.entitlements. -

Click Identities, enter the path to the

IDENTITIEStable. For example,SELECT * FROM public.identities.

-

-

Click Save.

Click an example

-

If you are having connection issues, check the Java API Service (JAS) logs to verify the connection failure:

$ docker service logs -f jas_jasnode

The following entry can appear, which possibly indicates the whitelist was not properly set on the database server:

jas_jasnode.1.5gauc33o1nnn@autonomous-base-dev | java.lang.RuntiimeException: org.postgresql.util.PSQLException: The connection attempt failed. . . . jas_jasnode.1.5gauc33o1nnn@autonomous-base-dev | Caused by: org.postgresql.util.PSQLException: The connection attempt failed. . . . Caused by: java.net.SocketTimeoutExceptiion: connect timed out

-

Next, you must set the attribute mappings. This is a critical step to ensure a successful analytics run. Refer to Set Attribute Mappings.

Generic Data Sources

The following are general tips for setting up your generic data sources:

-

Make sure you have configured data source files:

applications,assignments,entitlements, andidentities. -

Make sure you have the metadata (e.g., URL, prefix) required to access your generic data source files.

-

Log in to the Autonomous Identity UI as an administrator.

-

On the Autonomous Identity UI, click the Administration icon > Data Sources > Add data source > Generic > Next.

-

In the Generic Details dialog box, enter a human-readable name for your generic files.

-

Select the Sync Type. The options are as follows:

-

Full. Runs a full replacement of data if any.

-

Incremental. Adds new records to existing data.

-

Enrichment. Adds new attributes to existing data records.

-

Delete. Delete any existing data objects.

-

-

For Connection Settings, enter the settings to connect to your database server. For example:

{ "username": "admin", "password": "Password123", "connectURL": "http://identity.generic.com" } -

Click Add Object, and then select the data source file:

-

Click Applications, enter the metadata for applications file. For example:

{ "appMetaUrl": "http://identity.generic.com?q=applications&appName=Ac*", "prefix": "autoid" } -

Click Assignments, enter the metadata for the assignments file. For example:

{ "appMetaUrl": "http://identity.generic.com?q=assignments&userId=*", "prefix": "autoid" } -

Click Entitlements, enter the metadata for the entitlements file. For example:

{ "appMetaUrl": "http://identity.generic.com?q=entitlements&appId=*", "prefix": "autoid" } -

Click Identities, enter the metadata for the identities file. For example:

{ "appMetaUrl": "http://identity.generic.com?q=identities&userId=*", "prefix": "autoid" }

-

-

Click Save.

Click an example

-

Repeat the previous steps to add more JDBC data source files if necessary.

-

Next, you must set the attribute mappings. This is a critical step to ensure a successful analytics run. Refer to Set Attribute Mappings.

Set attribute mappings

After setting your data sources for your CSV files, you must map any attributes specific to each of your data files to the Autonomous Identity schema.

-

On the Autonomous Identity UI, click the Administration icon > Data Sources.

-

Click the specific data source file to map.

-

Click Applications to set up its attribute mappings.

-

Click Discover Schema to view the current attributes in the schema, and then click Save.

-

Click Edit mapping to set up attribute mappings. On the Choose an attribute menu, select the corresponding attribute to map to the required attributes. Repeat for each attribute.

-

Click Save.

-

-

Click Assignments and repeat the previous steps.

-

Click Entitlements and repeat the previous steps.

-

Click Identities and repeat the previous steps.

-

Repeat the procedures for each data source file that you want to map.

Click an example

-

Optional. Next, adjust the analytics thresholds. Refer to Set Analytic Thresholds.

Set analytics thresholds

The Autonomous Identity UI now supports the configuration of the analytics threshold values to calculate confidence scores, predications, and recommendations.

| In general, there is little reason to change the default threshold values. If you do edit these values, be aware that incorrect threshold values can negatively affect your analytics results. |

There are six types of threshold settings that administrators can edit:

-

Confidence Score Thresholds. Confidence score thresholds lets you define High, Medium, and Low confidence score ranges. Autonomous Identity computes a confidence score for each access assignment based on its machine learning algorithm. The properties are:

Table 1: Confidence Score Thresholds Settings Default Description Confidence Score Thresholds

-

High: 0.75 or 75%

-

Medium: 0.35 or 35%

-

Confidence scores from 75 to 100 are set to High.

-

Confidence scores from 35 to 74 are set to Medium, and scores from 0 to 34 are set to Low.

-

-

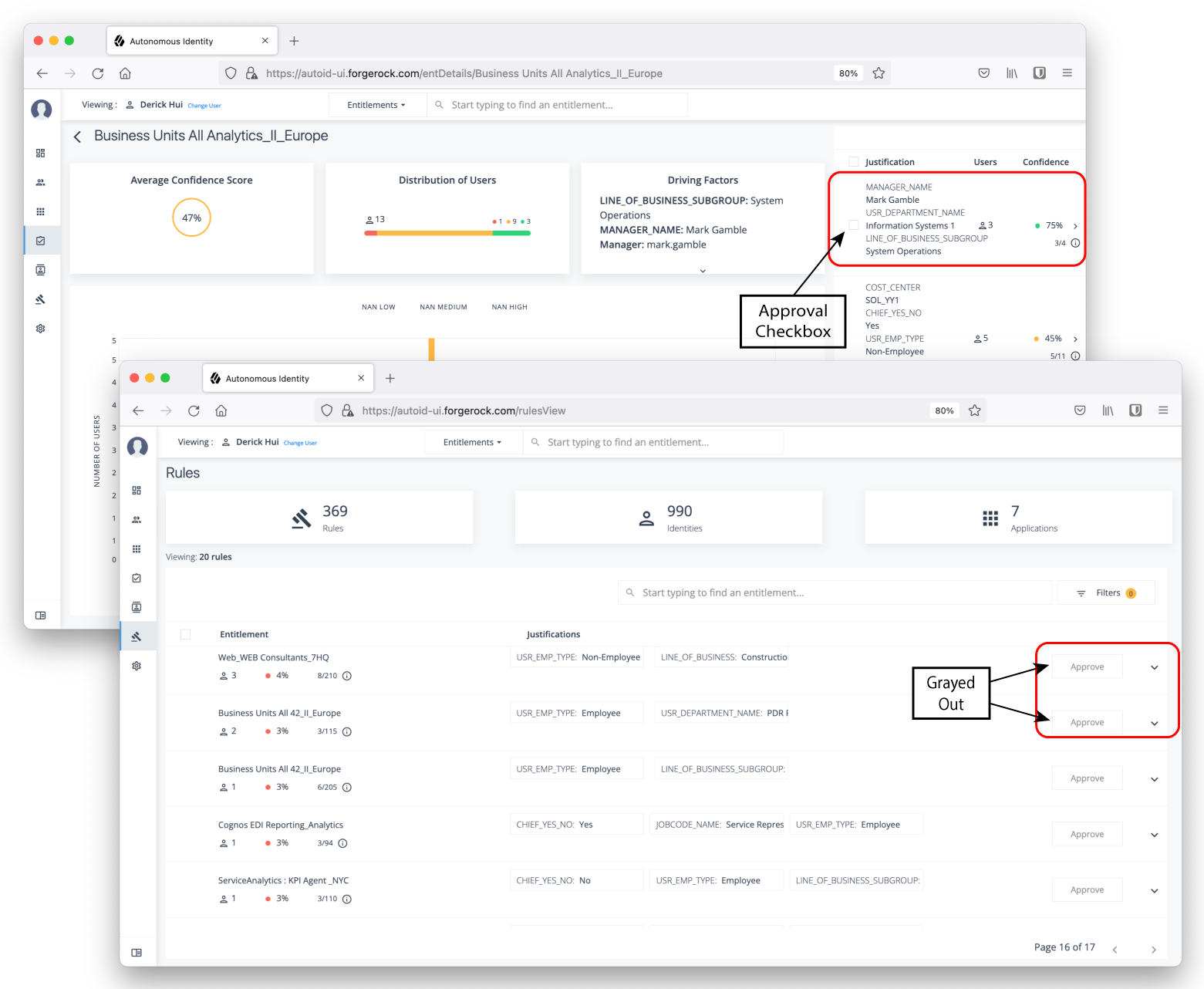

Automation Score Threshold. Automation score threshold is a UI setting determining if an approval button and checkbox appears before a justification rule on the Entitlement Details and Rules pages.

Click to display an example image of where the approval button and checkbox are located

Table 2: Automation Score Threshold Settings Default Description Automation Score Threshold

0.5 or 50%

Specifies if any confidence score less than 50% will not give the user the option to approve the justification or rule.

-

Role Discovery Settings. Role discovery settings determine the key factors for roles for inclusion in the role mining process. Roles are a collection of entitlements and their associated justifications and access patterns. This collection is produced from the output training rules.

Table 3: Role Discovery Settings Default Description Confidence Threshold

0.75 or 75%

Specifies the minimum rule confidence required for inclusion in the role mining process.

Entitlements Threshold

1

Specifies the minimum number of entitlements a role may contain. The Autonomous Identity role mining process does not produce candidate roles below this threshold value. For example, if the threshold is 2, there are no roles that contain only one entitlement.

Minimum Role Membership

30

Specifies the granularity of the role through its membership. For example, the default is 30, which means that no role produced can have fewer than 30 members.

Remove Redundant Access Patterns

Enabled

Specifies a pruning process that removes redundant patterns when more general patterns can be retained.

For example, if a user is an Employee AND in the department, Finance, they receive the Excel access entitlement. However, there may be a more general rule that provisions Excel access by simply being an Employee.

-

[ ENT_Excel | DEPT_Finance, EMP_TYPE_Employee]

-

[ ENT_Excel | EMP_TYPE_Employee]

As a result, Autonomous Identity removes the first pattern and retains the latter more general rule.

-

-

Training Settings. Administrators can set the thresholds for the AI/ML training process, specifically the stemming process and general training properties.

Stemming: During the training process, Autonomous Identity generates rules by searching the data for if-then patterns that have a parent-child relationship in their composition. These if-then patterns are also known as antecedent-consequent relationships, which means rule-entitlement for Autonomous Identity.

Stemming is a process to remove any redundant final association rules output. For a rule to be stemmed, it must match the following criteria:

-

Rule B consequent must match Rule A consequent.

-

Rule B antecedent must be a superset of Rule A antecedent.

-

Rule B confidence score must be within a given range +/- (offset) of Rule A confidence score.

For example, the Payroll Report entitlement has two rules, each that involves the Finance department. All Dublin employees in the Finance department also get the entitlement. Stemming prunes the second rule (B) and retains the more general first rule (A).

ID Consequent (ENT) Antecedent (Rule) Confidence Score A

Payroll report

[Finance]

90%

B

Payroll report

[Dublin,Finance]

89%

Table 4: Training Settings Type Settings Default Description Stemming Predictions

Determines stemming, or pruning, properties.

Stemming Enabled

Enabled

Specifies if stemming occurs or not. Do not disable this feature.

Stemming Offset

0.02

Specifies the confidence range (plus or minus) of one rule to another rule.

Stemming Feature Size

3

Specifies the "up-to" maximum antecedent/justification size that may be the size priority of stemming. Because we want to retain the smallest rules possible, we start by prioritizing rules with an antecedent size of 1. We can then increase the antecedent size, iteratively, until we reach the Stemming Feature Size setting.

Batch Size

15000

Specifies the number of samples viewed is indicated by the batch size. An epoch is defined as the number of passes for a model to iterate through the entire dataset once and update its learning algorithm. To process the entire epoch, the model views a few samples at a time in batches.

Base Minimum Group

2

Specifies the minimum support value used in training. It is referred as Base, because it is used to find the minimum support value for the initial chunk of training.

Minimum Confidence

0.02

Specifies the lowest acceptable confidence score to be included in the entitlement-rule combination.

Number of Partitions

200

Specifies the number of partitions in a Spark configuration. A partition is a smaller chunk of a large dataset. Spark can run one concurrent task in a single partition. As a rule of thumb, the more partitions you have, the more work can be distributed among Spark worker nodes. In this case, small chunks of data are processed by each worker. In the case of fewer partitions, Autonomous Identity can process larger chunks of data.

-

-

Predictions Settings. Administrators can set the thresholds for the recommendation and as-is prediction processes.

Table 5: Predictions Settings Type Settings Default Description Recommendation Settings

Properties for setting the recommendation predictions settings.

Threshold

0.75

Specifies the confidence score threshold to be considered for recommendation.

Batch Size

1000

Specifies the number of rules and user entitlements processed at one time.

Minimum Frequency

0

Specifies the minimum frequency for a rule to appear for consideration as a recommendation. During the first training stage, Autonomous Identity models the frequent itemsets that appear in the HR attributes-only of each user. Only rules that appear a minimum of N times are considered. The value of N is the Minimum Frequency.

As-Is Prediction Settings

Properties for setting the as-is predictions settings.

Batch Size

15000

Specifies the number of rules and user entitlements processed at one time.

Confidence Threshold

0

Specifies the confidence score threshold to be considered for an as-is prediction.

Minimum Rule Length

1

Specifies the minimum justification size for rules to be considered in predictions. You only would increase this property if you don’t want a single rule overriding more specific or granular rules when determining access. For example, if the minimum rule length is 2, Autonomous Identity only uses the rule DEPT_Finance, JOB_TITLE_Account_II. However, if the default is kept at 1, the second and shorter rule can include a broader number of entitlement assignments.

-

[ ENT_AccountingSoftware | DEPT_Finance, JOB_TITLE_Account_II]

-

[ ENT_AccountingSoftware | EMP_TYPE_Employee]

Maximum Rule Length

10

Specifies the maximum justification size for rules to be considered in predictions. This property is a guardrail to keep rules that contain extremely large or complex justifications out of the prediction set.

Prediction Confidence Window

0.05

Specifies the range of acceptable values for a prediction confidence score. Rules with confidence scores outside the prediction confidence window range are filtered out. A confidence window is determined from the values set in the configuration file: max=maxConf, min=maxConf - pred_conf_window.

-

-

Analytics Spark Job Config. Administrators can adjust the Apache Spark job configuration if needed.

Table 6: Analytics Spark Job Configuration Settings Default Description Driver Memory

2G

Specifies the amount of memory for the driver process.

Driver Cores

3

Specifies the number of cores to use for the driver process in cluster mode.

Executor Memory

3G

Specifies the amount of memory to use per executor process.

Executor Cores

6

Specifies the number of executor cores per worker node.

Configure Analytic Settings

-

Log in to the Autonomous Identity UI as an administrator.

-

On the Autonomous Identity UI, click Administration.

-

Click Analytics Settings.

-

Under Confidence Score Thresholds, click Edit next to the High threshold value, and then enter a new value. Click Save. Repeat for the Medium threshold value.

-

Under Automation Score Threshold, click Edit next to a threshold value, and then enter a new value.

-

Under Role Discovery Setting, click Edit next to a threshold value, and then enter a new value.

-

Under Training Settings, click Edit next to a threshold value, and then enter a new value.

-

Under Prediction Settings, click Edit next to a threshold value, and then enter a new value.

-

Under Analytics Spark Job Config, click Edit next to a threshold value, and then enter a new value.

-

Click Save.

Click an example

-

Next, you can run the analytics. Refer Run Analytics.

Run analytics

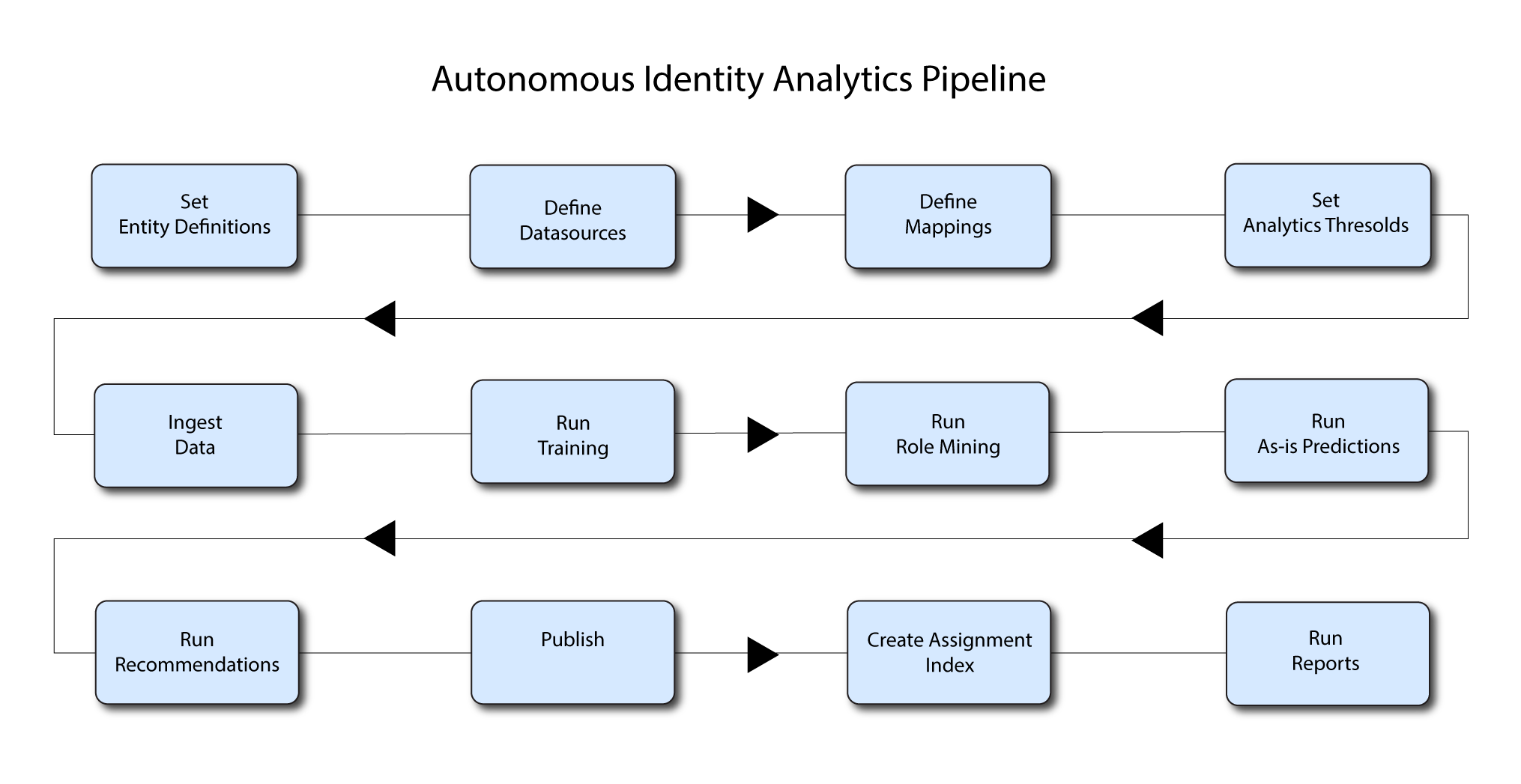

The Analytics pipeline is the heart of Autonomous Identity. The pipeline analyzes, calculates, and determines the association rules, confidence scores, predictions, and recommendations for assigning entitlements and roles to the users.

The analytics pipeline is an intensive processing operation that can take time depending on your dataset and configuration. To ensure an accurate analysis, the data needs to be as complete as possible with little or no null values. Once you have prepared the data, you must run a series of analytics jobs to ensure an accurate rendering of the entitlements and confidence scores.

Pre-analytics tasks

Before running the analytics, you must run the following pre-analytics steps to set up your datasets and schema using the Autonomous Identity UI:

-

Add attributes to the schema. For more information, refer to Set Entity Definitions.

-

Define your datasources. Autonomous Identity supports different file types for ingestion: CSV, JDBC, and generic. You can enter more than one data source file, specifying the dataset location on your target machine. For more information, refer to Set Data Sources.

-

Define attribute mappings between your data and the schema. For more information, refer to Set Attribute Mappings.

-

Configure your analytics threshold values. For more information, refer to Set Analytics Thresholds.

About the analytics process

Once you have finished the pre-analytics steps, you can start the analytics. The general analytics process is outlined as follows:

-

Ingest. The ingestion job pulls in data into the system. You can ingest CSV, JDBC, and generic JSON files depending on your system.

-

Training. The training job creates the association rules for each user-assigned entitlement. This is a somewhat intensive operation as the analytics generates a million or more association rules. Once the association rules have been determined, they are applied to user-assigned entitlements.

-

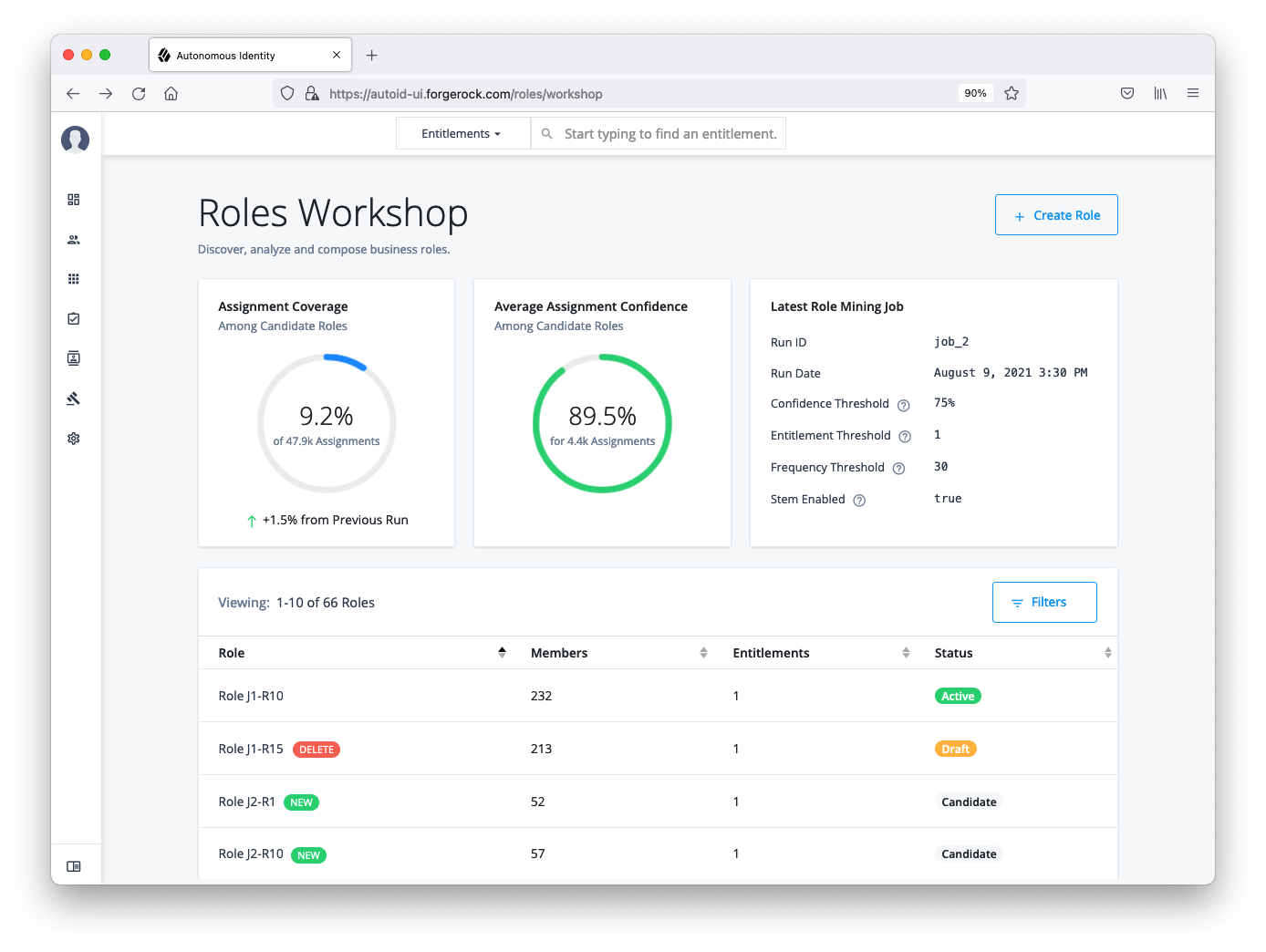

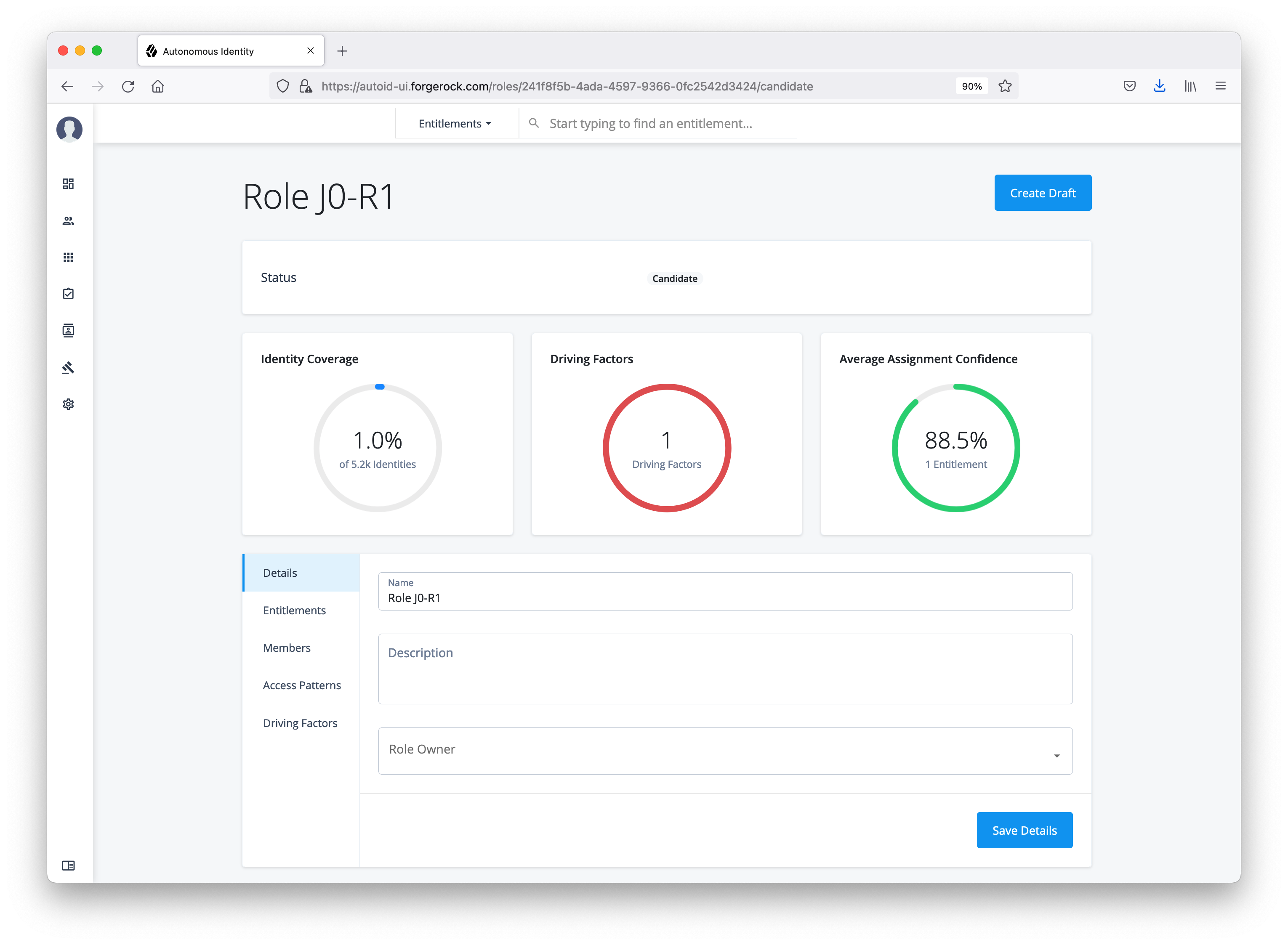

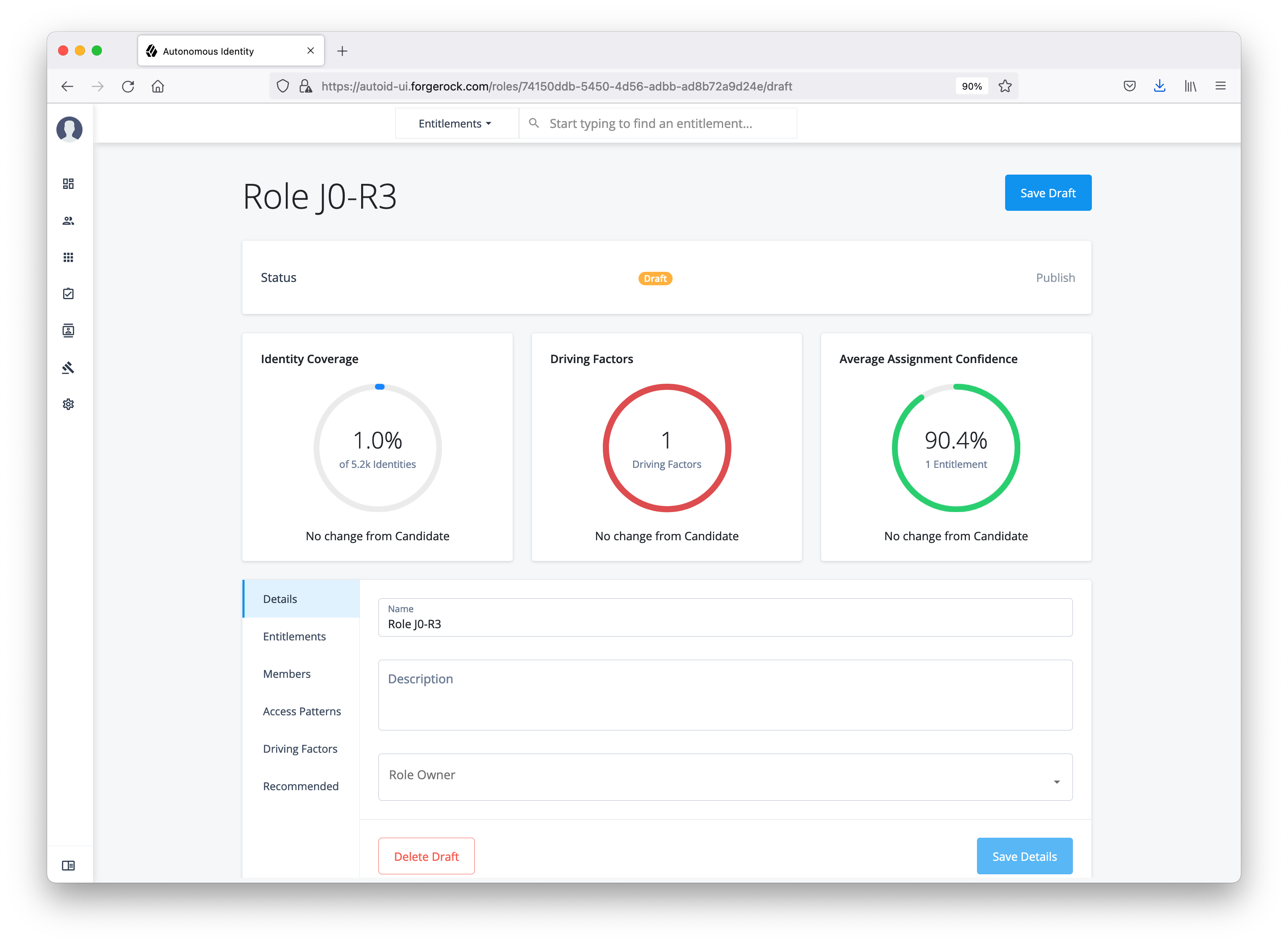

Role Mining. The role mining job analyzes all existing entitlements and analyzes candidate configurations for new roles.

-

Predict As-Is. The predict as-is job determines the current confidence scores for all assigned entitlements.

-

Predict Recommendation. The predict recommendations job looks at all users who do not have a specific entitlement, but are good candidates to receive the entitlement based on their user attribute data.

-

Publish. The publish run publishes the data to the backend Cassandra or MongoDB databases.

-

Create Assignment Index. The create-assignment-index creates the Autonomous Identity index.

-

Run Reports. You can run the create-assignment-index-report (report on index creation), anomaly (report on anomalous entitlement assignments), insight (summary of the analytics jobs), and audit (report on change of data).

|

The analytics pipeline requires that DNS properly resolve the hostname before its start. Make sure to set it on your DNS server or locally in your |

The following sections present the steps to run the analytics pipeline using the Jobs UI.

|

You can continue to use the command-line to run each step of the analytics pipeline. For instructions, refer to Run analytics on the command Line. |

Ingest the data files

At this point, you should have set your data sources and configured your attribute mappings. You can now run the initial analytics job to import the data into the Cassandra or MongoDB database.

Run ingest using the UI:

-

On the Autonomous Identity UI, click the Administration link, and then click Jobs.

-

On the Jobs page, click New Job. Autonomous Identity displays a job schedule with each job in the analytics pipeline.

-

Click Ingest, and then click Next.

-

On the New Ingest Job box, enter the name of the job, and then select the data source file.

-