Configuration

This guide shows you how to configure DS server features.

ForgeRock® Identity Platform serves as the basis for our simple and comprehensive Identity and Access Management solution. We help our customers deepen their relationships with their customers, and improve the productivity and connectivity of their employees and partners. For more information about ForgeRock and about the platform, see https://www.forgerock.com.

The ForgeRock® Common REST API works across the platform to provide common ways to access web resources and collections of resources.

HTTP access

Set the HTTP port

The following steps demonstrate how to set up an HTTP port if none was configured at setup time with the --httpPort option:

- Create an HTTP connection handler:

      $ dsconfig \
       create-connection-handler \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name HTTP \
       --type http \
       --set enabled:true \
       --set listen-port:8080 \
       --no-prompt \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin

- Enable an HTTP access log.

  - The following command enables JSON-based HTTP access logging:

        $ dsconfig \
         set-log-publisher-prop \
         --hostname localhost \
         --port 4444 \
         --bindDN uid=admin \
         --bindPassword password \
         --publisher-name "Json File-Based HTTP Access Logger" \
         --set enabled:true \
         --no-prompt \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin

  - The following command enables HTTP access logging:

        $ dsconfig \
         set-log-publisher-prop \
         --hostname localhost \
         --port 4444 \
         --bindDN uid=admin \
         --bindPassword password \
         --publisher-name "File-Based HTTP Access Logger" \
         --set enabled:true \
         --no-prompt \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin

- After you set up an HTTP port, enable an HTTP endpoint.

  For details, see Configure HTTP user APIs or Use administrative APIs.
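To confirm the port that a connection handler listens on, you can read the property back. The following is a minimal sketch, assuming the same administrative connection settings as the examples above; the function name show_listen_port is hypothetical:

```shell
# Print the listen-port property of a connection handler.
# $1: handler name, such as HTTP
# Assumption: the connection options match the other examples in this guide.
show_listen_port() {
  dsconfig \
   get-connection-handler-prop \
   --hostname localhost \
   --port 4444 \
   --bindDN uid=admin \
   --bindPassword password \
   --handler-name "$1" \
   --property listen-port \
   --usePkcs12TrustStore /path/to/opendj/config/keystore \
   --trustStorePassword:file /path/to/opendj/config/keystore.pin \
   --no-prompt
}
```

For example, run show_listen_port HTTP after creating the handler.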

Set the HTTPS port

At setup time, use the --httpsPort option.

Later, follow these steps to set up an HTTPS port:

- Create an HTTPS connection handler.

  The following example sets the port to 8443 and uses the default server certificate:

      $ dsconfig \
       create-connection-handler \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name HTTPS \
       --type http \
       --set enabled:true \
       --set listen-port:8443 \
       --set use-ssl:true \
       --set key-manager-provider:PKCS12 \
       --set trust-manager-provider:"JVM Trust Manager" \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

  If the key manager provider has multiple key pairs that DS could use for TLS, where the secret key was generated with the same key algorithm, such as EC or RSA, you can specify which key pairs to use with the --set ssl-cert-nickname:server-cert option. The server-cert is the certificate alias of the key pair. This option is not necessary if there is only one server key pair, or if each secret key was generated with a different key algorithm.

- Enable the HTTP access log.

  - The following command enables JSON-based HTTP access logging:

        $ dsconfig \
         set-log-publisher-prop \
         --hostname localhost \
         --port 4444 \
         --bindDN uid=admin \
         --bindPassword password \
         --publisher-name "Json File-Based HTTP Access Logger" \
         --set enabled:true \
         --no-prompt \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin

  - The following command enables HTTP access logging:

        $ dsconfig \
         set-log-publisher-prop \
         --hostname localhost \
         --port 4444 \
         --bindDN uid=admin \
         --bindPassword password \
         --publisher-name "File-Based HTTP Access Logger" \
         --set enabled:true \
         --no-prompt \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin

- If the deployment requires SSL client authentication, set the properties ssl-client-auth-policy and trust-manager-provider appropriately.

- After you set up an HTTPS port, enable an HTTP endpoint.

  For details, see Configure HTTP user APIs, or Use administrative APIs.

Configure HTTP user APIs

The way directory data appears to client applications is configurable. You configure a Rest2ldap endpoint for each HTTP API to user data.

A Rest2ldap mapping file defines how JSON resources map to LDAP entries.

The default mapping file is /path/to/opendj/config/rest2ldap/endpoints/api/example-v1.json.

The default mapping works with Example.com data from the evaluation setup profile.

Edit or add mapping files for your own APIs. For details, see REST to LDAP reference.

If you have set up a directory server with the ds-evaluation profile, you can skip the first two steps:

- If necessary, change the properties of the default Rest2ldap endpoint, or create a new endpoint.

  The default Rest2ldap HTTP endpoint is named /api after its base-path. The base-path must be the same as the name, and is read-only after creation. By default, the /api endpoint requires authentication.

  The following example enables the default /api endpoint:

      $ dsconfig \
       set-http-endpoint-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --endpoint-name /api \
       --set authorization-mechanism:"HTTP Basic" \
       --set config-directory:config/rest2ldap/endpoints/api \
       --set enabled:true \
       --no-prompt \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin

  Alternatively, you can create another Rest2ldap endpoint to expose a different HTTP API, or to publish data under an alternative base path, such as /rest:

      $ dsconfig \
       create-http-endpoint \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --endpoint-name /rest \
       --type rest2ldap-endpoint \
       --set authorization-mechanism:"HTTP Basic" \
       --set config-directory:config/rest2ldap/endpoints/api \
       --set enabled:true \
       --no-prompt \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin

- If necessary, adjust the endpoint configuration to use an alternative HTTP authorization mechanism.

  By default, the Rest2ldap endpoint maps HTTP Basic authentication to LDAP authentication. Change the authorization-mechanism setting as necessary. For details, see Configure HTTP Authorization.

- Try reading a resource.

  The following example generates the CA certificate in PEM format from the server deployment ID and password. It uses the CA certificate to trust the server certificate. A CA certificate is only necessary if the CA is not well-known:

      $ dskeymgr \
       export-ca-cert \
       --deploymentId $DEPLOYMENT_ID \
       --deploymentIdPassword password \
       --outputFile ca-cert.pem

      $ curl \
       --cacert ca-cert.pem \
       --user bjensen:hifalutin \
       https://localhost:8443/api/users/bjensen?_prettyPrint=true
      {
        "_id" : "bjensen",
        "_rev" : "<revision>",
        "_schema" : "frapi:opendj:rest2ldap:posixUser:1.0",
        "userName" : "bjensen@example.com",
        "displayName" : [ "Barbara Jensen", "Babs Jensen" ],
        "name" : {
          "givenName" : "Barbara",
          "familyName" : "Jensen"
        },
        "description" : "Original description",
        "manager" : {
          "_id" : "trigden",
          "_rev" : "<revision>"
        },
        "groups" : [ {
          "_id" : "Carpoolers",
          "_rev" : "<revision>"
        } ],
        "contactInformation" : {
          "telephoneNumber" : "+1 408 555 1862",
          "emailAddress" : "bjensen@example.com"
        },
        "uidNumber" : 1076,
        "gidNumber" : 1000,
        "homeDirectory" : "/home/bjensen"
      }

Configure HTTP authorization

HTTP authorization mechanisms map HTTP credentials to LDAP credentials.

Multiple HTTP authorization mechanisms can be enabled simultaneously. You can assign different mechanisms to Rest2ldap endpoints and to the Admin endpoint.

By default, these HTTP authorization mechanisms are supported:

- HTTP Anonymous

-

Process anonymous HTTP requests, optionally binding with a specified DN.

If no bind DN is specified (default), anonymous LDAP requests are used.

This mechanism is enabled by default.

- HTTP Basic (enabled by default)

-

Process HTTP Basic authentication requests by mapping the HTTP Basic identity to a user’s directory account.

By default, the exact match identity mapper with its default configuration is used to map the HTTP Basic user name to an LDAP

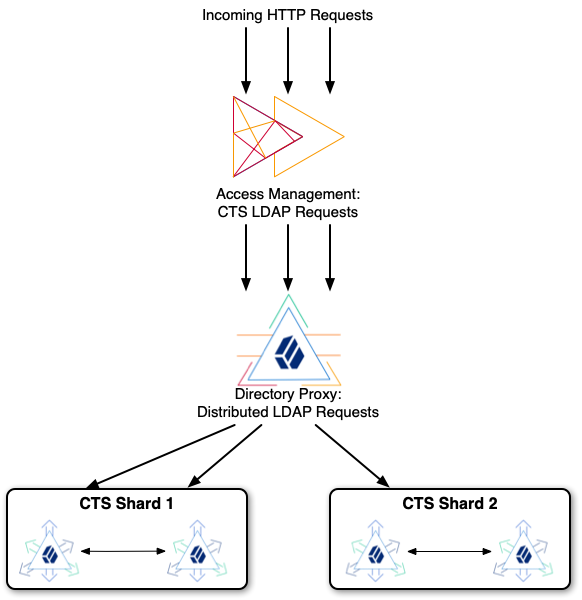

uid. The DS server then searches in all local public naming contexts to find the user’s entry based in theuidvalue. For details, see Identity Mappers. - HTTP OAuth2 CTS

-

Process OAuth 2.0 requests as a resource server, acting as an AM Core Token Service (CTS) store.

When the client bearing an OAuth 2.0 access token presents the token to access the JSON resource, the server tries to resolve the access token against the CTS data that it serves for AM. If the access token resolves correctly (is found in the CTS data and has not expired), the DS server extracts the user identity and OAuth 2.0 scopes. If the required scopes are present and the token is valid, it maps the user identity to a directory account.

This mechanism makes it possible to resolve access tokens by making an internal request, avoiding a request to AM. This mechanism does not ensure that the token requested will have already been replicated to the replica where the request is routed.

AM’s CTS store is constrained to a specific layout. The

authzid-json-pointermust useuserName/0for the user identifier. - HTTP OAuth2 OpenAM

-

Process OAuth 2.0 requests as a resource server, sending requests to AM for access token resolution.

When the client bearing an OAuth 2.0 access token presents the token to access the JSON resource, the directory service requests token information from AM. If the access token is valid, the DS server extracts the user identity and OAuth 2.0 scopes. If the required scopes are present, it maps the user identity to a directory account.

Access token resolution requests should be sent over HTTPS. You can configure a truststore manager if necessary to trust the authorization server certificate, and a keystore manager to obtain the DS server certificate if the authorization server requires mutual authentication.

- HTTP OAuth2 Token Introspection (RFC7662)

-

Handle OAuth 2.0 requests as a resource server, sending requests to an RFC 7662-compliant authorization server for access token resolution.

The DS server must be registered as a client of the authorization server.

When the client bearing an OAuth 2.0 access token presents the token to access the JSON resource, the DS server requests token introspection from the authorization server. If the access token is valid, the DS server extracts the user identity and OAuth 2.0 scopes. If the required scopes are present, it maps the user identity to a directory account.

Access token resolution requests should be sent over HTTPS. You can configure a truststore manager if necessary to trust the authorization server certificate, and a keystore manager to obtain the DS server certificate if the authorization server requires mutual authentication.

| The HTTP OAuth2 File mechanism is an internal interface intended for testing, and not supported for production use. |

When more than one authentication mechanism is specified, mechanisms are applied in the following order:

- If the client request has an Authorization header, and an OAuth 2.0 mechanism is specified, the server attempts to apply the OAuth 2.0 mechanism.

- If the client request has an Authorization header, or has the custom credentials headers specified in the configuration, and an HTTP Basic mechanism is specified, the server attempts to apply the Basic Auth mechanism.

- Otherwise, if an HTTP anonymous mechanism is specified, and none of the previous mechanisms apply, the server attempts to apply the mechanism for anonymous HTTP requests.
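The ordering above can be sketched as a small decision function. This is an illustration of the documented order only, not the server implementation; the function name mechanism_order and the short labels oauth2, basic, and anonymous are hypothetical. It assumes that the anonymous mechanism is tried only when neither of the other two is attempted:

```shell
# Print, in order, the mechanisms the server would attempt for a request.
# $1: "yes" if the request has an Authorization header
# $2: "yes" if the request has the custom credentials headers
# $3: space-separated configured mechanisms, from: oauth2 basic anonymous
mechanism_order() {
  has_authz=$1
  has_custom=$2
  configured=" $3 "
  attempted=no

  # 1. OAuth 2.0 applies when an Authorization header is present.
  case $configured in
    *" oauth2 "*)
      if [ "$has_authz" = yes ]; then
        echo oauth2
        attempted=yes
      fi
      ;;
  esac

  # 2. HTTP Basic applies to an Authorization header or custom headers.
  case $configured in
    *" basic "*)
      if [ "$has_authz" = yes ] || [ "$has_custom" = yes ]; then
        echo basic
        attempted=yes
      fi
      ;;
  esac

  # 3. Anonymous applies only when nothing above was attempted.
  case $configured in
    *" anonymous "*)
      if [ "$attempted" = no ]; then
        echo anonymous
      fi
      ;;
  esac
}
```

For example, mechanism_order no no "oauth2 basic anonymous" prints only anonymous, because the request carries no credentials.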

There are many possibilities when configuring HTTP authorization mechanisms. This procedure shows only one OAuth 2.0 example.

The example below uses settings as listed in the following table. When using secure connections, make sure the servers can trust each other’s certificates. Download ForgeRock Access Management software from the ForgeRock BackStage download site:

| Setting | Value |
|---|---|
| OpenAM URL | |
| Authorization server endpoint | |
| Identity repository | DS server configured by the examples that follow. |
| OAuth 2.0 client ID | |
| OAuth 2.0 client secret | |
| OAuth 2.0 client scopes | |
| Rest2ldap configuration | Default settings. See Configure HTTP User APIs. |

Read the ForgeRock Access Management documentation if necessary to install and configure AM. Then follow these steps to try the demonstration:

- Update the default HTTP OAuth2 OpenAM configuration:

      $ dsconfig \
       set-http-authorization-mechanism-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --mechanism-name "HTTP OAuth2 OpenAM" \
       --set enabled:true \
       --set token-info-url:https://am.example.com:8443/openam/oauth2/tokeninfo \
       --no-prompt \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin

- Update the default Rest2ldap endpoint configuration to use HTTP OAuth2 OpenAM as the authorization mechanism:

      $ dsconfig \
       set-http-endpoint-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --endpoint-name "/api" \
       --set authorization-mechanism:"HTTP OAuth2 OpenAM" \
       --no-prompt \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin

- Obtain an access token with the appropriate scopes:

      $ curl \
       --request POST \
       --user "myClientID:password" \
       --data "grant_type=password&username=bjensen&password=hifalutin&scope=read%20uid%20write" \
       https://am.example.com:8443/openam/oauth2/access_token
      {
        "access_token": "token-string",
        "scope": "uid read write",
        "token_type": "Bearer",
        "expires_in": 3599
      }

  Use HTTPS when obtaining access tokens.

- Request a resource at the Rest2ldap endpoint using HTTP Bearer authentication with the access token:

      $ curl \
       --header "Authorization: Bearer token-string" \
       --cacert ca-cert.pem \
       https://localhost:8443/api/users/bjensen?_prettyPrint=true
      {
        "_id": "bjensen",
        "_rev": "<revision>",
        "_schema": "frapi:opendj:rest2ldap:posixUser:1.0",
        "_meta": {},
        "userName": "bjensen@example.com",
        "displayName": ["Barbara Jensen", "Babs Jensen"],
        "name": {
          "givenName": "Barbara",
          "familyName": "Jensen"
        },
        "description": "Original description",
        "contactInformation": {
          "telephoneNumber": "+1 408 555 1862",
          "emailAddress": "bjensen@example.com"
        },
        "uidNumber": 1076,
        "gidNumber": 1000,
        "homeDirectory": "/home/bjensen",
        "manager": {
          "_id": "trigden",
          "displayName": "Torrey Rigden"
        }
      }

  Use HTTPS when presenting access tokens.
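When scripting the two curl requests above, the access token has to be carried from the first response into the Authorization header of the second. The following is a rough sketch that pulls the access_token value out of a response on standard input with sed; the function name extract_access_token is hypothetical. It assumes the token contains no escaped double quotes, and a real JSON parser is a sturdier choice where one is available:

```shell
# Read a JSON token response on standard input and print the value of its
# "access_token" field. Assumption: the value has no escaped quotes.
extract_access_token() {
  sed -n 's/.*"access_token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

For example, pipe the output of the access token request into extract_access_token, store the result in a shell variable, and use that variable in place of token-string.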

Use administrative APIs

The APIs for configuring and monitoring DS servers are under the following endpoints:

- /admin/config

  Provides a REST API to the server configuration under cn=config.

  By default, this endpoint is protected by the HTTP Basic authorization mechanism. Users reading and editing the configuration must have appropriate privileges, such as config-read and config-write.

  Each LDAP entry maps to a resource under /admin/config, with default values shown in the resource even if they are not set in the LDAP representation.

- /alive

  Provides an endpoint to check whether the server is currently alive, meaning that its internal checks have not found any errors that would require administrative action.

  By default, this endpoint returns a status code to anonymous requests, and supports authenticated requests. For details, see Server is Alive (HTTP).

- /healthy

  Provides an endpoint to check whether the server is currently healthy, meaning that it is alive and any replication delays are below a configurable threshold.

  By default, this endpoint returns a status code to anonymous requests, and supports authenticated requests. For details, see Server Health (HTTP).

- /metrics/api

  Provides read-only access through ForgeRock Common REST to a JSON-based view of cn=monitor and the monitoring backend.

  By default, the HTTP Basic authorization mechanism protects this endpoint. Users reading monitoring information must have the monitor-read privilege.

  The endpoint represents a collection where each LDAP entry maps to a resource under /metrics/api. Use a REST Query with a _queryFilter parameter to access this endpoint. To return all resources, use /metrics/api?_queryFilter=true.

- /metrics/prometheus

  Provides an API to the server monitoring information for use with Prometheus monitoring software.

  By default, this endpoint is protected by the HTTP Basic authorization mechanism. Users reading monitoring information must have the monitor-read privilege.
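Because /alive and /healthy return a status code to anonymous requests, a script can probe them without credentials. The following is a minimal sketch, assuming an HTTPS port as configured earlier and a CA certificate file in PEM format; the function name endpoint_status is hypothetical, and how to interpret each status code is left to the pages referenced above:

```shell
# Print the HTTP status code an endpoint returns to an anonymous request.
# $1: base URL, for example https://localhost:8443
# $2: endpoint path, /alive or /healthy
# $3: CA certificate file in PEM format (assumption: exported beforehand)
endpoint_status() {
  curl \
   --silent \
   --output /dev/null \
   --write-out '%{http_code}' \
   --cacert "$3" \
   "$1$2"
}
```

For example, endpoint_status https://localhost:8443 /healthy ca-cert.pem prints only the numeric status code.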

To use the Admin endpoint APIs, follow these steps:

- Grant users access to the endpoints as appropriate:

  - For access to /admin/config, assign config-read or config-write privileges, and a global ACI to read or update cn=config.

    The following example grants Kirsten Vaughan access to read the configuration:

        $ ldapmodify \
         --hostname localhost \
         --port 1636 \
         --useSsl \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin \
         --bindDN uid=admin \
         --bindPassword password << EOF
        dn: uid=kvaughan,ou=People,dc=example,dc=com
        changetype: modify
        add: ds-privilege-name
        ds-privilege-name: config-read
        EOF

        $ dsconfig \
         set-access-control-handler-prop \
         --add global-aci:\(target=\"ldap:///cn=config\"\)\(targetattr=\"*\"\)\ \(version\ 3.0\;\ acl\ \"Read\ configuration\"\;\ allow\ \(read,search\)\ userdn=\"ldap:///uid=kvaughan,ou=People,dc=example,dc=com\"\;\) \
         --hostname localhost \
         --port 4444 \
         --bindDN uid=admin \
         --bindPassword password \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin \
         --no-prompt

  - For access to /metrics endpoints, if no account for monitoring was created at setup time, assign the monitor-read privilege.

    The following example adds the monitor-read privilege to Kirsten Vaughan’s entry:

        $ ldapmodify \
         --hostname localhost \
         --port 1636 \
         --useSsl \
         --usePkcs12TrustStore /path/to/opendj/config/keystore \
         --trustStorePassword:file /path/to/opendj/config/keystore.pin \
         --bindDN uid=admin \
         --bindPassword password << EOF
        dn: uid=kvaughan,ou=People,dc=example,dc=com
        changetype: modify
        add: ds-privilege-name
        ds-privilege-name: monitor-read
        EOF

  For details, see Administrative privileges.

- Adjust the authorization-mechanism settings for the Admin endpoint.

  By default, the Admin endpoint uses the HTTP Basic authorization mechanism. The HTTP Basic authorization mechanism default configuration resolves the user identity extracted from the HTTP request to an LDAP user identity as follows:

  - If the request has an Authorization: Basic header for HTTP Basic authentication, the server extracts the username and password.

  - If the request has X-OpenIDM-Username and X-OpenIDM-Password headers, the server extracts the username and password.

  - The server uses the default exact match identity mapper to search for a unique match between the username and the UID attribute value of an entry in the local public naming contexts of the DS server.

    In other words, in LDAP terms, it searches under all user data base DNs for (uid=http-username). The username kvaughan maps to the example entry with DN uid=kvaughan,ou=People,dc=example,dc=com.

  For details, see Identity mappers, and Configure HTTP authorization.

- Test access to the endpoint as an authorized user.

  The following example reads the Admin endpoint resource under /admin/config. It first generates the CA certificate in PEM format from the server deployment ID and password, then uses the CA certificate to trust the server certificate. A CA certificate is only necessary if the CA is not well-known:

      $ dskeymgr \
       export-ca-cert \
       --deploymentId $DEPLOYMENT_ID \
       --deploymentIdPassword password \
       --outputFile ca-cert.pem

      $ curl \
       --cacert ca-cert.pem \
       --user kvaughan:bribery \
       https://localhost:8443/admin/config/http-endpoints/%2Fadmin?_prettyPrint=true
      {
        "_id" : "/admin",
        "_rev" : "<revision>",
        "_schema" : "admin-endpoint",
        "java-class" : "org.opends.server.protocols.http.rest2ldap.AdminEndpoint",
        "base-path" : "/admin",
        "authorization-mechanism" : [ {
          "_id" : "HTTP Basic",
          "_rev" : "<revision>"
        } ],
        "enabled" : true
      }

  Notice how the path to the resource in the example above, /admin/config/http-endpoints/%2Fadmin, corresponds to the DN of the entry under cn=config, which is ds-cfg-base-path=/admin,cn=HTTP Endpoints,cn=config.

  The following example demonstrates reading everything under /metrics/api:

      $ curl \
       --cacert ca-cert.pem \
       --user kvaughan:bribery \
       https://localhost:8443/metrics/api?_queryFilter=true
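The %2Fadmin segment in the resource path above is the base path /admin with its slash percent-encoded, so that the whole name fits in one path segment. The following sketch builds such a path for an HTTP endpoint name. The function names are hypothetical, only slashes are encoded, and applying the same pattern to endpoints other than /admin is an inference from the example rather than documented behavior:

```shell
# Percent-encode the slashes in a name so it can be used as a single path
# segment, for example /admin becomes %2Fadmin.
# Other reserved characters are not handled in this sketch.
encode_segment() {
  printf '%s\n' "$1" | sed 's|/|%2F|g'
}

# Build the /admin/config resource path for an HTTP endpoint entry.
# $1: the endpoint base path, such as /admin
http_endpoint_resource() {
  printf '/admin/config/http-endpoints/%s\n' "$(encode_segment "$1")"
}
```

For example, http_endpoint_resource /admin prints /admin/config/http-endpoints/%2Fadmin, the path used in the request above.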

LDAP access

Set the LDAP port

The reserved port number for LDAP is 389.

Most examples in the documentation use 1389, which is accessible to non-privileged users:

- The following example changes the LDAP port number to 11389:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAP \
       --set listen-port:11389 \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

- Restart the connection handler, and the change takes effect:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAP \
       --set enabled:false \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAP \
       --set enabled:true \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt
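Restarting a connection handler is the same disable-then-enable pair for any handler, so the two commands can be wrapped in one function. The following is a minimal sketch using the same connection settings as the example above; the function name restart_connection_handler is hypothetical:

```shell
# Restart a connection handler by disabling it, then enabling it again.
# $1: handler name, such as LDAP or LDAPS
# Stops with a non-zero status if either dsconfig call fails.
restart_connection_handler() {
  for state in false true; do
    dsconfig \
     set-connection-handler-prop \
     --hostname localhost \
     --port 4444 \
     --bindDN uid=admin \
     --bindPassword password \
     --handler-name "$1" \
     --set "enabled:$state" \
     --usePkcs12TrustStore /path/to/opendj/config/keystore \
     --trustStorePassword:file /path/to/opendj/config/keystore.pin \
     --no-prompt || return 1
  done
}
```

For example, run restart_connection_handler LDAP after changing the listen port.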

Enable StartTLS

StartTLS negotiations start on the unsecure LDAP port, and then protect communication with the client:

- Activate StartTLS on the current LDAP port:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAP \
       --set allow-start-tls:true \
       --set key-manager-provider:PKCS12 \
       --set trust-manager-provider:"JVM Trust Manager" \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

  If the key manager provider has multiple key pairs that DS could use for TLS, where the secret key was generated with the same key algorithm, such as EC or RSA, you can specify which key pairs to use with the --set ssl-cert-nickname:server-cert option. The server-cert is the certificate alias of the key pair. This option is not necessary if there is only one server key pair, or if each secret key was generated with a different key algorithm.

  The change takes effect. No need to restart the server.

Set the LDAPS port

At setup time, use the --ldapsPort option.

Later, follow these steps to set up an LDAPS port:

- Configure the server to activate LDAPS access:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAPS \
       --set enabled:true \
       --set listen-port:1636 \
       --set use-ssl:true \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

- If the deployment requires SSL client authentication, set the ssl-client-auth-policy and trust-manager-provider properties appropriately.

Set the LDAPS port

The reserved port number for LDAPS is 636.

Most examples in the documentation use 1636, which is accessible to non-privileged users.

- Change the port number using the dsconfig command.

  The following example changes the LDAPS port number to 11636:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAPS \
       --set listen-port:11636 \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

- Restart the connection handler so the change takes effect.

  To restart the connection handler, you disable it, then enable it again:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAPS \
       --set enabled:false \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDAPS \
       --set enabled:true \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

LDIF file access

The LDIF connection handler lets you change directory data by placing LDIF files in a file system directory. The DS server regularly polls for changes to the directory. The server deletes the LDIF file after making the changes.

- Add the directory where you put LDIF to be processed:

      $ mkdir /path/to/opendj/config/auto-process-ldif

  This example uses the default value of the ldif-directory property.

- Activate LDIF file access:

      $ dsconfig \
       set-connection-handler-prop \
       --hostname localhost \
       --port 4444 \
       --bindDN uid=admin \
       --bindPassword password \
       --handler-name LDIF \
       --set enabled:true \
       --usePkcs12TrustStore /path/to/opendj/config/keystore \
       --trustStorePassword:file /path/to/opendj/config/keystore.pin \
       --no-prompt

  The change takes effect immediately.
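Once the handler is active, a change is submitted by writing an LDIF file into the polled directory. The following is a minimal sketch that writes one modify operation for the example entry uid=bjensen. The LDIF_DIR variable and the file name description-change.ldif are hypothetical; LDIF_DIR defaults to a scratch directory so that the sketch can run without a server, and should point at the ldif-directory location, such as /path/to/opendj/config/auto-process-ldif, in a real deployment:

```shell
# Assumption: LDIF_DIR is the directory that the LDIF connection handler
# polls. It falls back to a scratch directory for a dry run.
LDIF_DIR="${LDIF_DIR:-$(mktemp -d)}"

# Write a single change in LDIF change-record format.
cat > "$LDIF_DIR/description-change.ldif" << EOF
dn: uid=bjensen,ou=People,dc=example,dc=com
changetype: modify
replace: description
description: Updated through the LDIF connection handler
EOF
```

As described above, the server deletes the LDIF file after making the changes, so the file disappearing from the directory is expected.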

LDAP schema

About LDAP schema

Directory schema, described in RFC 4512, define the kinds of information you find in the directory, and how the information is related.

By default, DS servers conform strictly to LDAPv3 standards for schema definitions and syntax checking. This ensures that data stored is valid and properly formed. Unless your data uses only standard schema present in the server when you install, you must add additional schema definitions to account for the data specific to your applications.

DS servers include many standard schema definitions. You can update and extend schema definitions while DS servers are online. As a result, you can add new applications requiring additional data without stopping your directory service.

The examples that follow focus primarily on the following types of directory schema definitions:

- Attribute type definitions describe attributes of directory entries, such as givenName or mail.

  Here is an example of an attribute type definition:

      # Attribute type definition
      attributeTypes: ( 0.9.2342.19200300.100.1.3 NAME ( 'mail' 'rfc822Mailbox' )
        EQUALITY caseIgnoreIA5Match SUBSTR caseIgnoreIA5SubstringsMatch
        SYNTAX 1.3.6.1.4.1.1466.115.121.1.26{256} X-ORIGIN 'RFC 4524' )

  Attribute type definitions start with an OID, and a short name or names that are easier to remember. The attribute type definition can specify how attribute values should be collated for sorting, and what syntax they use.

  The X-ORIGIN is an extension to identify where the definition originated. When you define your own schema, provide an X-ORIGIN to help track versions of definitions.

  Attribute type definitions indicate whether the attribute is:

  - A user attribute intended to be modified by external applications.

    This is the default, and you can make it explicit with USAGE userApplications.

    The user attributes that are required and permitted on each entry are defined by the entry’s object classes. The server checks what the entry’s object classes require and permit when updating user attributes.

  - An operational attribute intended to be managed by the server for internal purposes.

    You can specify this with USAGE directoryOperation.

    The server does not check whether an operational attribute is allowed by an object class.

  Attribute type definitions differentiate operational attributes with the following USAGE types:

  - USAGE directoryOperation indicates a generic operational attribute.

    Use this type, for example, when creating a last login time attribute.

  - USAGE dSAOperation indicates a DSA-specific operational attribute, meaning an operational attribute specific to the current server.

  - USAGE distributedOperation indicates a DSA-shared operational attribute, meaning an operational attribute shared by multiple servers.

- Object class definitions identify the attribute types that an entry must have, and may have.

  Here is an example of an object class definition:

      # Object class definition
      objectClasses: ( 2.5.6.6 NAME 'person' SUP top STRUCTURAL MUST ( sn $ cn )
        MAY ( userPassword $ telephoneNumber $ seeAlso $ description )
        X-ORIGIN 'RFC 4519' )

  Entries all have an attribute identifying their object class(es), called objectClass.

  Object class definitions start with an object identifier (OID), and a short name that is easier to remember.

  The definition here says that the person object class inherits from the top object class, which is the top-level parent of all object classes.

  An entry can have one STRUCTURAL object class inheritance branch, such as top → person → organizationalPerson → inetOrgPerson. Entries can have multiple AUXILIARY object classes. The object class defines the attribute types that must and may be present on entries of the object class.

- An attribute syntax constrains what directory clients can store as attribute values.

An attribute syntax is identified in an attribute type definition by its OID. String-based syntax OIDs are optionally followed by a number set between braces. The number represents a minimum upper bound on the number of characters in the attribute value. For example, in the attribute type definition shown above, the syntax is

1.3.6.1.4.1.1466.115.121.1.26{256}, IA5 string. An IA5 string (composed of characters from the international version of the ASCII character set) can contain at least 256 characters.You can find a table matching attribute syntax OIDs with their human-readable names in RFC 4517, Appendix A. Summary of Syntax Object Identifiers. The RFC describes attribute syntaxes in detail. You can list attribute syntaxes with the

dsconfigcommand.If you are trying unsuccessfully to import non-compliant data, clean the data before importing it. If cleaning the data is not an option, read Import Legacy Data.

When creating attribute type definitions, use existing attribute syntaxes where possible. If you must create your own attribute syntax, then consider the schema extensions in Update LDAP schema.

Although attribute syntaxes are often specified in attribute type definitions, DS servers do not always check that attribute values comply with attribute syntaxes. DS servers do enforce compliance by default for the following to avoid bad directory data:

-

Certificates

-

Country strings

-

Directory strings

-

JPEG photos

-

Telephone numbers

-

-

Matching rules define how to compare attribute values to assertion values for LDAP search and LDAP compare operations.

For example, suppose you search with the filter (uid=bjensen). The assertion value in this case is bjensen.

DS servers have the following schema definition for the user ID attribute:

attributeTypes: ( 0.9.2342.19200300.100.1.1 NAME ( 'uid' 'userid' ) EQUALITY caseIgnoreMatch SUBSTR caseIgnoreSubstringsMatch SYNTAX 1.3.6.1.4.1.1466.115.121.1.15{256} X-ORIGIN 'RFC 4519' )

When finding an equality match for your search, servers use the caseIgnoreMatch matching rule to check for user ID attribute values that equal bjensen.

You can read the schema definitions for matching rules that the server supports by performing an LDAP search:

$ ldapsearch \
--hostname localhost \
--port 1636 \
--useSsl \
--usePkcs12TrustStore /path/to/opendj/config/keystore \
--trustStorePassword:file /path/to/opendj/config/keystore.pin \
--bindDn uid=kvaughan,ou=People,dc=example,dc=com \
--bindPassword bribery \
--baseDn cn=schema \
--searchScope base \
"(&)" \
matchingRules

Notice that many matching rules support string collation in languages other than English. For the full list of string collation matching rules, see Supported locales. For the list of other matching rules, see Matching rules.

Matching rules enable directory clients to compare other values besides strings.
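As a rough illustration of what an equality matching rule does, the following Python sketch compares an assertion value with attribute values after normalizing both sides. The normalize function is a hypothetical simplification that only trims, collapses spaces, and folds case. The real caseIgnoreMatch rule follows the string preparation steps defined for LDAP, so treat this as a sketch of the idea, not of the server's implementation.

```python
def normalize(value: str) -> str:
    """Hypothetical, simplified normalization: trim, collapse spaces, fold case."""
    return " ".join(value.split()).casefold()


def equality_match(attribute_values: list[str], assertion_value: str) -> bool:
    """Return True if any attribute value equals the assertion value after normalization."""
    wanted = normalize(assertion_value)
    return any(normalize(value) == wanted for value in attribute_values)


# Sample uid values for an entry (illustrative data).
uid_values = ["BJensen"]
print(equality_match(uid_values, "bjensen"))   # True
print(equality_match(uid_values, "bjensen2"))  # False
```

The assertion value from the filter (uid=bjensen) matches the stored value regardless of case, which is the behavior the EQUALITY caseIgnoreMatch clause requests in the schema definition.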

DS servers expose schema over protocol through the cn=schema entry.

The server stores the schema definitions in LDIF format files in the db/schema/ directory.

When you set up a server, the process copies many definitions to this location.

Update LDAP schema

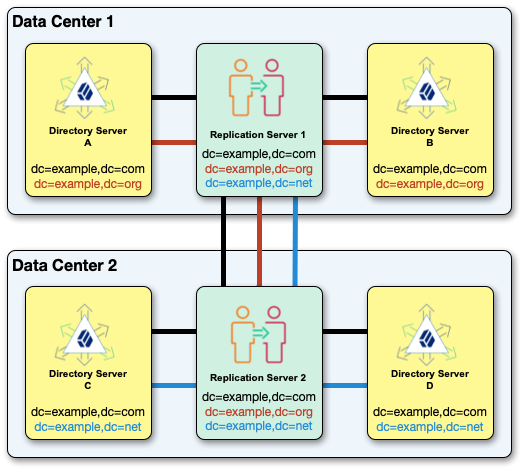

DS servers allow you to update directory schema definitions while the server is running. You can add support for new types of attributes and entries without interrupting the directory service. DS servers replicate schema definitions, propagating them to other replicas automatically.

You update schema either by:

-

Performing LDAP modify operations while the server is running.

-

Adding schema files to the db/schema/ directory before starting the server.

Before adding schema definitions, take note of the following points:

-

Define DS schema elements in LDIF.

For examples, see the built-in schema files in the db/schema/ directory.

-

Add your schema definitions in a file prefixed with a higher number than built-in files, such as 99-user.ldif, which is the default filename when you modify schema over LDAP.

On startup, the DS server reads schema files in order, sorted alphanumerically. Your definitions likely depend on others that the server should read first.

-

Define a schema element before referencing it in other definitions.

For example, make sure you define an attribute type before using it in an object class definition.

For an example, see Custom schema.
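The effect of the numeric prefix on read order can be sketched in Python. This assumes the server's alphanumeric ordering behaves like a plain string sort of the file names. The name 50-example.ldif is hypothetical; only 00-core.ldif and 99-user.ldif appear in this guide.

```python
# Hypothetical file names; only 00-core.ldif and 99-user.ldif appear in this guide.
schema_files = ["99-user.ldif", "50-example.ldif", "00-core.ldif"]


def read_order(file_names):
    """Return schema file names in the order a plain alphanumeric sort produces."""
    return sorted(file_names)


for name in read_order(schema_files):
    print(name)
```

Because 99-user.ldif sorts last, definitions in it can reference elements defined in the files read before it.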

DS servers support the standard LDAP schema definitions described in RFC 4512, section 4.1. They also support the following extensions:

X-DEPRECATED-SINCE

This specifies a release that deprecates the schema element.

Example: X-DEPRECATED-SINCE: 7.2.5

X-ORIGIN

This specifies the origin of a schema element.

Examples:

-

X-ORIGIN 'RFC 4519'

-

X-ORIGIN 'draft-ietf-ldup-subentry'

-

X-ORIGIN 'DS Directory Server'

X-SCHEMA-FILE

This specifies the relative path to the schema file containing the schema element.

Schema definitions are located in /path/to/opendj/db/schema/*.ldif files.

Example: X-SCHEMA-FILE '00-core.ldif'.

X-STABILITY

Used to specify the interface stability of the schema element.

This extension takes one of the following values:

-

Evolving

-

Internal

-

Removed

-

Stable

-

Technology Preview

Extensions to syntax definitions require additional code to support syntax checking. DS servers support the following extensions for their particular use cases:

X-ENUM

This defines a syntax that is an enumeration of values.

The following attribute syntax description defines a syntax allowing four possible attribute values:

ldapSyntaxes: ( security-label-syntax-oid DESC 'Security Label' X-ENUM ( 'top-secret' 'secret' 'confidential' 'unclassified' ) )

X-PATTERN

This defines a syntax based on a regular expression pattern. Valid regular expressions are those defined for java.util.regex.Pattern.

The following attribute syntax description defines a simple, lenient SIP phone URI syntax check:

ldapSyntaxes: ( simple-sip-uri-syntax-oid DESC 'Lenient SIP URI Syntax' X-PATTERN '^sip:[a-zA-Z0-9.]+@[a-zA-Z0-9.]+(:[0-9]+)?$' )

X-SUBST

This specifies a substitute syntax to use for one that DS servers do not implement.

The following example substitutes Directory String syntax, OID 1.3.6.1.4.1.1466.115.121.1.15, for a syntax that DS servers do not implement:

ldapSyntaxes: ( non-implemented-syntax-oid DESC 'Not Implemented in DS' X-SUBST '1.3.6.1.4.1.1466.115.121.1.15' )

X-APPROX

X-APPROX specifies a non-default approximate matching rule for an attribute type.

The default is the double metaphone approximate match.
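Before adding an X-PATTERN syntax to the schema, it can help to try the regular expression against sample values. The following Python sketch does this for the lenient SIP URI pattern shown earlier. It assumes that Python's re module treats this particular pattern the same way java.util.regex.Pattern does, which holds for the simple constructs used here but is not guaranteed for every pattern. The sample values are illustrative.

```python
import re

# Pattern copied from the X-PATTERN example in this section.
SIP_URI = re.compile(r"^sip:[a-zA-Z0-9.]+@[a-zA-Z0-9.]+(:[0-9]+)?$")


def is_valid_sip_uri(value: str) -> bool:
    """Return True if the value matches the lenient SIP URI pattern."""
    return SIP_URI.match(value) is not None


# Illustrative sample values, not taken from a real directory.
for candidate in [
    "sip:bjensen@example.com",
    "sip:bjensen@example.com:5060",
    "bjensen@example.com",
]:
    print(candidate, is_valid_sip_uri(candidate))
```

Values without the sip: prefix fail the check, which mirrors how the server would reject them for an attribute that uses this syntax.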

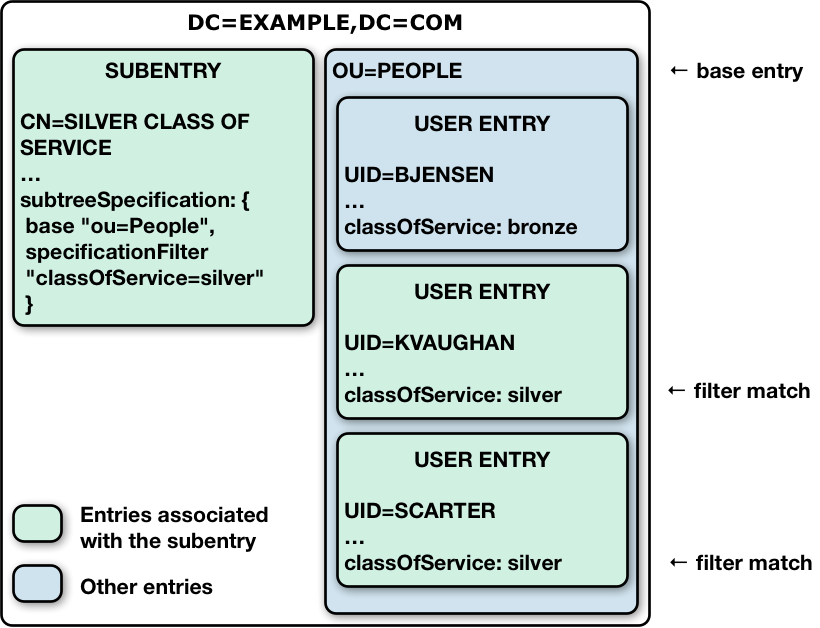

Custom schema

This example updates the LDAP schema while the server is online. It defines a custom enumeration syntax, a custom attribute type that uses the syntax, and a custom object class that uses the attribute:

-

A custom enumeration syntax using the X-ENUM extension.

-

A custom attribute type using the custom syntax.

-

A custom object class for entries that have the custom attribute:

$ ldapmodify \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=admin \

--bindPassword password <<EOF

dn: cn=schema

changetype: modify

add: ldapSyntaxes

ldapSyntaxes: ( temporary-syntax-oid

DESC 'Custom enumeration syntax'

X-ENUM ( 'bronze' 'silver' 'gold' )

X-ORIGIN 'DS Documentation Examples'

X-SCHEMA-FILE '99-user.ldif' )

-

add: attributeTypes

attributeTypes: ( temporary-attr-oid

NAME 'myEnum'

DESC 'Custom attribute type for'

SYNTAX temporary-syntax-oid

SINGLE-VALUE

USAGE userApplications

X-ORIGIN 'DS Documentation Examples'

X-SCHEMA-FILE '99-user.ldif' )

-

add: objectClasses

objectClasses: ( temporary-oc-oid

NAME 'myEnumObjectClass'

DESC 'Custom object class for entries with a myEnum attribute'

SUP top

AUXILIARY

MAY myEnum

X-ORIGIN 'DS Documentation Examples'

X-SCHEMA-FILE '99-user.ldif' )

EOF

# MODIFY operation successful for DN cn=schema

Notice the following properties of this update to the schema definitions:

-

The ldapSyntaxes definition comes before the attributeTypes definition that uses the syntax.

The attributeTypes definition comes before the objectClasses definition that uses the attribute type.

-

Each definition has a temporary OID of the form temporary-*-oid.

While you develop new schema definitions, temporary OIDs are fine. Get permanent, correctly assigned OIDs before using schema definitions in production.

-

Each definition has a DESC (description) string intended for human readers.

-

Each definition specifies its origin with the extension X-ORIGIN 'DS Documentation Examples'.

-

Each definition specifies its schema file with the extension X-SCHEMA-FILE '99-user.ldif'.

-

The syntax definition has no name, as it is referenced internally by OID only.

-

X-ENUM ( 'bronze' 'silver' 'gold' ) indicates that the syntax allows three values, bronze, silver, gold.

DS servers reject other values for attributes with this syntax.

-

The attribute type named myEnum has these properties:

  -

  It uses the enumeration syntax, SYNTAX temporary-syntax-oid.

  -

  It can only have one value at a time, SINGLE-VALUE.

  The default, if you omit SINGLE-VALUE, is to allow multiple values.

  -

  It is intended for use by user applications, USAGE userApplications.

-

The object class named myEnumObjectClass has these properties:

  -

  Its parent for inheritance is the top-level object class, SUP top.

  top is the abstract parent of all structural object class hierarchies, so all object classes inherit from it.

  -

  It is an auxiliary object class, AUXILIARY.

  Auxiliary object classes are used to augment attributes of entries that already have a structural object class.

  -

  It defines no required attributes (no MUST).

  -

  It defines one optional attribute which is the custom attribute, MAY myEnum.

After adding the schema definitions, you can add the attribute to an entry as shown in the following example:

$ ldapmodify \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=admin \

--bindPassword password <<EOF

dn: uid=bjensen,ou=People,dc=example,dc=com

changetype: modify

add: objectClass

objectClass: myEnumObjectClass

-

add: myEnum

myEnum: silver

EOF

# MODIFY operation successful for DN uid=bjensen,ou=People,dc=example,dc=com

As shown in the following example, the attribute syntax prevents users from setting an attribute value that is not specified in your enumeration:

$ ldapmodify \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=admin \

--bindPassword password <<EOF

dn: uid=bjensen,ou=People,dc=example,dc=com

changetype: modify

replace: myEnum

myEnum: wrong value

EOF

# The LDAP modify request failed: 21 (Invalid Attribute Syntax)

# Additional Information: When attempting to modify entry uid=bjensen,ou=People,dc=example,dc=com to replace the set of values for attribute myEnum, value "wrong value" was found to be invalid according to the associated syntax: The provided value "wrong value" cannot be parsed because it is not allowed by enumeration syntax with OID "temporary-syntax-oid"

For more examples, read the built-in schema definition files in the db/schema/ directory.

Schema and JSON

DS software has the following features for working with JSON objects:

-

RESTful HTTP access to directory services

If you have LDAP data, but HTTP client applications want JSON over HTTP instead, DS software can expose your LDAP data as JSON resources over HTTP to REST clients. You can configure how LDAP entries map to JSON resources.

There is no requirement to change LDAP schema definitions before using this feature. To get started, see DS REST APIs.

-

JSON syntax LDAP attributes

If you have LDAP client applications that store JSON in the directory, you can define LDAP attributes that have Json syntax.

The following schema excerpt defines an attribute called json with case-insensitive matching:

attributeTypes: ( json-attribute-oid NAME 'json' SYNTAX 1.3.6.1.4.1.36733.2.1.3.1 EQUALITY caseIgnoreJsonQueryMatch X-ORIGIN 'DS Documentation Examples' )

Notice that the JSON syntax OID is 1.3.6.1.4.1.36733.2.1.3.1. The definition above uses the (default) caseIgnoreJsonQueryMatch matching rule for equality. As explained later in this page, you might want to choose different matching rules for your JSON attributes.

When DS servers receive update requests for Json syntax attributes, they expect valid JSON objects. By default, Json syntax attribute values must comply with The JavaScript Object Notation (JSON) Data Interchange Format, described in RFC 7159. You can use the advanced core schema configuration option json-validation-policy to have the server be more lenient in what it accepts, or to disable JSON syntax checking.

-

Configurable indexing for JSON attributes

When you store JSON attributes in the directory, you can index every field in each JSON attribute value, or you can index only what you use.

As for other LDAP attributes, the indexes depend on the matching rule defined for the JSON syntax attribute.

DS servers treat JSON syntax attribute values as objects. Two JSON values may be considered equivalent despite differences in their string representations. The following JSON objects can be considered equivalent because each field has the same value. Their string representations are different, however:

[

{ "id": "bjensen", "given-name": "Barbara", "surname": "Jensen" },

{"surname":"Jensen","given-name":"Barbara","id":"bjensen"}

]

Unlike other objects with their own LDAP attribute syntaxes, such as X.509 certificates, two JSON objects with completely different structures (different field names and types) are still both JSON. Nothing in the JSON syntax alone tells the server anything about what a JSON object must and may contain.

When defining LDAP schema for JSON attributes, it helps therefore to understand the structure of the expected JSON. Will the attribute values be arbitrary JSON objects, or JSON objects whose structure is governed by some common schema? If a JSON attribute value is an arbitrary object, you can do little to optimize how it is indexed or compared. If the value is a structured object, however, you can configure optimizations based on the structure.

For structured JSON objects, the definition of JSON object equality is what enables you to pick the optimal matching rule for the LDAP schema definition. The matching rule determines how the server indexes the attribute, and how the server compares two JSON values for equality. You can define equality in the following ways:

-

Two JSON objects are equal if all fields have the same values.

By this definition, {"a": 1, "b": true} equals {"b":true,"a":1}. However, {"a": 1, "b": true} and {"a": 1, "b": "true"} are different.

-

Two JSON objects are equal if some fields have the same values. Other fields are ignored when comparing for equality.

For example, take the case where two JSON objects are considered equal if they have the same "_id" values. By this definition, {"_id":1,"b":true} equals {"_id":1,"b":false}. However, {"_id":1,"b":true} and {"_id":2,"b":true} are different.

DS servers have built-in matching rules for the case where equality means "_id" values are equal. If the fields to compare are different from "_id", you must define your own matching rule and configure a custom schema provider that implements it.

The following table helps you choose a JSON equality matching rule:

| JSON Content | Two JSON Objects are Equal if… | Use One of These Matching Rules |
|---|---|---|
| Arbitrary (any valid JSON is allowed) | All fields have the same values. | |
| Structured | All fields have the same values. | |
| Structured | One or more other fields have the same values. Additional fields are ignored when comparing for equality. | When using this matching rule, create a custom |
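The two definitions of JSON equality described above can be sketched in Python. This is only an illustration of the definitions, using the sample objects from this section. The function names are hypothetical, and Python's own comparison of parsed values stands in for the server's matching rules.

```python
import json


def equal_all_fields(a: str, b: str) -> bool:
    """Equal if the parsed objects have the same fields with the same values."""
    return json.loads(a) == json.loads(b)


def equal_by_id(a: str, b: str) -> bool:
    """Equal if the "_id" fields match; all other fields are ignored."""
    return json.loads(a)["_id"] == json.loads(b)["_id"]


# Field order and white space do not matter once the text is parsed.
print(equal_all_fields('{"a": 1, "b": true}', '{"b":true,"a":1}'))       # True
# A boolean and a string are different values.
print(equal_all_fields('{"a": 1, "b": true}', '{"a": 1, "b": "true"}'))  # False
# Only "_id" is compared.
print(equal_by_id('{"_id":1,"b":true}', '{"_id":1,"b":false}'))          # True
print(equal_by_id('{"_id":1,"b":true}', '{"_id":2,"b":true}'))           # False
```

The first definition compares whole objects, so the string representation is irrelevant. The second compares a single identifying field, which is what makes it suitable for structured objects that carry an "_id".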

When you choose an equality matching rule in the LDAP attribute definition, you are also choosing the default that applies in an LDAP search filter equality assertion. For example, caseIgnoreJsonQueryMatch works with filters such as "(json=id eq 'bjensen')".

DS servers also implement JSON ordering matching rules for determining the relative order of two JSON values using a custom set of rules. You can select which JSON fields should be used for performing the ordering match. You can also define whether those fields that contain strings should be normalized before comparison by trimming white space or ignoring case differences.
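The idea of ordering JSON values by selected fields, with optional normalization of string fields, can be sketched as follows. This is a minimal Python illustration, not the server's algorithm. The ordering_key function, its parameters, and the sample data are all hypothetical.

```python
import json


def ordering_key(json_text: str, fields: list[str], ignore_case: bool = True):
    """Build a sort key from the selected fields, normalizing string values."""
    obj = json.loads(json_text)
    key = []
    for field in fields:
        value = obj.get(field, "")
        if isinstance(value, str):
            value = value.strip()          # trim white space
            if ignore_case:
                value = value.casefold()   # ignore case differences
        key.append(value)
    return tuple(key)


# Illustrative values with inconsistent case and stray spaces in "surname".
values = [
    '{"id": "scarter", "surname": " carter"}',
    '{"id": "bjensen", "surname": "Jensen"}',
    '{"id": "ajensen", "surname": "jensen "}',
]
ordered = sorted(values, key=lambda v: ordering_key(v, ["surname", "id"]))
print([json.loads(v)["id"] for v in ordered])  # ['scarter', 'ajensen', 'bjensen']
```

Without normalization, " carter", "Jensen", and "jensen " would sort according to their raw characters. With it, the two Jensen values compare equal on surname and fall back to the second field.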

DS servers can implement JSON ordering matching rules on demand when presented with an extended server-side sort request, as described in Server-Side Sort. If, however, you define them statically in your LDAP schema, then you must implement them by creating a custom json-ordering-matching-rule Schema Provider. For details about the json-ordering-matching-rule object’s properties, see JSON Ordering Matching Rule.

For examples showing how to add LDAP schema for new attributes, see Update LDAP Schema. For examples showing how to index JSON attributes, see Custom Indexes for JSON.

Import legacy data

By default, DS servers accept data that follows the schema and reject data that does not. You might have legacy data from a directory service that is more lenient, allowing non-standard constructions such as multiple structural object classes per entry, not checking attribute value syntax, or even not respecting schema definitions.

For example, when importing data with multiple structural object classes defined per entry, you can relax schema checking to warn rather than reject entries having this issue:

$ dsconfig \

set-global-configuration-prop \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--set single-structural-objectclass-behavior:warn \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--no-prompt

You can allow attribute values that do not respect the defined syntax:

$ dsconfig \

set-global-configuration-prop \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--set invalid-attribute-syntax-behavior:warn \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--no-prompt

You can even turn off schema checking altogether. Only turn off schema checking when you are absolutely sure that the entries and attributes already respect the schema definitions:

$ dsconfig \

set-global-configuration-prop \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--set check-schema:false \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--no-prompt

Turning off schema checking can potentially boost import performance.

Standard schema

DS servers provide many standard schema definitions.

For full descriptions, see the Schema Reference.

Find the definitions in LDIF files in the /path/to/opendj/db/schema/ directory:

| File | Description |
|---|---|
| | Schema definitions for the following Internet-Drafts, RFCs, and standards: |
| | Schema for draft-behera-ldap-password-policy (Draft 09), which defines a mechanism for storing password policy information in an LDAP directory server. |
| | This file contains the attribute type and objectclass definitions for use with the server configuration. |
| | Schema for draft-good-ldap-changelog, which defines a mechanism for storing information about changes to directory server data. |
| | Schema for RFC 2713, which defines a mechanism for storing serialized Java objects in the directory server. |
| | Schema for RFC 2714, which defines a mechanism for storing CORBA objects in the directory server. |
| | Schema for RFC 2739, which defines a mechanism for storing calendar and vCard objects in the directory server. Be aware that the definition in RFC 2739 contains a number of errors. This schema file has been altered from the standard definition to fix a number of those problems. |
| | Schema for RFC 2926, which defines a mechanism for mapping between Service Location Protocol (SLP) advertisements and LDAP. |
| | Schema for RFC 3112, which defines the authentication password schema. |
| | Schema for RFC 3712, which defines a mechanism for storing printer information in the directory server. |
| | Schema for RFC 4403, which defines a mechanism for storing UDDIv3 information in the directory server. |
| | Schema for draft-howard-rfc2307bis, which defines a mechanism for storing naming service information in the directory server. |
| | Schema for RFC 4876, which defines a schema for storing Directory User Agent (DUA) profiles and preferences in the directory server. |
| | Schema required when storing Samba user accounts in the directory server. |
| | Schema required for Solaris and OpenSolaris LDAP naming services. |
| | Backwards-compatible schema for use in the server configuration. |

Indexes

About indexes

A basic, standard directory feature is the ability to respond quickly to searches.

An LDAP search specifies the information that directly affects how long the directory might take to respond:

-

The base DN for the search.

The more specific the base DN, the less information to check during the search. For example, a request with base DN dc=example,dc=com potentially involves checking many more entries than a request with base DN uid=bjensen,ou=people,dc=example,dc=com.

-

The scope of the search.

A subtree or one-level scope targets many entries, whereas a base search is limited to one entry.

-

The search filter to match.

A search filter asserts that for an entry to match, it has an attribute that corresponds to some value. For example, (cn=Babs Jensen) asserts that cn must have a value that equals Babs Jensen.

A directory server would waste resources checking all entries for a match. Instead, directory servers maintain indexes to expedite checking for a match.

LDAP directory servers disallow searches that cannot be handled expediently using indexes. Maintaining appropriate indexes is a key aspect of directory administration.

Role of an index

The role of an index is to answer the question, "Which entries have an attribute with this corresponding value?"

Each index is therefore specific to an attribute.

Each index is also specific to the comparison implied in the search filter. For example, a directory server maintains distinct indexes for exact (equality) matching and for substring matching. The types of indexes are explained in Index types. Furthermore, indexes are configured in specific directory backends.

Index implementation

An index is implemented as a tree of key-value pairs.

The key is a form of the value to match, such as babs jensen.

The value is a list of IDs for entries that match the key.

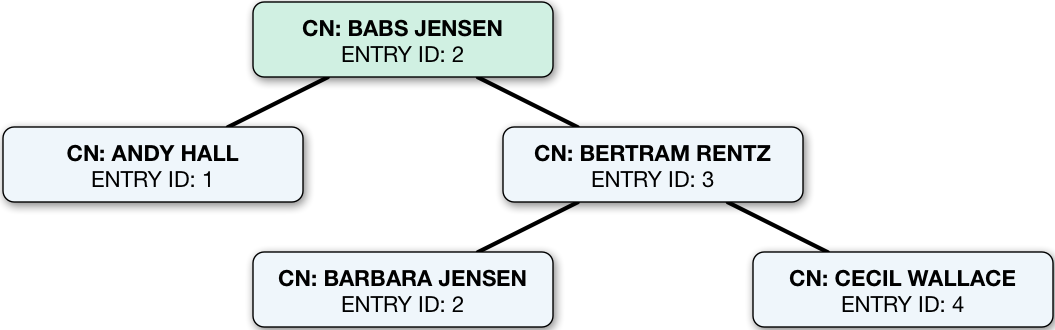

The figure that follows shows an equality (case ignore exact match) index with five keys from a total of four entries.

If the data set were large, there could be more than one entry ID per key:

How DS uses indexes

This example illustrates how DS uses an index.

When the search filter is (cn=Babs Jensen), DS retrieves the IDs for entries whose CN matches Babs Jensen by looking them up in the equality index of the CN attribute. (For a complex filter, it might optimize the search by changing the order in which it uses the indexes.) A successful result is zero or more entry IDs. These are the candidate result entries.

For each candidate, DS retrieves the entry by ID from a system index called id2entry. As its name suggests, this index returns an entry for an entry ID. If there is a match, and the client application has the right to access the data, DS returns the search result. It continues this process until no candidates are left.
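The lookup described above can be sketched with plain Python dictionaries: one mapping normalized keys to lists of entry IDs, and one standing in for id2entry. This is a conceptual model only. The data structures, function names, and the uid=scarter sample entry are hypothetical, and access control checks are left out.

```python
# Hypothetical sample data: entry ID -> entry (a stand-in for the id2entry index).
id2entry = {
    1: {"dn": "uid=bjensen,ou=People,dc=example,dc=com",
        "cn": ["Barbara Jensen", "Babs Jensen"]},
    2: {"dn": "uid=scarter,ou=People,dc=example,dc=com",
        "cn": ["Sam Carter"]},
}


def build_equality_index(entries, attribute):
    """Map each normalized attribute value (the key) to a sorted list of entry IDs."""
    index = {}
    for entry_id, entry in entries.items():
        for value in entry.get(attribute, []):
            index.setdefault(value.lower(), []).append(entry_id)
    return {key: sorted(ids) for key, ids in index.items()}


def search_equal(index, entries, attribute, assertion):
    """Look up candidate IDs in the index, then fetch and re-check each entry."""
    results = []
    for entry_id in index.get(assertion.lower(), []):
        entry = entries[entry_id]  # retrieve the candidate by ID
        if any(v.lower() == assertion.lower() for v in entry.get(attribute, [])):
            results.append(entry["dn"])
    return results


cn_index = build_equality_index(id2entry, "cn")
print(cn_index["babs jensen"])  # [1]
print(search_equal(cn_index, id2entry, "cn", "Babs Jensen"))
```

The index lookup returns candidate IDs without reading any entries. Only the candidates are then fetched and checked, which is why an indexed search avoids scanning every entry in scope.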

Unindexed searches

If there are no indexes that correspond to a search request, DS must check for a match against every entry in the scope of the search. Evaluating every entry for a match is referred to as an unindexed search.

An unindexed search is an expensive operation, particularly for large directories.

A server refuses unindexed searches unless the user has specific permission to make such requests.

The permission to perform an unindexed search is granted with the unindexed-search privilege.

This privilege is reserved for the directory superuser by default.

It should not be granted lightly.

If the number of entries is smaller than the default resource limits, you can still perform what appear to be unindexed searches, meaning searches with filters for which no index appears to exist. That is because the dn2id index returns all user data entries without hitting a resource limit that would make the search unindexed.

Use cases that may call for unindexed searches include the following:

-

An application must periodically retrieve a very large amount of directory data all at once through an LDAP search.

For example, an application performs an LDAP search to retrieve everything in the directory once a week as part of a batch job that runs during off hours.

Make sure the application has no resource limits. For details, see Resource limits.

-

A directory data administrator occasionally browses directory data through a graphical UI without initially knowing what they are looking for or how to narrow the search.

Big indexes let you work around this problem. They facilitate searches where large numbers of entries match. For example, big indexes can help when paging through all the employees in a large company, or all the users in the state of California. For details, see Big index and Indexes for attributes with few unique values.

Alternatively, DS directory servers can use an appropriately configured VLV index to sort results for an unindexed search. For details, see VLV for paged server-side sort.

Index updates

When an entry is added, changed, or deleted, the directory server updates each affected index to reflect the change. This happens while the server is online, and has a cost. This cost is the reason to maintain only those indexes that are used.

DS only updates indexes for the attributes that change. Updating an unindexed attribute is therefore faster than updating an indexed attribute.

What to index

DS directory server search performance depends on indexes. The default settings are fine for evaluating DS software, and they work well with sample data. The default settings do not necessarily fit your directory data, and the searches your applications perform:

-

Configure necessary indexes for the searches you anticipate.

-

Let DS optimize search queries to use whatever indexes are available.

DS servers may use a presence index when an equality index is not available, for example.

-

Use metrics and index debugging to check that searches use indexes and are optimized.

You cannot configure the optimizations DS servers perform. You can, however, review search metrics, logs, and search debugging data to verify that searches use indexes and are optimized.

-

Monitor DS servers for indexes that are not used.

Necessary indexes

Index maintenance has its costs. Every time an indexed attribute is updated, the server must update each affected index to reflect the change. This is wasteful if the index is not used. Indexes, especially substring indexes, can occupy more memory and disk space than the corresponding data.

Aim to maintain only indexes that speed up appropriate searches, and that allow the server to operate properly. The former indexes depend on how directory users search, and require thought and investigation. The latter include non-configurable internal indexes that should not change.

Begin by reviewing the attributes of your directory data. Which attributes would you expect to see in a search filter? If an attribute is going to show up frequently in reasonable search filters, then index it.

Compare your guesses with what you see actually happening in the directory.

Unindexed searches

Directory users might complain their searches fail because they’re unindexed. By default, DS directory servers reject unindexed searches with a result code of 50 and additional information about the unindexed search.

The following example attempts, binding as uid=user.0, to get the entries for all users whose email address ends in .com:

$ ldapsearch \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=user.0,ou=People,dc=example,dc=com \

--bindPassword password \

--baseDN ou=people,dc=example,dc=com \

"(&(mail=*.com)(objectclass=person))"

# The LDAP search request failed: 50 (Insufficient Access Rights)

# Additional Information: You do not have sufficient privileges to perform an unindexed search

If they’re unintentionally requesting an unindexed search, suggest ways to perform an indexed search instead. Perhaps the application needs a better search filter. Perhaps it requests more results than necessary. For example, a GUI application lets a user browse directory entries. The application could page through the results, rather than attempting to retrieve all the entries at once. To let the user page back and forth through the results, you could add a browsing (VLV) index for the application to get the entries for the current screen.

An application might have a good reason to get the full list of all entries in one operation. If so, assign the application’s account the unindexed-search privilege. Consider other options before you grant the privilege, however. Unindexed searches cause performance problems for concurrent directory operations.

When an application has the privilege or binds with directory superuser credentials (by default, the uid=admin DN and password), DS does not reject its request for an unindexed search.

Check for unindexed searches using the ds-mon-backend-filter-unindexed monitoring attribute:

$ ldapsearch \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=monitor \

--bindPassword password \

--baseDN cn=monitor \

"(objectClass=ds-monitor-backend-db)" ds-mon-backend-filter-unindexed

If ds-mon-backend-filter-unindexed is greater than zero, review the access log for unexpected unindexed searches.

The following example shows the relevant fields in an access log message:

{

"request": {

"protocol": "LDAP",

"operation": "SEARCH"

},

"response": {

"detail": "You do not have sufficient privileges to perform an unindexed search",

"additionalItems": {

"unindexed": null

}

}

}

Beyond the key fields shown in the example, messages in the access log also specify the search filter and scope. Understand the operation that led to each unindexed search. If the filter is appropriate and often used, add an index to ease the search. Either analyze the access logs to find how often operations use the search filter or monitor operations with the index analysis feature, described in Index analysis metrics.
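One way to start analyzing access logs is to count the search records that carry the unindexed item. The following Python sketch assumes the access log holds one JSON object per line and relies only on the fields shown in the example message above. The sample lines are made up for illustration, and real messages contain more fields.

```python
import json


def is_unindexed_search(record: dict) -> bool:
    """True for SEARCH records whose response carries the "unindexed" item."""
    request = record.get("request", {})
    additional = record.get("response", {}).get("additionalItems") or {}
    return request.get("operation") == "SEARCH" and "unindexed" in additional


# Sample lines modeled on the fields shown above (illustrative, not real log output).
log_lines = [
    '{"request": {"protocol": "LDAP", "operation": "SEARCH"}, '
    '"response": {"additionalItems": {"unindexed": null}}}',
    '{"request": {"protocol": "LDAP", "operation": "SEARCH"}, '
    '"response": {"additionalItems": {}}}',
    '{"request": {"protocol": "LDAP", "operation": "BIND"}, "response": {}}',
]

records = [json.loads(line) for line in log_lines]
unindexed = [record for record in records if is_unindexed_search(record)]
print(len(unindexed))  # 1
```

The check tests for the presence of the unindexed key rather than its value, because the example message shows the value as null.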

In addition to responding to client search requests, a server performs internal searches. Internal searches let the server retrieve data needed for a request, and maintain internal state information. Sometimes, internal searches become unindexed. When this happens, the server logs a warning similar to the following:

The server is performing an unindexed internal search request with base DN '%s', scope '%s', and filter '%s'. Unindexed internal searches are usually unexpected and could impact performance. Please verify that that backend's indexes are configured correctly for these search parameters.

When you see a message like this in the server log, take these actions:

-

Figure out which indexes are missing, and add them.

For details, see Index analysis metrics, Debug search indexes, and Configure indexes.

-

Check the integrity of the indexes.

For details, see Verify indexes.

-

If the relevant indexes exist, and you have verified that they are sound, the index entry limit might be too low.

This can happen, for example, in directory servers with more than 4000 groups in a single backend. For details, see Index entry limits.

-

If you have made the changes described in the steps above, and the problem persists, contact technical support.

Index analysis metrics

DS servers provide the index analysis feature to collect information about filters in search requests. This feature is useful, but keeping it enabled on production servers is not recommended, as DS maintains the metrics in memory.

You can activate the index analysis mechanism using the dsconfig set-backend-prop command:

$ dsconfig \

set-backend-prop \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--backend-name dsEvaluation \

--set index-filter-analyzer-enabled:true \

--no-prompt \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin

The command causes the server to analyze filters used, and to keep the results in memory. You can read the results as monitoring information:

$ ldapsearch \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=admin \

--bindPassword password \

--baseDN ds-cfg-backend-id=dsEvaluation,cn=Backends,cn=monitor \

--searchScope base \

"(&)" \

ds-mon-backend-filter-use-start-time ds-mon-backend-filter-use-indexed ds-mon-backend-filter-use-unindexed ds-mon-backend-filter-use

dn: ds-cfg-backend-id=dsEvaluation,cn=backends,cn=monitor

ds-mon-backend-filter-use-start-time: <timestamp>

ds-mon-backend-filter-use-indexed: 2

ds-mon-backend-filter-use-unindexed: 3

ds-mon-backend-filter-use: {"search-filter":"(employeenumber=86182)","nb-hits":1,"latest-failure-reason":"caseIgnoreMatch index type is disabled for the employeeNumber attribute"}The ds-mon-backend-filter-use values include the following fields:

search-filter

The LDAP search filter.

nb-hits

The number of times the filter was used.

latest-failure-reason

A message describing why the server could not use any index for this filter.

The output can include filters for internal use, such as (aci=*).

In the example above, you see a filter used by a client application.

In the example, the search filter (employeenumber=86182) led to an unindexed search

because the "caseIgnoreMatch index type is disabled for the employeeNumber attribute".

Some client application has tried to find users by employee number, but no index exists for that purpose.

If this search appears frequently, add an employee number index.
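When the monitoring output contains many ds-mon-backend-filter-use values, a short script can help rank them. The following Python sketch is illustrative only: the function names parse_filter_use and frequent_unindexed are hypothetical, the sample text is copied from the output shown earlier, and the parser assumes each value fits on one unfolded, non-base64 LDIF line. It treats any value that carries a latest-failure-reason as a filter the server could not index.

```python
import json

# Sample monitoring output, copied from the ldapsearch example in this section.
SAMPLE_LDIF = """\
dn: ds-cfg-backend-id=dsEvaluation,cn=backends,cn=monitor
ds-mon-backend-filter-use-indexed: 2
ds-mon-backend-filter-use-unindexed: 3
ds-mon-backend-filter-use: {"search-filter":"(employeenumber=86182)","nb-hits":1,"latest-failure-reason":"caseIgnoreMatch index type is disabled for the employeeNumber attribute"}
"""

PREFIX = "ds-mon-backend-filter-use: "


def parse_filter_use(ldif_text):
    """Decode every ds-mon-backend-filter-use value found in the LDIF text.

    Assumption: values are plain (not base64) and not folded across lines.
    """
    records = []
    for line in ldif_text.splitlines():
        line = line.strip()
        # The trailing ": " keeps -indexed, -unindexed, and -start-time out.
        if line.startswith(PREFIX):
            records.append(json.loads(line[len(PREFIX):]))
    return records


def frequent_unindexed(records, min_hits=1):
    """Return filters with a failure reason, most frequently used first."""
    failing = [
        r for r in records
        if r.get("latest-failure-reason") and r.get("nb-hits", 0) >= min_hits
    ]
    return sorted(failing, key=lambda r: r["nb-hits"], reverse=True)


records = parse_filter_use(SAMPLE_LDIF)
for record in frequent_unindexed(records):
    print(record["nb-hits"], record["search-filter"], record["latest-failure-reason"])
```

Raising min_hits is one way to ignore one-off searches and focus on filters that appear often enough to justify a new index.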

To avoid impacting server performance, turn off index analysis after you collect the information you need.

Use the dsconfig set-backend-prop command:

$ dsconfig \

set-backend-prop \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--backend-name dsEvaluation \

--set index-filter-analyzer-enabled:false \

--no-prompt \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin

Debug search indexes

Sometimes it is not obvious by inspection how a directory server processes a given search request.

The directory superuser can gain insight with the debugsearchindex attribute.

The default global access control prevents users from reading the debugsearchindex attribute.

To allow an administrator to read the attribute, add a global ACI such as the following:

$ dsconfig \

set-access-control-handler-prop \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--add global-aci:"(targetattr=\"debugsearchindex\")(version 3.0; acl \"Debug search indexes\"; \

allow (read,search,compare) userdn=\"ldap:///uid=user.0,ou=people,dc=example,dc=com\";)" \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--no-prompt

The debugsearchindex values are intended to be read by human beings, not scripts.

The debugsearchindex attribute value indicates how the server would process the search.

The server uses its indexes to prepare a set of candidate entries.

It iterates through the set to compare candidates with the search filter, returning entries that match.

The following example demonstrates this feature for a subtree search with a complex filter:

$ ldapsearch \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--bindDN uid=user.0,ou=people,dc=example,dc=com \

--bindPassword password \

--baseDN dc=example,dc=com \

"(&(objectclass=person)(givenName=aa*))" \

debugsearchindex | sed -n -e "s/^debugsearchindex: //p"

{

"baseDn": "dc=example,dc=com",

"scope": "sub",

"filter": "(&(givenName=aa*)(objectclass=person))",

"maxCandidateSize": 100000,

"strategies": [

{

"name": "BaseObjectSearchStrategy",

"diagnostic": "not applicable"

},

{

"name": "VlvSearchStrategy",

"diagnostic": "not applicable"

},

{

"name": "AttributeIndexSearchStrategy",

"filter": {

"query": "INTERSECTION",

"rank": "RANGE_MATCH",

"filter": "(&(givenName=aa*)(objectclass=person))",

"subQueries": [

{

"query": "ANY_OF",

"rank": "RANGE_MATCH",

"filter": "(givenName=aa*)",

"subQueries": [

{

"query": "ANY_OF",

"rank": "RANGE_MATCH",

"filter": "(givenName=aa*)",

"subQueries": [

{

"query": "RANGE_MATCH",

"rank": "RANGE_MATCH",

"index": "givenName.caseIgnoreMatch",

"range": "[aa,ab[",

"diagnostic": "indexed",

"candidates": 50

},

{

"query": "RANGE_MATCH",

"rank": "RANGE_MATCH",

"index": "givenName.caseIgnoreSubstringsMatch:6",

"range": "[aa,ab[",

"diagnostic": "skipped"

}

],

"diagnostic": "indexed",

"candidates": 50

},

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(givenName=aa*)",

"index": "givenName.presence",

"diagnostic": "skipped"

}

],

"diagnostic": "indexed",

"candidates": 50,

"retained": 50

},

{

"query": "ANY_OF",

"rank": "OBJECT_CLASS_EQUALITY_MATCH",

"filter": "(objectclass=person)",

"subQueries": [

{

"query": "OBJECT_CLASS_EQUALITY_MATCH",

"rank": "OBJECT_CLASS_EQUALITY_MATCH",

"filter": "(objectclass=person)",

"subQueries": [

{

"query": "EXACT_MATCH",

"rank": "EXACT_MATCH",

"index": "objectClass.objectIdentifierMatch",

"key": "person",

"diagnostic": "not indexed",

"candidates": "[LIMIT-EXCEEDED]"

},

{

"query": "EXACT_MATCH",

"rank": "EXACT_MATCH",

"index": "objectClass.objectIdentifierMatch",

"key": "2.5.6.6",

"diagnostic": "skipped"

}

],

"diagnostic": "not indexed",

"candidates": "[LIMIT-EXCEEDED]"

},

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(objectclass=person)",

"index": "objectClass.presence",

"diagnostic": "skipped"

}

],

"diagnostic": "not indexed",

"candidates": "[LIMIT-EXCEEDED]",

"retained": 50

}

],

"diagnostic": "indexed",

"candidates": 50

},

"scope": {

"type": "sub",

"diagnostic": "not indexed",

"candidates": "[NOT-INDEXED]",

"retained": 50

},

"diagnostic": "indexed",

"candidates": 50

}

],

"final": 50

}

The filter in the example matches person entries whose given name starts with aa.

The search scope is not explicitly specified, so the scope defaults to the subtree including the base DN.

Notice that the debugsearchindex value has the following top-level fields:

-

(Optional) "vlv" describes how the server uses VLV indexes. The VLV field is not applicable for this example, and so is not present.

-

"filter" describes how the server uses the search filter to narrow the set of candidates.

-

"scope" describes how the server uses the search scope.

-

"final" indicates the final number of candidates in the set.

In the output, notice that the server uses the equality and substring indexes to find candidate entries

whose given name starts with aa.

If the filter indicated given names containing aa, as in givenName=*aa*,

the server would rely only on the substring index.
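The "range": "[aa,ab[" field in the output reads like a half-open interval of index keys: from aa inclusive up to, but not including, ab. The following Python sketch shows how such a range can select every key with a given prefix from a sorted key list. It is a toy illustration, not the DS implementation; the function names prefix_range and keys_with_prefix and the sample keys are hypothetical.

```python
import bisect


def prefix_range(prefix):
    """Return (lower, upper) so that lower <= key < upper for keys with prefix.

    Assumption: the last character of the prefix is not the highest code point,
    so incrementing it yields a valid upper bound.
    """
    if not prefix:
        raise ValueError("prefix must not be empty")
    upper = prefix[:-1] + chr(ord(prefix[-1]) + 1)
    return prefix, upper


def keys_with_prefix(sorted_keys, prefix):
    """Select keys in the half-open range [lower, upper) with two binary searches."""
    lower, upper = prefix_range(prefix)
    start = bisect.bisect_left(sorted_keys, lower)
    end = bisect.bisect_left(sorted_keys, upper)
    return sorted_keys[start:end]


# Hypothetical, already normalized (lowercase) and sorted index keys.
keys = ["aaliyah", "aaron", "abby", "bob"]
print(prefix_range("aa"))
print(keys_with_prefix(keys, "aa"))
```

Because the keys are sorted, the lookup touches only the keys inside the range, which is why an initial-substring filter such as (givenName=aa*) can be served from an ordered index.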

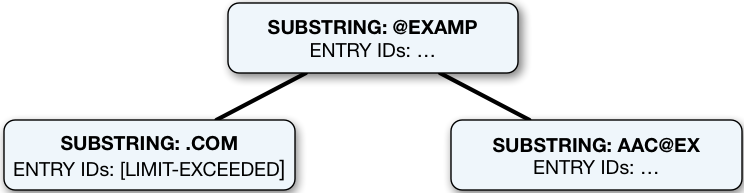

Notice that the output for the (objectclass=person) portion of the filter shows "candidates": "[LIMIT-EXCEEDED]".

In this case, there are so many entries matching the value specified that the index is not useful

for narrowing the set of candidates.

The scope is also not useful for narrowing the set of candidates.

Ultimately, however, the givenName indexes help the server to narrow the set of candidates.

The overall search is indexed and the result is 50 matching entries.
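The reasoning above can be sketched with a toy model in Python. This is a simplified illustration under stated assumptions, not how DS is implemented: indexes are plain dictionaries, None stands for a key whose entry ID list exceeded the limit, and all names (build_index, exact, starts_with, intersect, search, ENTRIES) are hypothetical. It shows how an AND filter stays indexed as long as one component yields a bounded candidate set, and how the server still checks each candidate against the full filter.

```python
# Toy directory: entry ID -> attributes.
ENTRIES = {
    1: {"givenName": "Aaron", "objectClass": "person"},
    2: {"givenName": "Aaliyah", "objectClass": "person"},
    3: {"givenName": "Bob", "objectClass": "person"},
    4: {"givenName": "Carol", "objectClass": "person"},
    5: {"givenName": "Aaren", "objectClass": "device"},
}


def build_index(entries, attr):
    """Map each normalized attribute value to the set of entry IDs holding it."""
    index = {}
    for entry_id, entry in entries.items():
        index.setdefault(entry[attr].lower(), set()).add(entry_id)
    return index


def exact(index, key, entry_limit):
    """Return the IDs for one key, or None when the key exceeds the limit."""
    ids = index.get(key.lower(), set())
    return None if len(ids) > entry_limit else set(ids)


def starts_with(index, prefix, entry_limit):
    """Union the IDs of all keys with the prefix, or None if any key is over the limit."""
    ids = set()
    for key, key_ids in index.items():
        if key.startswith(prefix.lower()):
            if len(key_ids) > entry_limit:
                return None
            ids |= key_ids
    return ids


def intersect(candidate_sets):
    """AND the bounded sets; unbounded (None) components cannot narrow the result."""
    bounded = [s for s in candidate_sets if s is not None]
    if not bounded:
        return None  # nothing was indexed, so the whole filter is unindexed
    result = set(bounded[0])
    for other in bounded[1:]:
        result &= other
    return result


def search(entries, prefix, object_class, entry_limit):
    """Evaluate (&(objectclass=...)(givenName=prefix*)) using candidates, then verify."""
    candidates = intersect([
        starts_with(build_index(entries, "givenName"), prefix, entry_limit),
        exact(build_index(entries, "objectClass"), object_class, entry_limit),
    ])
    if candidates is None:
        candidates = set(entries)  # unindexed: every entry must be examined
    return sorted(
        i for i in candidates
        if entries[i]["givenName"].lower().startswith(prefix.lower())
        and entries[i]["objectClass"].lower() == object_class.lower()
    )


print(search(ENTRIES, "aa", "person", entry_limit=3))
```

With an entry limit of 3, the person key is over the limit and contributes nothing, yet the givenName candidates keep the search bounded; the final check then drops the entry that is not a person.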

The following example shows a subtree search for accounts with initials starting either with aa or with zz:

$ ldapsearch \

--hostname localhost \

--port 1636 \

--useSsl \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--baseDN dc=example,dc=com \

--bindDN uid=user.0,ou=people,dc=example,dc=com \

--bindPassword password \

"(|(initials=aa*)(initials=zz*))" \

debugsearchindex | sed -n -e "s/^debugsearchindex: //p"

{

"baseDn": "dc=example,dc=com",

"scope": "sub",

"filter": "(|(initials=aa*)(initials=zz*))",

"maxCandidateSize": 100000,

"strategies": [

{

"name": "BaseObjectSearchStrategy",

"diagnostic": "not applicable"

},

{

"name": "VlvSearchStrategy",

"diagnostic": "not applicable"

},

{

"name": "AttributeIndexSearchStrategy",

"filter": {

"query": "UNION",

"rank": "MATCH_ALL",

"filter": "(|(initials=aa*)(initials=zz*))",

"subQueries": [

{

"query": "ANY_OF",

"rank": "MATCH_ALL",

"filter": "(initials=aa*)",

"subQueries": [

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(initials=aa*)",

"index": "initials.presence",

"diagnostic": "not indexed"

},

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(initials=aa*)",

"index": "initials.presence",

"diagnostic": "not indexed"

}

],

"diagnostic": "not indexed"

},

{

"query": "ANY_OF",

"rank": "MATCH_ALL",

"filter": "(initials=zz*)",

"subQueries": [

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(initials=zz*)",

"index": "initials.presence",

"diagnostic": "skipped"

},

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(initials=zz*)",

"index": "initials.presence",

"diagnostic": "skipped"

}

],

"diagnostic": "skipped"

}

],

"diagnostic": "not indexed"

},

"scope": {

"type": "sub",

"diagnostic": "not indexed",

"candidates": "[NOT-INDEXED]",

"retained": "[NOT-INDEXED]"

},

"diagnostic": "not indexed",

"candidates": "[NOT-INDEXED]"

},

{

"name": "BigIndexSearchStrategy",

"filter": {

"query": "UNION",

"rank": "MATCH_ALL",

"filter": "(|(initials=aa*)(initials=zz*))",

"subQueries": [

{

"query": "ANY_OF",

"rank": "MATCH_ALL",

"filter": "(initials=aa*)",

"subQueries": [

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(initials=aa*)",

"index": "initials.big.presence",

"diagnostic": "not supported"

},

{

"query": "MATCH_ALL",

"rank": "MATCH_ALL",

"filter": "(initials=aa*)",

"index": "initials.big.presence",

"diagnostic": "not supported"

}

],

"diagnostic": "not indexed"

}

],

"diagnostic": "not indexed"

},

"diagnostic": "not indexed"

}

],

"final": "[NOT-INDEXED]"

}

As shown in the output, the search is not indexed. To fix this, index the initials attribute:

$ dsconfig \

create-backend-index \

--hostname localhost \

--port 4444 \

--bindDN uid=admin \

--bindPassword password \

--backend-name dsEvaluation \

--index-name initials \

--set index-type:equality \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin \

--no-prompt

$ rebuild-index \

--hostname localhost \

--port 4444 \

--bindDn uid=admin \

--bindPassword password \

--baseDn dc=example,dc=com \

--index initials \

--usePkcs12TrustStore /path/to/opendj/config/keystore \

--trustStorePassword:file /path/to/opendj/config/keystore.pin

After configuring and building the new index, try the same search again:

$ ldapsearch \

--hostname localhost \

--port 1636 \

--useSsl \