Synchronization

Configure synchronization between ForgeRock® Identity Management and other resources.

Synchronizing identity data between resources is one of the core services of ForgeRock Identity Management (IDM). In this guide, you will learn about the different types of synchronization, and how to configure the flexible synchronization mechanism. This guide is written for systems integrators building solutions based on ForgeRock Identity Management services.

Synchronization overview

Understand synchronization types and configuration.

Mappings

Map data between resources.

Situations and actions

Learn about synchronization situations and how to configure actions for each.

Filter synchronization data

Use filtering mechanisms to limit the synchronized data.

Implicit sync and liveSync

Configure automatic synchronization between resources.

Reconciliation performance

Learn about ways to improve reconciliation performance.

ForgeRock Identity Platform™ serves as the basis for our simple and comprehensive Identity and Access Management solution. We help our customers deepen their relationships with their customers, and improve the productivity and connectivity of their employees and partners. For more information about ForgeRock and about the platform, refer to https://www.forgerock.com.

The ForgeRock Common REST API works across the platform to provide common ways to access web resources and collections of resources.

Synchronization overview

Synchronization keeps data consistent across disparate resources. Within IDM, we refer to two resource types—managed resources (stored in the IDM repository) and external resources. An external resource can be any system that holds identity data, such as ForgeRock Directory Services (DS), Active Directory, a CSV file, a JDBC database, and so on.

IDM connects to external resources through connectors. Synchronization across resources happens when managed resources change or when IDM discovers a change on a system resource. There are various synchronization mechanisms that ensure data consistency.

Synchronization types

-

IDM discovers and synchronizes changes from external resources by using reconciliation and liveSync.

-

IDM synchronizes changes made to managed resources by using reconciliation and implicit synchronization.

- Reconciliation

-

Reconciliation is the process of ensuring that the objects in two different data stores are consistent. Traditionally, reconciliation applies mainly to user objects, but IDM can reconcile any objects, such as groups, roles, and devices.

In any reconciliation operation, there is a source system (the system that contains the changes) and a target system (the system to which the changes will be propagated). The source and target system are defined in a mapping. The IDM repository can be either the source or the target in a mapping. You can configure multiple mappings for one IDM instance, depending on the external resources to which you are connecting.

To perform reconciliation, IDM analyzes both the source system and the target system, to discover the differences between them. Reconciliation can therefore be a heavyweight process. When working with large data sets, finding all changes can be more work than processing the changes.

Reconciliation is very thorough. It recognizes system error conditions and catches changes that might be missed by liveSync, and therefore serves as the basis for compliance and reporting.

- LiveSync

-

LiveSync captures the changes that occur on an external system, and pushes those changes to IDM. IDM uses any defined mappings to replay those changes where they are required—to its managed objects, to another remote system, or to both. Unlike reconciliation, liveSync uses a polling system, and is intended to react quickly to changes as they happen.

To perform this polling, liveSync relies on a change detection mechanism on the external resource to determine which objects have changed. The change detection mechanism is specific to the external resource, and can be a time stamp, a sequence number, a change vector, or any other method of recording changes that have occurred on the system. For example, ForgeRock Directory Services (DS) implements a change log that provides IDM with a list of objects that have changed since the last request. Active Directory implements a change sequence number, and certain databases might have a

lastChangeattribute. - Implicit synchronization

-

Implicit synchronization automatically pushes changes that are made to IDM managed objects out to external systems.

For direct changes to managed objects, IDM immediately synchronizes those changes to all mappings configured to use those objects as their source. A direct change can originate not only as a write request through the REST interface, but also as an update resulting from reconciliation with another resource.

Implicit synchronization only synchronizes changed objects to external resources. To synchronize a complete data set, you must run a reconciliation operation. The entire changed object is synchronized. If you want to synchronize only the attributes that have changed, you can modify the

onUpdatescript in your mapping to compare attribute values before pushing changes.

Synchronization configuration overview

This section describes the high-level steps required to set up synchronization between two resources. A basic synchronization configuration involves the following steps:

-

Set up a connection between the source and target resource.

A connector configuration references a specific connector type and indicates the connection details of the external resource. You must define a connector configuration for each external resource to which you are connecting.

For more information, refer to Connections between resources.

-

Map source objects to target objects.

The mapping configuration your project’s

conf/sync.jsonfile or in individual mapping files. Mappings are synchronized in the order in which they are specified in thesync.jsonfile. If there are multiple mapping files, thesyncAfterproperty dictates the order in which they are processed.For more information, refer to Resource mapping.

-

Configure any scripts that are required to check source and target objects, and to manipulate attributes.

-

In addition to these configuration elements, IDM stores a

linkstable in its repository. The links table maintains a record of relationships established between source and target objects.

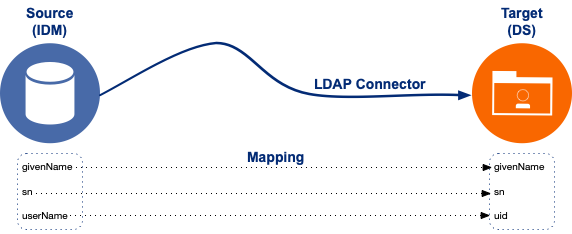

The following diagram illustrates the high-level synchronization configuration:

Data mapping model

IDM uses mappings to determine which data to synchronize, and how that data must be synchronized.

In general, identity management software implements one of the following data models:

-

A meta-directory data model, where all data are mirrored in a central repository.

The meta-directory model offers fast access at the risk of getting outdated data.

-

A virtual data model, where only a minimum set of attributes are stored centrally, and most are loaded on demand from the external resources in which they are stored.

The virtual model guarantees fresh data, but pays for that guarantee in terms of performance.

IDM leaves the data model choice up to you. You determine the right trade-offs for a particular deployment. IDM does not hard code any particular schema or set of attributes stored in the repository. Instead, you define how external system objects map onto managed objects, and IDM dynamically updates the repository to store the managed object attributes that you configure.

Connections between resources

A connector lets you transfer data between different resource systems. The connector configuration works in conjunction with the synchronization mapping and specifies how target object attributes map to attributes on external objects.

Connector configuration files exist in your project’s conf directory and are named provisioner.resource-name.json, where resource-name reflects the connector technology and the external resource. For example, openicf-csv.

To create and modify connector configurations, use one of the following methods:

Configure connectors using the admin UI

From the navigation bar, click Configure > Connectors, and do one of the following.

-

Select an existing connector to modify.

-

Click New Connector, and configure the new connector.

Edit connector configuration files

IDM provides a number of sample provisioner files in the path/to/openidm/samples/example-configurations/provisioners directory. To modify connector configuration files directly, edit one of the sample provisioner files that corresponds to the resource to which you are connecting.

The following excerpt of an example LDAP connector configuration shows the attributes of an account object type. In the attribute mapping definitions, the attribute name is mapped from the IDM managed object to the nativeName (the attribute name used on the external resource). The lastName attribute in IDM is mapped to the sn attribute in LDAP. The homePhone attribute is defined as an array, because it can have multiple values:

{

...

"objectTypes": {

"account": {

"lastName": {

"type": "string",

"required": true,

"nativeName": "sn",

"nativeType": "string"

},

"homePhone": {

"type": "array",

"items": {

"type": "string",

"nativeType": "string"

},

"nativeName": "homePhone",

"nativeType": "string"

}

}

}

}For IDM to access external resource objects and attributes, the object and its attributes must match the connector configuration. Note that the connector file only maps IDM managed objects and attributes to their counterparts on the external resource. To construct attributes and to manipulate their values, you use a synchronization mapping, described in Resource mapping.

Configure connectors using REST

Create connector configurations using REST with the createCoreConfig and createFullConfig actions. For more information, refer to Configure Connectors Using REST.

Resource mapping

A synchronization mapping specifies a relationship between objects and their attributes in two data stores. The following example shows a typical attribute mapping, between objects in an external LDAP directory and an IDM managed user data store:

"source": "lastName", "target": "sn"

In this case, the lastName source attribute is mapped to the sn (surname) attribute in the target LDAP directory.

The core synchronization configuration is defined in the mapping configuration.

You can define a single file with all your mappings (conf/sync.json), or a separate file per mapping. Individual mapping files are named mapping-mappingName.json; for example, mapping-managedUser_systemCsvfileAccounts.json. Individual mapping files can be useful if your deployment includes many mappings that are difficult to manage in a single file. You can also use a combination of individual mapping files and a monolithic sync.json

file, particularly if you are adding mappings to an existing deployment.

If you use a single sync.json

file, mappings are processed in the order in which they appear within that file. If you use multiple mapping files, mappings are processed according to the syncAfter property in the mapping. The following example indicates that this particular mapping must be processed after the managedUser_systemCsvfileAccount mapping:

"source" : "managed/user",

"target" : "system/csvfile/account",

"syncAfter" : [ "managedUser_systemCsvfileAccount" ],If you use a combination of sync.json and individual mapping files, the synchronization engine processes the mappings in sync.json first (in order), and then any mappings specified in the individual mapping files, according to the syncAfter property in each mapping.

For a list of all mappings, use the following request:

curl \ --header "X-OpenIDM-Username: openidm-admin" \ --header "X-OpenIDM-Password: openidm-admin" \ --header "Accept-API-Version: resource=1.0" \ --request GET \ "http://localhost:8080/openidm/sync/mappings?_queryFilter=true"

This call returns the mappings in the order in which they will be processed.

|

The admin UI only shows the mappings configured in the |

Mappings are always defined from a source resource to a target resource. To configure bidirectional synchronization, you must define two mappings. For example, to configure bidirectional synchronization between an LDAP server and an IDM repository, you would define the following two mappings:

-

LDAP Server > IDM Repository

-

IDM Repository > LDAP Server

Bidirectional mappings can include a links property that lets you reuse the links established between objects, for both mappings. For more information, refer to Reuse Links Between Mappings.

You can update a mapping while the server is running. To avoid inconsistencies between data stores, do not update a mapping while a reconciliation is in progress for that mapping.

Configure a resource mapping

Objects in external resources are specified in a mapping as system/name/object-type, where name is the name used in the connector configuration, and object-type is the object defined in the connector configuration list of object types. Objects in the repository are specified in the mapping as managed/object-type, where object-type is defined in the managed object configuration.

External resources, and IDM managed objects, can be the source or the target in a mapping. By convention, the mapping name is a string of the form source_target, as shown in the following example:

Basic LDAP mapping

{

"mappings": [

{

"name": "systemLdapAccounts_managedUser",

"source": "system/ldap/account",

"target": "managed/user",

"properties": [

{

"source": "lastName",

"target": "sn"

},

{

"source": "telephoneNumber",

"target": "telephoneNumber"

},

{

"target": "phoneExtension",

"default": "0047"

},

{

"source": "email",

"target": "mail",

"comment": "Set mail if non-empty.",

"condition": {

"type": "text/javascript",

"source": "(object.email != null)"

}

},

{

"source": "",

"target": "displayName",

"transform": {

"type": "text/javascript",

"source": "source.lastName +', ' + source.firstName;"

}

},

{

"source" : "uid",

"target" : "userName",

"condition" : "/linkQualifier eq \"user\""

}

},

]

}

]

}In this example, the name of the source is the external resource (ldap), and the target is IDM’s user repository; specifically, managed/user. The properties defined in the mapping correspond to attribute names that are defined in the IDM configuration. For example, the source attribute uid is defined in the ldap connector configuration file, rather than on the external resource itself.

Individual mapping files do not include a name property. The mapping name is taken from the file name. For example, the mapping shown in Basic LDAP Mapping would be in a file named mapping-systemLdapAccounts_managedUser.json, and start as follows:

{

"source": "system/ldap/account",

"target": "managed/user",

...

}Configure mappings using the admin UI

To set up a synchronization mapping using the admin UI:

-

From the navigation bar, click Configure > Mappings.

-

Click New Mapping.

-

On the New Mapping page, select a source and target resource from the configured resources at the bottom of the window, and click Create Mapping.

You can filter these resources to display only connector configurations or managed objects.

-

Select Add property on the Attributes grid to map a target property to its corresponding source property.

The Property list shows all configured properties on the target resource. If the target resource is specified in a connector configuration, the Property list shows all properties configured for this connector. If the target resource is a managed object, the Property list shows the list of properties (defined in the managed object configuration for that object).

-

Select Add Missing Required Properties to add all the properties that are configured as required on the target resource. You can then map these required properties individually.

-

Select Quick Mapping to show all source and target properties simultaneously. Drag a source property onto its corresponding target property, or vice versa. When you’re done, click Save.

-

-

To test your mapping configuration on a single source entry, click the Behaviors tab and scroll down to Single Record Reconciliation. Search for the entry to reconcile.

The UI displays a preview of the target entry after a reconciliation. You can then click Reconcile Selected Record to perform the reconciliation on that one source entry.

Remove a mapping

-

To remove a mapping, delete the corresponding section from your mapping configuration. If you have configured mappings in individual mapping files, delete the file associated with the mapping you want to remove.

-

To remove a mapping using the admin UI, select Configure > Mappings, and then click Delete under the mapping to remove.

|

If you delete a mapping using the admin UI, the |

Transform attributes using a mapping

You can use a mapping to define attribute transformations during synchronization. In the following sample mapping excerpt, the value of the displayName attribute on the target is set using a combination of the lastName and firstName attribute values from the source:

{

"source": "",

"target": "displayName",

"transform": {

"type": "text/javascript",

"source": "source.lastName +', ' + source.firstName;"

}

},For transformations, the source property is optional. However, a source object is only available if you specify the source property. Therefore, in order to use source.lastName and source.firstName to calculate the displayName, the example specifies "source" : "".

If you set "source" : "" (not specifying an attribute), the entire object is regarded as the source, and you must include the attribute name in the transformation script. For example, to transform the source username to lowercase, your script would be source.mail.toLowerCase();. If you do specify a source attribute (for example, "source" : "mail"), just that attribute is regarded as the source. In this case, the transformation script would be source.toLowerCase();.

Configure attribute transformation using the admin UI

-

From the navigation bar, click Configure > Mappings, and select a mapping.

-

Select the line with the target attribute value to set.

-

On the Transformation Script tab, select Javascript or Groovy, and enter the transformation as an Inline Script, or specify the path to the file containing your transformation script.

When you use the UI to map a property with an encrypted value, you are prompted to set up a transformation script to decrypt the value when that property is synchronized. The resulting mapping looks similar to the following, which shows the transformation of a user’s password property:

{

"target" : "userPassword",

"source" : "password",

"transform" : {

"type" : "text/javascript",

"globals" : { },

"source" : "openidm.decrypt(source);"

},

"condition" : {

"type" : "text/javascript",

"globals" : { },

"source" : "object.password != null"

}

}Default attribute values in a mapping

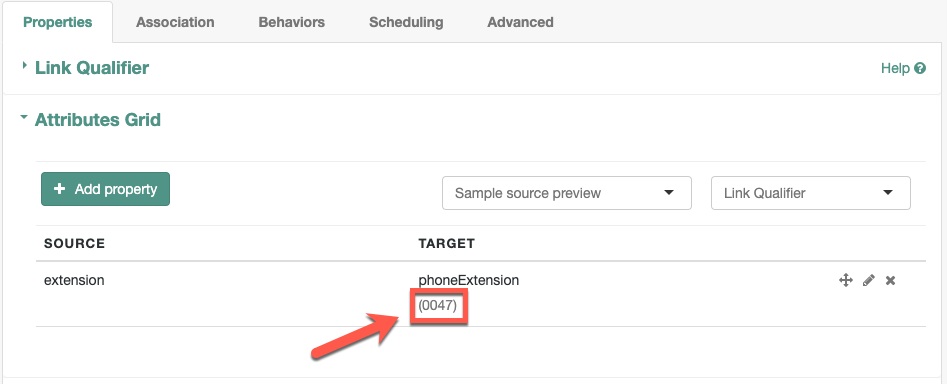

You can use a mapping to create attributes on the target resource. The following mapping excerpt creates a phoneExtension attribute with a default value of 0047 on the target object:

{

"target": "phoneExtension",

"default": "0047"

},The default property specifies a value to assign to the attribute on the target object. Before IDM determines the value of the target attribute, it evaluates any applicable conditions, followed by any transformation scripts. If the source property and the transform script yield a null value, IDM applies the default value in the create and update actions. The default value overrides the target value, if one exists.

Configure default attribute values using the admin UI

-

From the navigation bar, click Configure > Mappings, and click the mapping to edit.

-

Click the Properties tab.

-

Expand the Attributes Grid node, and click the Target property to edit.

-

In the Target Property: name window, click the Default Values tab, and add or edit the default values.

-

Click Save.

The default value displays in the Attributes Grid.

Scriptable conditions in a mapping

By default, IDM synchronizes all attributes in a mapping. For more complex relationships between source and target objects, you can define conditions under which IDM maps certain attributes. You can define two types of mapping conditions:

-

Scriptable conditions, in which an attribute is mapped only if the defined script evaluates to

true. -

Condition filters, a declarative filter that sets the conditions under which the attribute is mapped. Condition filters can include a link qualifier , that identifies the type of relationship between the source object and multiple target objects. For more information, refer to Map a Single Source Object to Multiple Target Objects.

The following list shows examples of condition filters:

-

"condition": "/object/country eq 'France'"—Only map the attribute if the object’scountryattribute equalsFrance. -

"condition": "/object/password pr"—Only map the attribute if the object’spasswordattribute is present. -

"condition": "/linkQualifier eq 'admin'"—Only map the attribute if the link between this source and target object is of typeadmin.

-

Configure mapping conditions using the admin UI

-

From the navigation bar, click Configure > Mappings, and click the mapping to edit.

-

Click the Properties tab.

-

Expand the Attributes Grid node, click the property to edit, click the Conditional Updates tab, and then do one of the following:

-

To configure a filtered condition, click Condition Filter.

-

To configure a scriptable condition, click Script.

-

-

Click Save.

Scriptable conditions create mapping logic, based on the result of the condition script. If the script does not return true, IDM does not manipulate the target attribute during a synchronization operation.

In the following excerpt, the value of the target mail attribute is set to the value of the source email attribute only if the source attribute is not empty:

{

"target": "mail",

"comment": "Set mail if non-empty.",

"source": "email",

"condition": {

"type": "text/javascript",

"source": "(object.email != null)"

}

...|

You can add comments to JSON files. This example includes a property named |

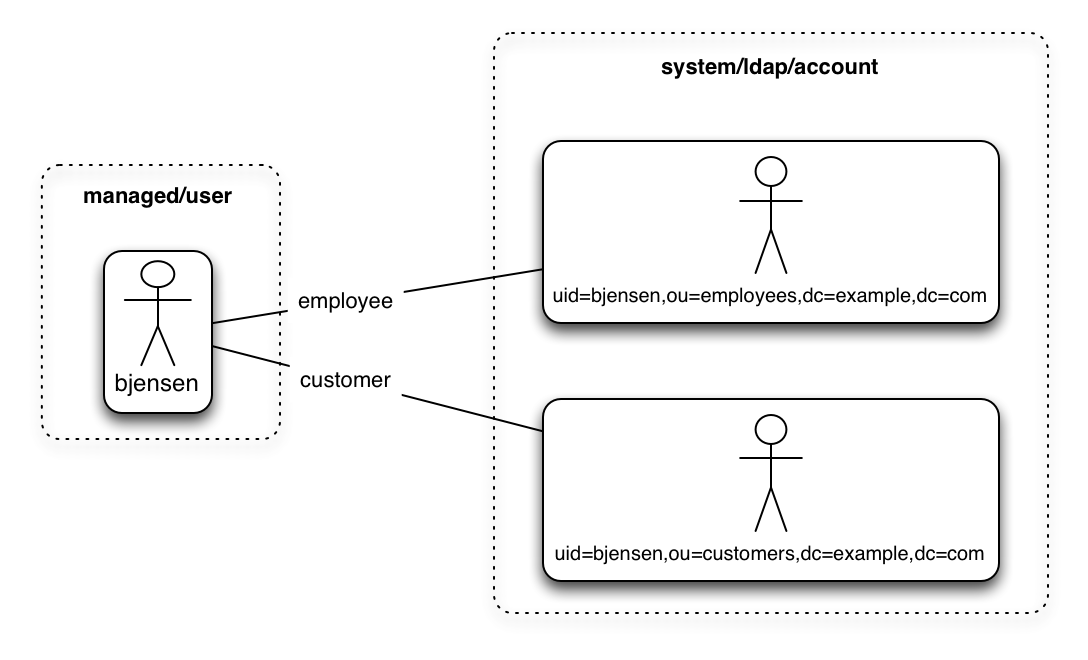

Map a single source object to multiple target objects

In certain cases, you might have a single object in a resource that maps to more than one object in another resource. For example, assume that managed user, bjensen, has two distinct accounts in an LDAP directory: an employee account (under uid=bjensen,ou=employees,dc=example,dc=com) and a customer account (under uid=bjensen,ou=customers,dc=example,dc=com). You want to map both of these LDAP accounts to the same managed user account.

IDM uses link qualifiers to manage this one-to-many scenario. A link qualifier is essentially a label that identifies the type of link (or relationship) between objects.

The following diagram shows two link qualifiers that let you link both of bjensen’s LDAP accounts to her managed user object:

|

The previous diagram displays that the link qualifier is a property of the link between the source and target object, and not a property of the source or target object itself. |

Link qualifiers are defined as part of the mapping. Each link qualifier must be unique within the mapping. If no link qualifier is specified (when only one possible matching target object exists), IDM uses a default link qualifier with the value default.

Link qualifiers can be defined as a static list, or dynamically, using a script. The following excerpt of a sample mapping shows the two static link qualifiers, employee and customer, described at the top of this topic:

{

"mappings": [

{

"name": "managedUser_systemLdapAccounts",

"source": "managed/user",

"target": "system/MyLDAP/account",

"linkQualifiers" : [ "employee", "customer" ],

...IDM evaluates the list of static link qualifiers for every source record. That is, every reconciliation processes all synchronization operations, for each link qualifier, in turn.

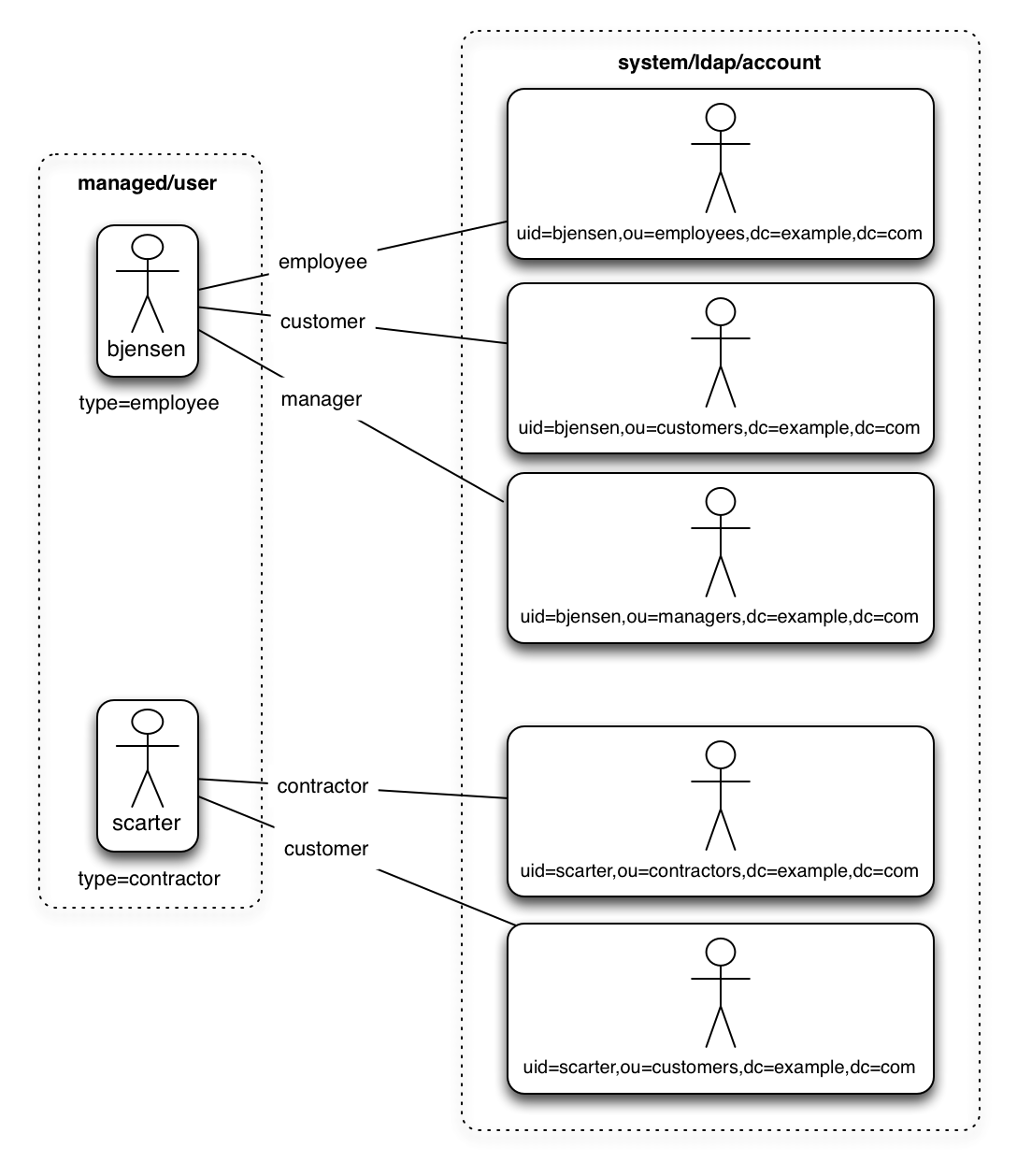

A dynamic link qualifier script returns a list of link qualifiers that can be applied to each source record. For example, suppose you have two types of managed users—employees and contractors. For employees, a single managed user (source) account can correlate with three different LDAP (target) accounts—employee, customer, and manager. For contractors, a single managed user account can correlate with only two separate LDAP accounts—contractor, and customer. The following diagram displays the possible linking situations for this scenario:

In this scenario, you could write a script to generate a dynamic list of link qualifiers, based on the managed user type. For employees, the script would return [employee, customer, manager] in its list of possible link qualifiers. For contractors, the script would return [contractor, customer] in its list of possible link qualifiers. A reconciliation operation would then process only the list of link qualifiers applicable to each source object.

If your source resource includes many records, you should use a dynamic link qualifier script instead of a static list of link qualifiers. Generating the list of applicable link qualifiers dynamically avoids unnecessary additional processing for those qualifiers that will never apply to specific source records. Therefore, synchronization performance is improved for large source data sets.

You can include a dynamic link qualifier script inline (using the source property), or by referencing a JavaScript or Groovy script file (using the file property). The following link qualifier script sets up the dynamic link qualifier lists described in the previous example.

|

In this example, the |

{

"mappings": [

{

"name": "managedUser_systemLdapAccounts",

"source": "managed/user",

"target": "system/MyLDAP/account",

"linkQualifiers" : {

"type" : "text/javascript",

"globals" : { },

"source" : "if (returnAll) {

['contractor', 'employee', 'customer', 'manager']

} else {

if(object.type === 'employee') {

['employee', 'customer', 'manager']

} else {

['contractor', 'customer']

}

}"

}

...To reference an external link qualifier script, provide a link to the file in the file property:

{

"mappings": [

{

"name": "managedUser_systemLdapAccounts",

"source": "managed/user",

"target": "system/MyLDAP/account",

"linkQualifiers" : {

"type" : "text/javascript",

"file" : "script/linkQualifiers.js"

}

...Dynamic link qualifier scripts must return all valid link qualifiers when the returnAll global variable is true. The returnAll variable is used during the target reconciliation phase to check whether there are any target records that are unassigned, for each known link qualifier.

If you configure dynamic link qualifiers through the UI, the complete list of dynamic link qualifiers displays in the Generated Link Qualifiers item below the script. This list represents the values returned by the script when the returnAll variable is passed as true. For a list of the variables available to a dynamic link qualifier script, refer to Script Triggers Defined in Mappings.

Link qualifiers have no functionality on their own, but they can be referenced in reconciliation operations to manage situations where a single source object maps to multiple target objects. The following examples show how link qualifiers can be used in reconciliation operations:

-

Use link qualifiers during object creation, to create multiple target objects per source object.

The following mapping excerpt defines a transformation script that generates the value of the

dnattribute on an LDAP system. If the link qualifier isemployee, the value of the targetdnis set to"uid=userName,ou=employees,dc=example,dc=com". If the link qualifier iscustomer, the value of the targetdnis set to"uid=userName,ou=customers,dc=example,dc=com". The reconciliation operation iterates through the link qualifiers for each source record. In this case, two LDAP objects, with differentdns are created for each managed user object:{ "target" : "dn", "transform" : { "type" : "text/javascript", "globals" : { }, "source" : "if (linkQualifier === 'employee') { 'uid=' + source.userName + ',ou=employees,dc=example,dc=com'; } else if (linkQualifier === 'customer') { 'uid=' + source.userName + ',ou=customers,dc=example,dc=com'; }" }, "source" : "" }json -

Use link qualifiers with correlation queries. The correlation query assigns a link qualifier based on the values of an existing target object.

During source synchronization, IDM queries the target system for every source record and link qualifier, to check if there are any matching target records. If a match is found, the sourceId, targetId, and linkQualifier are all saved as the link.

The following excerpt of a sample mapping shows the two link qualifiers described previously (

employeeandcustomer). The correlation query first searches the target system for theemployeelink qualifier. If a target object matches the query, based on the value of itsdnattribute, IDM creates a link between the source object and that target object, and assigns theemployeelink qualifier to that link. This process is repeated for all source records. Then, the correlation query searches the target system for thecustomerlink qualifier. If a target object matches that query, IDM creates a link between the source object and that target object and assigns thecustomerlink qualifier to that link:"linkQualifiers" : ["employee", "customer"], "correlationQuery" : [ { "linkQualifier" : "employee", "type" : "text/javascript", "source" : "var query = {'_queryFilter': 'dn co \"' + uid=source.userName + 'ou=employees\"'}; query;" }, { "linkQualifier" : "customer", "type" : "text/javascript", "source" : "var query = {'_queryFilter': 'dn co \"' + uid=source.userName + 'ou=customers\"'}; query;" } ] ...jsonFor more information about correlation queries, refer to Writing Correlation Queries.

-

Use link qualifiers during policy validation to apply different policies based on the link type.

The following excerpt of a sample mapping shows two link qualifiers,

userandtest. Depending on the link qualifier, different actions are taken when the target record is ABSENT:{ "mappings" : [ { "name" : "systemLdapAccounts_managedUser", "source" : "system/ldap/account", "target" : "managed/user", "linkQualifiers" : [ "user", "test" ], "properties" : [ ... "policies" : [ { "situation" : "CONFIRMED", "action" : "IGNORE" }, { "situation" : "FOUND", "action" : "UPDATE } { "condition" : "/linkQualifier eq \"user\"", "situation" : "ABSENT", "action" : "CREATE", "postAction" : { "type" : "text/javascript", "source" : "java.lang.System.out.println('Created user: \');" } }, { "condition" : "/linkQualifier eq \"test\"", "situation" : "ABSENT", "action" : "IGNORE", "postAction" : { "type" : "text/javascript", "source" : "java.lang.System.out.println('Ignored user: ');" } }, ...jsonWith this sample mapping, the synchronization operation creates an object in the target system only if the potential match is assigned a

userlink qualifier. If the match is assigned atestqualifier, no target object is created. In this way, the process avoids creating duplicate test-related accounts in the target system.

Configure link qualifiers using the admin UI

-

From the navigation bar, click Configure > Mappings, and click the mapping to edit.

-

Click the Properties tab, and expand the Link Qualifier node.

-

Select Static or Dynamic, configure the link qualifier, and click Save.

| For an example that uses link qualifiers in conjunction with roles, refer to Link Multiple Accounts to a Single Identity. |

Prevent the accidental deletion of a target system

If a source resource is empty, the default behavior is to exit without failure and to log a warning similar to the following:

[318] Feb 19, 2020 1:51:56.455 PM org.forgerock.openidm.sync.NonClusteredRecon dispatchRecon WARNING: Cannot reconcile from an empty data source, unless allowEmptySourceSet is true.

The reconciliation summary is also logged in the reconciliation audit log.

This behavior prevents reconciliation operations from accidentally deleting everything in a target resource. In the event that a source system is unavailable but erroneously reports its status as up, the absence of source objects should not result in objects being removed on the target resource.

If you do want reconciliations of an empty source resource to proceed, override the default behavior by setting the allowEmptySourceSet property to true in the mapping. For example:

{

"mappings" : [

{

"name" : "systemCsvfileAccounts_managedUser",

"source" : "system/csvfile/account",

"allowEmptySourceSet" : true,

...When an empty source is reconciled, the data in the target is wiped out.

Scripts in mappings

You can use a number of script hooks to manipulate objects and attributes during synchronization. Scripts can be triggered during various stages of the synchronization process, and are defined as part of the mapping.

You can trigger a script when a managed or system object is created (onCreate), updated (onUpdate), or deleted (onDelete). You can also trigger a script when a link is created (onLink) or removed (onUnlink).

In the default synchronization mapping, changes are always written to target objects, not to source objects. However, you can explicitly include a call to an action that should be taken on the source object within the script.

Construct and manipulate attributes

The most common use of synchronization scripts is when a target object is created or updated.

The onUpdate script is always called for an UPDATE situation, even if the synchronization process determines that there is no difference between the source and target objects, and that the target object will not be updated.

If the onUpdate script has run and the synchronization process then determines that the target value to set is the same as its existing value, the change is prevented from synchronizing to the target.

The following excerpt of a sample mapping derives a DN for an LDAP entry when the corresponding managed entry is created:

{

"onCreate": {

"type": "text/javascript",

"source":

"target.dn = 'uid=' + source.uid + ',ou=people,dc=example,dc=com'"

}

}Perform other actions

Construct and Manipulate Attributes With Scripts shows how to manipulate attributes with scripts when objects are created and updated. You can also trigger scripts in response to other synchronization actions. For example, you might not want to delete a managed user directly when an external account is deleted, but instead unlink the objects and deactivate the user in another resource. Alternatively, you might delete the object in IDM and run a script to perform some subsequent action.

The following example shows a more advanced mapping configuration that exposes the script hooks available during synchronization:

{

"mappings": [

{

"name": "systemLdapAccount_managedUser",

"source": "system/ldap/account",

"target": "managed/user",

"validSource": {

"type": "text/javascript",

"file": "script/isValid.js"

},

"correlationQuery" : {

"type" : "text/javascript",

"source" : "var map = {'_queryFilter': 'uid eq \"' +

source.userName + '\"'}; map;"

},

"properties": [

{

"source": "uid",

"transform": {

"type": "text/javascript",

"source": "source.toLowerCase()"

},

"target": "userName"

},

{

"source": "",

"transform": {

"type": "text/javascript",

"source": "if (source.myGivenName)

{source.myGivenName;} else {source.givenName;}"

},

"target": "givenName"

},

{

"source": "",

"transform": {

"type": "text/javascript",

"source": "if (source.mySn)

{source.mySn;} else {source.sn;}"

},

"target": "familyName"

},

{

"source": "cn",

"target": "fullname"

},

{

"condition": {

"type": "text/javascript",

"source": "var clearObj = openidm.decrypt(object);

((clearObj.password != null) &&

(clearObj.ldapPassword != clearObj.password))"

},

"transform": {

"type": "text/javascript",

"source": "source.password"

},

"target": "__PASSWORD__"

}

],

"onCreate": {

"type": "text/javascript",

"source": "target.ldapPassword = null;

target.adPassword = null;

target.password = null;

target.ldapStatus = 'New Account'"

},

"onUpdate": {

"type": "text/javascript",

"source": "target.ldapStatus = 'OLD'"

},

"onUnlink": {

"type": "text/javascript",

"file": "script/triggerAdDisable.js"

},

"policies": [

{

"situation": "CONFIRMED",

"action": "UPDATE"

},

{

"situation": "FOUND",

"action": "UPDATE"

},

{

"situation": "ABSENT",

"action": "CREATE"

},

{

"situation": "AMBIGUOUS",

"action": "EXCEPTION"

},

{

"situation": "MISSING",

"action": "EXCEPTION"

},

{

"situation": "UNQUALIFIED",

"action": "UNLINK"

},

{

"situation": "UNASSIGNED",

"action": "EXCEPTION"

}

]

}

]

}The following list shows the properties that you can use as hooks in mapping configurations to call scripts:

- Triggered by Situation

-

onCreate, onUpdate, onDelete, onLink, onUnlink

- Object Filter

-

validSource, validTarget

- Correlating Objects

-

correlationQuery

- Triggered on Reconciliation

-

result

- Scripts Inside Properties

-

condition, transform

Scripts can obtain data from any connected system by using the openidm.read(id) function, where id is the identifier of the object to read.

The following example reads a managed user object from the repository:

repoUser = openidm.read("managed/user/9dce06d4-2fc1-4830-a92b-bd35c2f6bcbb");The following example reads an account from an external LDAP resource:

externalAccount = openidm.read("system/ldap/account/uid=bjensen,ou=People,dc=example,dc=com");|

For illustration purposes, this query targets a DN rather than a UID as it did in the previous example. The attribute that is used for the |

Generate log messages

IDM provides a logger object that you can use from scripts defined in your mapping. These scripts can log messages to the OSGi console and to log files. The logger object includes the following functions:

-

debug() -

error() -

info() -

trace() -

warn()

Consider the following mapping excerpt:

{

"mappings" : [

{

"name" : "systemCsvfileAccounts_managedUser",

"source" : "system/csvfile/account",

"target" : "managed/user",

"correlationQuery" : {

"type" : "text/javascript",

"source" : "var query = {'_queryId' : 'for-userName', 'uid' : source.name};query;"

},

"onCreate" : {

"type" : "text/javascript",

"source" : "logger.warn('Case onCreate: the source object contains: = {} ', source); source;"

},

"onUpdate" : {

"type" : "text/javascript",

"source" : "logger.warn('Case onUpdate: the source object contains: = {} ', source); source;"

},

"result" : {

"type" : "text/javascript",

"source" : "logger.warn('Case result: the source object contains: = {} ', source); source;"

},

"properties" : [

{

"transform" : {

"type" : "text/javascript",

"source" : "logger.warn('Case no Source: the source object contains: = {} ', source); source;"

},

"target" : "sourceTest1Nosource"

},

{

"source" : "",

"transform" : {

"type" : "text/javascript",

"source" : "logger.warn('Case emptySource: the source object contains: = {} ', source); source;"

},

"target" : "sourceTestEmptySource"

},

{

"source" : "description",

"transform" : {

"type" : "text/javascript",

"source" : "logger.warn('Case sourceDescription: the source object contains: = {} ', source); source"

},

"target" : "sourceTestDescription"

},

...

]

}

]

}The scripts that are defined for onCreate, onUpdate, and result log a warning message to the console whenever an object is created or updated, or when a result is returned. The script result includes the full source object.

The scripts that are defined in the properties section of the mapping log a warning message if the property in the source object is missing or empty. The last script logs a warning message that includes the description of the source object.

During a reconciliation operation, these scripts would generate output in the OSGi console, similar to the following:

2017-02... WARN Case no Source: the source object contains: = null [9A00348661C6790E7881A7170F747F...]

2017-02... WARN Case emptySource: the source object contains: = {roles=openidm-..., lastname=Jensen...]

2017-02... WARN Case no Source: the source object contains: = null [9A00348661C6790E7881A7170F747F...]

2017-02... WARN Case emptySource: the source object contains: = {roles=openidm..., lastname=Carter,...]

2017-02... WARN Case sourceDescription: the source object contains: = null [EEE2FF4BCE9748927A1832...]

2017-02... WARN Case sourceDescription: the source object contains: = null [EEE2FF4BCE9748927A1832...]

2017-02... WARN Case onCreate: the source object contains: = {roles=openidm-..., lastname=Carter, ...]

2017-02... WARN Case onCreate: the source object contains: = {roles=openidm-..., lastname=Jensen, ...]

2017-02... WARN Case result: the source object contains: = {SOURCE_IGNORED={count=0, ids=[]}, FOUND_ALL...]

You can use similar scripts to inject logging into any aspect of a mapping. You can also call the logger functions from any configuration file that has scripts hooks. For more information about the logger functions, refer to Log Functions.

Reuse links between mappings

When two mappings synchronize the same objects bidirectionally, use the links property in one mapping to have IDM use the same link for both mappings. If you do not specify a links property, IDM maintains a separate link for each mapping.

The following excerpt shows two mappings, one from MyLDAP accounts to managed users, and another from managed users to MyLDAP accounts. In the second mapping, the link property indicates that IDM should reuse the links created in the first mapping, rather than create new links:

{

"mappings": [

{

"name": "systemMyLDAPAccounts_managedUser",

"source": "system/MyLDAP/account",

"target": "managed/user"

},

{

"name": "managedUser_systemMyLDAPAccounts",

"source": "managed/user",

"target": "system/MyLDAP/account",

"links": "systemMyLDAPAccounts_managedUser"

}

]

}Reconcile with case-insensitive data stores

IDM is case-sensitive, which means that an uppercase ID is considered different from an otherwise identical lowercase ID during reconciliation. Some data stores, such as ForgeRock Directory Services (DS), are case-insensitive. This can be problematic during reconciliation, because the ID of the links created by reconciliation might not match the case of the IDs expected by IDM.

If a mapping inherits links by using the links property, you do not need to worry about case-sensitivity, because the mapping uses the setting of the referred links.

Alternatively, you can address case-sensitivity issues with target systems in the following ways:

-

Specify a case-insensitive data store. To do so, set the

sourceIdsCaseSensitiveortargetIdsCaseSensitiveproperties tofalsein the mapping for those links. For example, if the source LDAP data store is case-insensitive, set the mapping from the LDAP store to the managed user repository as follows:"mappings" : [ { "name" : "systemLdapAccounts_managedUser", "source" : "system/ldap/account", "sourceIdsCaseSensitive" : false, "target" : "managed/user", "properties" : [ ...jsonYou might also need to modify the connector configuration, setting the

enableFilteredResultsHandlerproperty tofalse:"resultsHandlerConfig" : { "enableFilteredResultsHandler":false },jsonDo not disable the filtered results handler for the CSV file connector. The CSV file connector does not perform filtering. Therefore, if you disable the filtered results handler for this connector, the full CSV file will be returned for every request. -

Use a case-insensitive option in your managed repository. For example, for a MySQL repository, change the collation of

managedobjectproperties.propvaluetoutf8_general_ci. For more information, refer to Case insensitivity for a JDBC repo.

In general, to address case-sensitivity, focus on database-, table-, or column-level collation settings. Queries performed against repositories configured in this way are subject to the collation, and are used for comparison.

Synchronization situations and actions

The synchronization process assesses source and target objects, and the links between them, and then determines the synchronization situation that applies to each object. The process then performs a specific action, usually on the target object, depending on the assessed situation.

The action that is taken for each situation is defined in the policies section of your synchronization mapping.

The following excerpt of a sample mapping shows the defined actions in that sample:

{

"policies": [

{

"situation": "CONFIRMED",

"action": "UPDATE"

},

{

"situation": "FOUND",

"action": "LINK"

},

{

"situation": "ABSENT",

"action": "CREATE"

},

{

"situation": "AMBIGUOUS",

"action": "IGNORE"

},

{

"situation": "MISSING",

"action": "IGNORE"

},

{

"situation": "SOURCE_MISSING",

"action": "DELETE"

},

{

"situation": "UNQUALIFIED",

"action": "IGNORE"

},

{

"situation": "UNASSIGNED",

"action": "IGNORE"

}

]

}You can also define these actions using the admin UI:

-

From the navigation bar, click Configure > Mappings, and click the mapping to edit.

-

Click the Behaviors tab, expand the Situational Event Scripts node, and configure event actions.

-

Click Save.

| If you do not define an action for a particular situation, IDM takes the default action for that situation. |

How IDM assesses synchronization situations

IDM performs reconciliation in two phases:

-

Source reconciliation accounts for source objects and associated links based on the configured mapping.

-

Target reconciliation iterates over the target objects that were not processed in the first phase.

For example, if a source object was deleted, the source reconciliation phase will not identify the target object that was previously linked to that source object. Instead, this orphaned target object is detected during the second phase.

Source reconciliation

During source reconciliation and liveSync, IDM iterates through the objects in the source resource. For reconciliation, the list of objects includes all objects that are available through the connector. For liveSync, the list contains only changed objects. IDM can filter objects from the list by using the following:

-

Scripts specified in the

validSourceproperty -

A query specified in the

sourceConditionproperty -

A query specified in the

sourceQueryproperty

For each object in the list, IDM assesses the following conditions:

-

Is the source object valid?

Valid source objects are categorized

qualifies=1. Invalid source objects are categorizedqualifies=0. Invalid objects include objects that were filtered out by avalidSourcescript orsourceCondition. For more information, refer to Filter Source and Target Objects With Scripts. -

Does the source object have a record in the links table?

Source objects that have a corresponding link in the repository’s

linkstable are categorizedlink=1. Source objects that do not have a corresponding link are categorizedlink=0. -

Does the source object have a corresponding valid target object?

Source objects that have a corresponding object in the target resource are categorized

target=1. Source objects that do not have a corresponding object in the target resource are categorizedtarget=0.

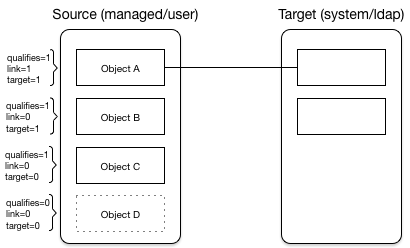

The following diagram illustrates the categorization of four sample objects during source reconciliation. In this example, the source is the managed user repository and the target is an LDAP directory:

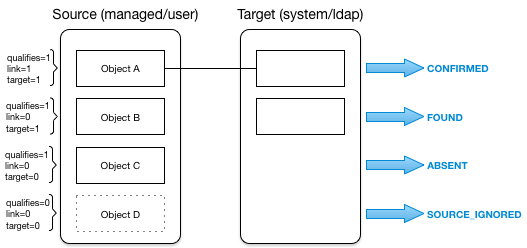

Based on the categorizations of source objects during the source reconciliation phase, the synchronization process assesses a situation for each source object, and executes the action that is configured for each situation.

Not all situations are detected during all synchronization types (reconciliation, implicit synchronization, and liveSync). The following table describes the set of synchronization situations detected during source reconciliation, the default action taken for each situation, and valid alternative actions that can be configured for each situation:

| Source Qualifies | Link Exists | Target Objects Found | Situation | Default Action | Possible Actions |

|---|---|---|---|---|---|

NO |

NO |

0 |

SOURCE_IGNORED |

IGNORE source object |

EXCEPTION, REPORT, NOREPORT, ASYNC |

NO |

NO |

1 |

UNQUALIFIED |

DELETE target object |

EXCEPTION, IGNORE, REPORT, NOREPORT, ASYNC |

NO |

NO |

> 1 |

UNQUALIFIED |

DELETE target objects |

EXCEPTION, IGNORE, REPORT, NOREPORT, ASYNC |

NO |

YES |

0 |

UNQUALIFIED |

DELETE linked target object [1] |

EXCEPTION, REPORT, NOREPORT, ASYNC |

NO |

YES |

1 |

UNQUALIFIED |

DELETE linked target object |

EXCEPTION, REPORT, NOREPORT, ASYNC |

NO |

YES |

> 1 |

UNQUALIFIED |

DELETE linked target object |

EXCEPTION, REPORT, NOREPORT, ASYNC |

YES |

NO |

0 |

ABSENT |

CREATE target object |

EXCEPTION, IGNORE, REPORT, NOREPORT, ASYNC |

YES |

NO |

1 |

FOUND |

UPDATE target object |

EXCEPTION, IGNORE, REPORT, NOREPORT, ASYNC |

YES |

NO |

1 |

FOUND_ALREADY_LINKED [2] |

EXCEPTION |

IGNORE, REPORT, NOREPORT, ASYNC |

YES |

NO |

> 1 |

AMBIGUOUS [3] |

EXCEPTION |

REPORT, NOREPORT, ASYNC |

YES |

YES |

0 |

MISSING [4] |

EXCEPTION |

CREATE, UNLINK, DELETE, IGNORE, REPORT, NOREPORT, ASYNC |

YES |

YES |

1 |

CONFIRMED |

UPDATE target object |

IGNORE, REPORT, NOREPORT, ASYNC |

Based on this table, the following situations would be assigned to the previous diagram:

Target reconciliation

During source reconciliation, the synchronization process cannot detect situations where no source object exists. In this case, the situation is detected during the second reconciliation phase, target reconciliation.

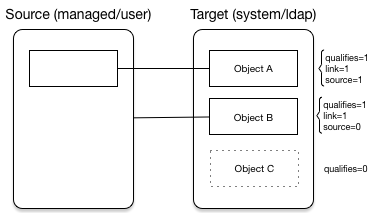

Target reconciliation iterates through the target objects that were not accounted for during source reconciliation. The process checks each object against the validTarget filter, determines the appropriate situation, and executes the action configured for the situation. Target reconciliation evaluates the following conditions:

-

Is the target object valid?

Valid target objects are categorized

qualifies=1. Invalid target objects are categorizedqualifies=0. Invalid objects include objects that were filtered out by avalidTargetscript. For more information, refer to Filter Source and Target Objects With Scripts. -

Does the target object have a record in the links table?

Target objects that have a corresponding link in the

linkstable are categorizedlink=1. Target objects that do not have a corresponding link are categorizedlink=0. -

Does the target object have a corresponding source object?

Target objects that have a corresponding object in the source resource are categorized

source=1. Target objects that do not have a corresponding object in the source resource are categorizedsource=0.

The following diagram illustrates the categorization of three sample objects during target reconciliation:

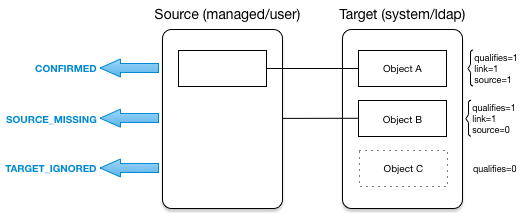

Based on the categorizations of target objects during the target reconciliation phase, a situation is assessed for each remaining target object. Not all situations are detected in all synchronization types. The following table describes the set of situations that can be detected during the target reconciliation phase:

| Target Qualifies | Link Exists | Source Exists | Source Qualifies | Situation | Default Action | Possible Actions |

|---|---|---|---|---|---|---|

NO |

n/a |

n/a |

n/a |

TARGET_IGNORED [5] |

IGNORE |

DELETE, UNLINK, REPORT, NOREPORT, ASYNC |

YES |

NO |

NO |

n/a |

UNASSIGNED |

EXCEPTION |

IGNORE, REPORT, NOREPORT, ASYNC |

YES |

YES |

YES |

YES |

CONFIRMED |

UPDATE target object |

IGNORE, REPORT, NOREPORT |

YES |

YES |

YES |

NO |

UNQUALIFIED [6] |

DELETE |

UNLINK, EXCEPTION, IGNORE, REPORT, NOREPORT, ASYNC |

YES |

YES |

NO |

n/a |

SOURCE_MISSING [7] |

EXCEPTION |

DELETE, UNLINK, IGNORE, REPORT, NOREPORT, ASYNC |

Based on this table, the following situations would be assigned to the previous diagram:

Situations specific to implicit synchronization and liveSync

Certain situations occur only during implicit synchronization (when changes made in the repository are pushed out to external systems) and liveSync (when IDM polls external system change logs for changes and updates the repository).

The following table shows the situations that pertain only to implicit sync and liveSync, when records are deleted from the source or target resource.

| Source Qualifies | Link Exists | Targets Found [8] | Targets Qualify | Situation | Default Action | Possible Actions |

|---|---|---|---|---|---|---|

n/a |

YES |

0 |

n/a |

LINK_ONLY |

EXCEPTION |

IGNORE, REPORT, NOREPORT, ASYNC |

n/a |

YES |

1 |

1 |

SOURCE_MISSING |

EXCEPTION |

IGNORE, REPORT, NOREPORT, ASYNC |

n/a |

YES |

1 |

0 |

TARGET_IGNORED |

IGNORE |

DELETE, UNLINK, EXCEPTION, REPORT, NOREPORT, ASYNC |

n/a |

NO |

0 |

n/a |

ALL_GONE |

IGNORE |

EXCEPTION, REPORT, NOREPORT, ASYNC |

YES |

NO |

0 |

n/a |

ALL_GONE |

IGNORE |

EXCEPTION, REPORT, NOREPORT, ASYNC |

YES |

NO |

1 |

1 |

UNASSIGNED |

EXCEPTION |

REPORT, NOREPORT |

YES |

NO |

> 1 |

> 1 |

AMBIGUOUS |

EXCEPTION |

IGNORE, REPORT, NOREPORT, ASYNC |

NO |

NO |

0 |

n/a |

ALL_GONE |

IGNORE |

EXCEPTION, REPORT, NOREPORT, ASYNC |

NO |

NO |

1 |

1 |

TARGET_IGNORED |

IGNORE target object |

DELETE, UNLINK, EXCEPTION, REPORT, NOREPORT, ASYNC |

NO |

NO |

> 1 |

> 1 |

UNQUALIFIED |

DELETE target objects |

EXCEPTION, IGNORE, REPORT, NOREPORT, ASYNC |

Synchronization actions

When an object has been assigned a situation, the synchronization process takes the configured action on that object. If no action is configured, the default action for that situation applies.

The following actions can be taken:

CREATE-

Create and link a target object.

UPDATE-

Link and update a target object.

DELETE-

Delete and unlink the target object.

LINK-

Link the correlated target object.

UNLINK-

Unlink the linked target object.

EXCEPTION-

Flag the link situation as an exception.

Do not use this action for liveSync mappings.

In the context of liveSync, the EXCEPTION action triggers the liveSync failure handler, and the operation is retried in accordance with the configured retry policy. This is not useful because the operation will never succeed. If the configured number of retries is high, these pointless retries can continue for a long period of time.

If the maximum number of retries is exceeded, the liveSync operation terminates and does not continue processing the entry that follows the failed (EXCEPTION) entry. LiveSync is only resumed at the next liveSync polling interval.

This behavior differs from reconciliation, where a failure to synchronize a single source-target association does not fail the entire reconciliation.

IGNORE-

Do not change the link or target object state.

REPORT-

Do not perform any action but report what would happen if the default action were performed.

NOREPORT-

Do not perform any action or generate any report.

ASYNC-

An asynchronous process has been started, so do not perform any action or generate any report.

Launch a script as an action

In addition to the static synchronization actions described previously, you can provide a script to run in specific synchronization situations. The script can be either JavaScript or Groovy. You can specify the script inline (with the "source" property), or reference it from a file, (with the "file" property).

The following excerpt of a sample mapping specifies that an inline script should be invoked when a synchronization operation assesses an entry as ABSENT in the target system. The script checks whether the employeeType property of the corresponding source entry is contractor. If so, the source entry is ignored. Otherwise, the entry is created on the target system:

{

"situation" : "ABSENT",

"action" : {

"type" : "text/javascript",

"globals" : { },

"source" : "if (source.employeeType === 'contractor') {action='IGNORE'}

else {action='CREATE'};action;"

},

}The following variables are available to a script that is called as an action:

-

source -

target -

linkQualifier -

recon(whererecon.actionParamcontains information about the current reconciliation operation)

For more information about the variables available to scripts, refer to Script variables.

The result obtained from evaluating this script must be a string whose value is one of the synchronization actions listed in Synchronization actions. This resulting action is shown in the reconciliation log.

To launch a script as a synchronization action using the admin UI:

-

From the navigation bar, click Configure > Mappings, and click the mapping to edit.

-

Click the Behaviors tab, and expand the Policies node.

-

Click the edit button for the situation action to edit.

-

On the Perform this Action tab, click Script, and enter the script that corresponds to the action.

-

Click Submit, and then click Save.

Launch a workflow as an action

The triggerWorkflowFromSync.js

script launches a predefined workflow when a synchronization operation assesses a particular situation. The mechanism for triggering this script is the same as for any other script. The script is provided in the openidm/bin/defaults/script/workflow directory. If you customize the script, copy it to the script directory of your project to ensure that your customizations are preserved during an upgrade.

The parameters for the workflow are passed as properties of the action parameter.

The following extract of a sample mapping specifies that, when a synchronization operation assesses an entry as ABSENT, the workflow named managedUserApproval is invoked:

{

"situation" : "ABSENT",

"action" : {

"workflowName" : "managedUserApproval",

"type" : "text/javascript",

"file" : "workflow/triggerWorkflowFromSync.js"

}

}To launch a workflow as a synchronization action Using the admin UI:

-

From the navigation bar, click Configure > Mappings, and click the mapping to edit.

-

Click the Behaviors tab, and expand the Policies node.

-

Click the edit button for the situation action to edit.

-

On the Perform this Action tab, click Workflow, and enter the details of the workflow to launch.

-

Click Submit, and then click Save.

Correlate source objects with existing target objects

When a synchronization operation creates an object on a target system, it also creates a link between the source and target object. IDM then uses that link to determine the object’s synchronization situation during later synchronization operations. For a list of synchronization situations, refer to How IDM assesses synchronization situations.

Every synchronization operation can correlate existing source and target objects. Correlation matches source and target objects, based on the results of a query or script, and creates links between matched objects.

Correlation queries and correlation scripts are configured as part of the mapping. Each query or script is specific to the mapping for which it is configured.

Configure correlation using the admin UI

-

From the navigation bar, click Configure > Mappings.

-

From the Mappings page, click the mapping to correlate.

-

From the Mapping Detail page, click the Association tab.

-

Expand the Association Rules node, click the drop-down menu, and select one of the following:

-

Correlation Queries

-

Correlation Script

-

-

Build and/or write your script or query, and click Save.

Correlation queries

IDM processes a correlation query by constructing a query map. The content of the query is generated dynamically, using values from the source object. For each source object, a new query is sent to the target system, using (possibly transformed) values from the source object for its execution.

Queries are run against target resources, either managed or system objects, depending on the mapping. Correlation queries on system objects access the connector, which executes the query on the external resource.

You express a correlation query using a query filter (_queryFilter). For more information about query filters, refer to Define and call data queries. The synchronization process executes the correlation query to search through the target system for objects that match the current source object.

To configure a correlation query, define a script whose source returns a query that uses the _queryFilter, for example:

{ "_queryFilter" : "uid eq \"" + source.userName + "\"" }Use filtered queries to correlate objects

For filtered queries, the script that is defined or referenced in the correlationQuery property must return an object with the following elements:

-

The element that is being compared on the target object; for example,

uid.The element on the target object is not necessarily a single attribute. Your query filter can be simple or complex; valid query filters range from a single operator to an entire boolean expression tree.

If the target object is a system object, this attribute must be referred to by its IDM name rather than its ICF

nativeName. For example, with the following provisioner configuration, the attribute to use in the correlation query would beuidand not+NAME`:... "uid" : { "type" : "string", "nativeName" : "__NAME__", "required" : true, "nativeType" : "string" } ...json -

The value to search for in the query.

This value is generally based on one or more values from the source object. However, it does not have to match the value of a single source object property. You can define how your script uses the values from the source object to find a matching record in the target system.

You might use a transformation of a source object property, such as

toUpperCase(). You can concatenate that output with other strings or properties. You can also use this value to call an external REST endpoint, and redirect the response to the final "value" portion of the query.

The following correlation query matches source and target objects if the value of the uid attribute on the target is the same as the userName attribute on the source:

"correlationQuery" : {

"type" : "text/javascript",

"source" : "var qry = {'_queryFilter': 'uid eq \"' + source.userName + '\"'}; qry"

},The query can return zero or more objects. The situation assigned to the source object depends on the number of target objects that are returned, and on the presence of any link qualifiers in the query. For information about synchronization situations, refer to How Synchronization Situations Are Assessed. For information about link qualifiers, refer to Map a Single Source Object to Multiple Target Objects.

Create Correlation Queries Using the Expression Builder

The Expression Builder is a wizard that lets you quickly build expressions using drop-down menu options.

-

From the navigation bar, click Configure > Mappings.

-

On the Mappings page, click the mapping to correlate.

-

From the Mapping Detail page, click the Association tab.

-

Expand the Association Rules node, click the drop-down menu, and select Correlation Queries.

-

Click Add Correlation Query.

-

In the Correlation Query window, click the Link Qualifier drop-down menu, and select a link qualifier.

If you do not need to correlate multiple potential target objects per source object, select the default link qualifier. For more information about linking to multiple target objects, refer to Map a Single Source Object to Multiple Target Objects.

-

Select Expression Builder.

-

To create an expression, use the drop-down menus to add and remove items, as necessary. List the fields to use for matching existing items in your source to items in your target.

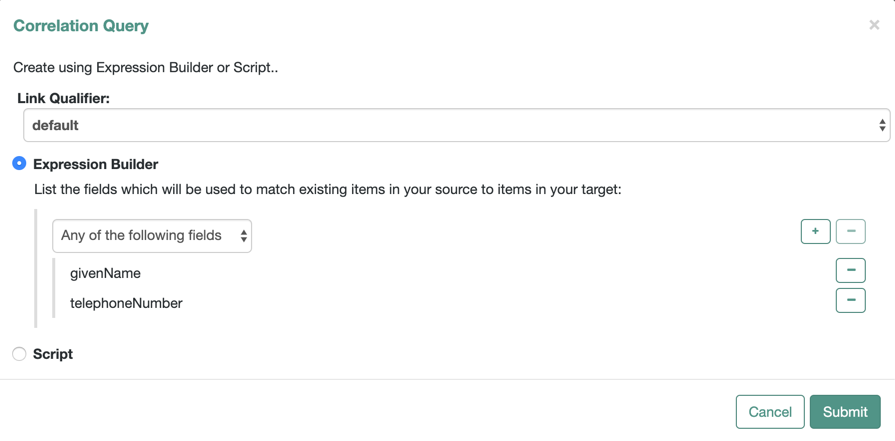

The following example displays an Expression Builder correlation query for a mapping from

managed/usertosystem/ldap/accountsobjects. The query creates a match between the source (managed) object and the target (LDAP) object if the value of thegivenNameor thetelephoneNumberof those objects is the same.

-

After you finish building the expression, click Submit.

-

On the Mapping Detail page, under the Association Rules node, click Save.

The correlation query displays as follows in the mapping:

"correlationQuery" : [

{

"linkQualifier" : "default",

"expressionTree" : {

"any" : [

"givenName",

"telephoneNumber"

]

},

"mapping" : "managedUser_systemLdapAccounts",

"type" : "text/javascript",

"file" : "ui/correlateTreeToQueryFilter.js"

}

]Correlation scripts

In general, a correlation query should meet the requirements of most deployments. However, if you need a more powerful correlation mechanism than a simple query can provide, you can write a correlation script with additional logic. Correlation scripts can be useful if your query needs extra processing, such as fuzzy-logic matching or out-of-band verification with a third-party service over REST. Correlation scripts are generally more complex than correlation queries, and impose no restrictions on the methods used to find matching objects.

A correlation script must execute a query and return the result of that query. The result of a correlation script is a list of maps, each of which contains a candidate _id value. If no match is found, the script returns a zero-length list. If exactly one match is found, the script returns a single-element list. If there are multiple ambiguous matches, the script returns a list with multiple elements. There is no assumption that the matching target record or records can be found by a simple query on the target system. All of the work required to find matching records is left to the script.

To invoke a correlation script, use one of the following properties:

correlationQuery-

Returns a

Mapwhose values specify theQueryFilterfor the sync engine to execute. correlationScript-

Returns a

List<Map>whose value is a list of correlated objects from the target.You can invoke a correlation script inline:

"correlationScript" : { "type": "text/javascript", "source": " var resultData = openidm.query("system/ldap/account", myQuery); return resultData.result;" }jsonYou can also invoke a correlation script using a script file:

"correlationScript" : { "type": "text/javascript", "file": "myCustomCorrelationScript.js" }json

Correlation Script Using Link Qualifiers

The following example shows a correlation script that uses link qualifiers. The script returns resultData.result—a list of maps, each of which has an _id entry. These entries will be the values that are used for correlation.

(function () {

var query, resultData;

switch (linkQualifier) {

case "test":

logger.info("linkQualifier = test");

query = {'_queryFilter': 'uid eq \"' + source.userName + '-test\"'};

break;

case "user":

logger.info("linkQualifier = user");

query = {'_queryFilter': 'uid eq \"' + source.userName + '\"'};

break;

case "default":

logger.info("linkQualifier = default");

query = {'_queryFilter': 'uid eq \"' + source.userName + '\"'};

break;

default:

logger.info("No linkQualifier provided.");

break;

}

var resultData = openidm.query("system/ldap/account", query);

logger.info("found " + resultData.result.length + " results for link qualifier " + linkQualifier)

for (i=0;i<resultData.result.length;i++) {

logger.info("found target: " + resultData.result[i]._id);

}

return resultData.result;

} ());Configure a correlation script using the admin UI

-

From the navigation bar, click Configure > Mappings.

-

On the Mappings page, select the mapping to correlate.

-

From the Mapping Detail page, click the Association tab.

-

Expand the Association Rules node, click the drop-down menu, and select Correlation Script.

-

From the Type drop-down menu, select JavaScript or Groovy.

-

Enter the correlation script:

-

To use an inline script, select Inline Script, and type the script source.

-

To use a script file, select File Path, and enter the path to the script.

To create a correlation script, use the details from the source object to find the matching record in the target system. If you are using link qualifiers to match a single source record to multiple target records, you must also use the value of the

linkQualifiervariable within your correlation script to find the target ID that applies for that qualifier. -

-

Click Save.

Manage reconciliation

To trigger, cancel, and monitor reconciliation operations over REST, use the openidm/recon REST endpoint. You can perform most of these actions using the admin UI.

Trigger a reconciliation

The following example triggers a reconciliation operation over REST based on the systemLdapAccounts_managedUser mapping:

curl \ --header "X-OpenIDM-Username: openidm-admin" \ --header "X-OpenIDM-Password: openidm-admin" \ --header "Accept-API-Version: resource=1.0" \ --request POST \ "http://localhost:8080/openidm/recon?_action=recon&mapping=systemLdapAccounts_managedUser"

By default, a reconciliation run ID is returned immediately when the reconciliation operation is initiated. Clients can make subsequent calls to the reconciliation service, using this reconciliation run ID to query its state, and to call operations on it. For an example, refer to Reconciliation Details.

The reconciliation run initiated previously would return something similar to the following:

{

"_id": "05f63bce-4aaa-492e-9e86-a702d5c9d6c0-1144",

"state": "ACTIVE"

}To complete the reconciliation operation before the reconciliation run ID is returned, set the waitForCompletion property to true when the reconciliation is initiated:

curl \ --header "X-OpenIDM-Username: openidm-admin" \ --header "X-OpenIDM-Password: openidm-admin" \ --header "Accept-API-Version: resource=1.0" \ --request POST \ "http://localhost:8080/openidm/recon?_action=recon&mapping=systemLdapAccounts_managedUser&waitForCompletion=true"

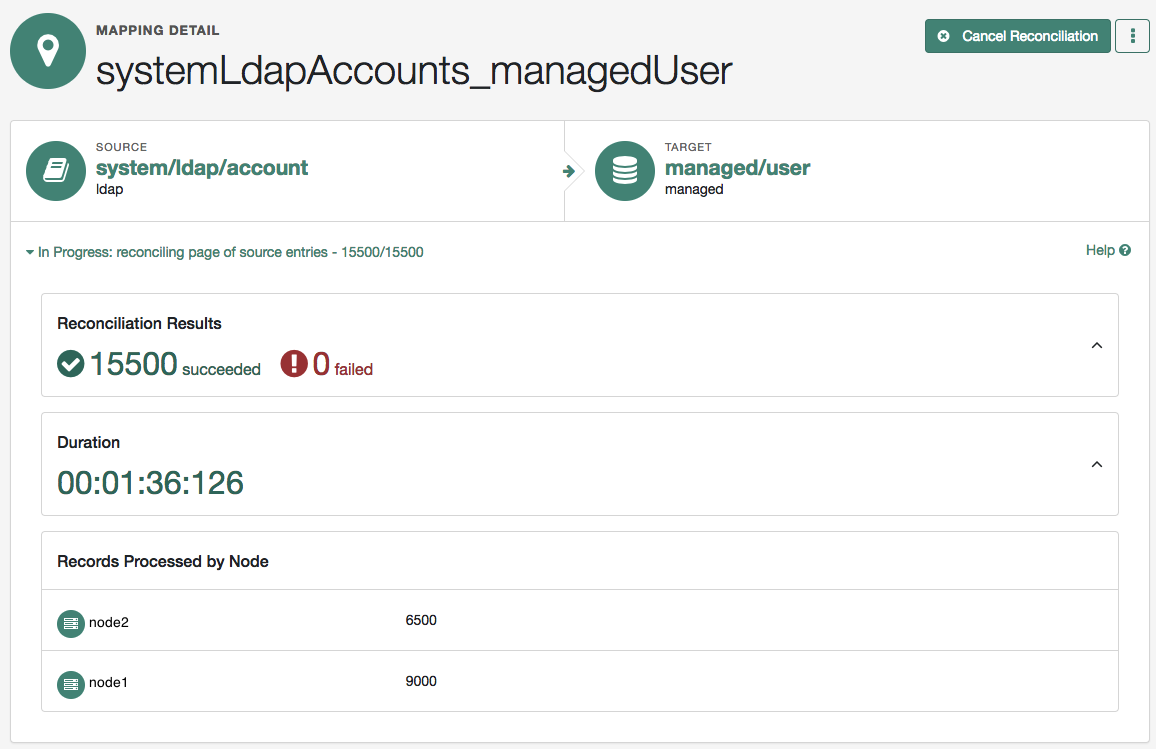

|

To trigger this reconciliation using the admin UI, click Configure > Mappings, select a mapping, then click Reconcile. If you click Cancel Reconciliation before it completes, you will need to start the reconciliation again. |

Cancel a reconciliation

To cancel an in progress reconciliation, specify the reconciliation run ID. The following REST call cancels the reconciliation run initiated in the previous section:

curl \ --header "X-OpenIDM-Username: openidm-admin" \ --header "X-OpenIDM-Password: openidm-admin" \ --header "Accept-API-Version: resource=1.0" \ --request POST \ "http://localhost:8080/openidm/recon/0890ad62-4738-4a3f-8b8e-f3c83bbf212e?_action=cancel"

The output for a reconciliation cancellation request is similar to the following:

{

"status":"INITIATED",

"action":"cancel",

"_id":"0890ad62-4738-4a3f-8b8e-f3c83bbf212e"

}If the reconciliation run is waiting for completion before its ID is returned, obtain the reconciliation run ID from the list of active reconciliations, as described in the following section.

|

To cancel a reconciliation run in progress using the admin UI, click Configure > Mappings, click on the mapping reconciliation to cancel, and click Cancel Reconciliation. |

List reconciliation history

Display a list of reconciliation processes that have completed, and those that are in progress, by running a RESTful GET on "http://localhost:8080/openidm/recon".

The following example displays all reconciliation runs:

curl \ --header "X-OpenIDM-Username: openidm-admin" \ --header "X-OpenIDM-Password: openidm-admin" \ --header "Accept-API-Version: resource=1.0" \ --request GET \ "http://localhost:8080/openidm/recon"

Example Output

The output is similar to the following, with one item for each reconciliation run:

"reconciliations": [

{

"_id": "05f63bce-4aaa-492e-9e86-a702d5c9d6c0-1144",

"mapping": "systemLdapAccounts_managedUser",

"state": "SUCCESS",

"stage": "COMPLETED_SUCCESS",

"stageDescription": "reconciliation completed.",

"progress": {

"source": {

"existing": {

"processed": 2,

"total": "2"

}

},

"target": {

"existing": {

"processed": 0,

"total": "0"

},

"created": 2,

"unchanged": 0,

"updated": 0,

"deleted": 0

},

"links": {

"existing": {

"processed": 0,

"total": "0"

},

"created": 2

}

},

"situationSummary": {

"SOURCE_IGNORED": 0,

"FOUND_ALREADY_LINKED": 0,

"UNQUALIFIED": 0,

"ABSENT": 2,

"TARGET_IGNORED": 0,

"MISSING": 0,

"ALL_GONE": 0,

"UNASSIGNED": 0,

"AMBIGUOUS": 0,

"CONFIRMED": 0,

"LINK_ONLY": 0,

"SOURCE_MISSING": 0,

"FOUND": 0

},

"statusSummary": {

"SUCCESS": 2,

"FAILURE": 0

},

"durationSummary": {

"sourceQuery": {

"min": 42,

"max": 42,

"mean": 42,

"count": 1,

"sum": 42,

"stdDev": 0

},

"auditLog": {

"min": 0,

"max": 1,

"mean": 0,

"count": 24,

"sum": 15,

"stdDev": 0

},

"linkQuery": {

"min": 5,

"max": 5,

"mean": 5,

"count": 1,

"sum": 5,

"stdDev": 0

},

"targetQuery": {

"min": 3,

"max": 3,

"mean": 3,

"count": 1,

"sum": 3,

"stdDev": 0

},

"targetPhase": {

"min": 0,

"max": 0,

"mean": 0,

"count": 1,

"sum": 0,

"stdDev": 0

},

"sourceObjectQuery": {

"min": 6,

"max": 34,

"mean": 21,

"count": 22,

"sum": 474,

"stdDev": 9

},

"postMappingScript": {

"min": 0,

"max": 1,

"mean": 0,